
OpenClarity Documentation

OpenClarity is an open source tool for agentless detection and management of Virtual Machine Software Bill Of Materials (SBOM) and security threats such as vulnerabilities, exploits, malware, rootkits, misconfigurations and leaked secrets.

Join OpenClarity’s Slack channel to hear about the latest announcements and upcoming activities. We would love to get your feedback!


Why OpenClarity?

Virtual machines (VMs) are the most widely used service across all hyperscalers. AWS, Azure, GCP, and others have virtual computing services that are used not only as standalone VM services but also as the most popular method for hosting containers (for example, Docker, Kubernetes).

VMs are vulnerable to multiple threats:

- Software vulnerabilities

- Leaked Secrets/Passwords

- Malware

- System Misconfiguration

- Rootkits

There are many good open source and commercial solutions that provide threat detection for VMs, covering the different threat categories above.

However, there are challenges with assembling and managing these tools yourself:

- Complex installation, configuration, and reporting.

- Integration with deployment automation.

- Siloed reporting and visualization.

The OpenClarity project is focused on unifying detection and management of VM security threats in an agentless manner.

For more information on the capabilities of OpenClarity, see Features.

Getting Started

For step-by-step guidance on how to deploy OpenClarity across different environments, including AWS, Azure, GCP, and Docker, see Getting Started and choose your preferred provider for detailed deployment instructions.

Overview

OpenClarity uses a pluggable scanning infrastructure to provide:

- SBOM analysis

- Package and OS vulnerability detection

- Exploit detection

- Leaked secret detection

- Malware detection

- Misconfiguration detection

- Rootkit detection

The pluggable scanning infrastructure uses several tools that can be enabled/disabled on an individual basis. OpenClarity normalizes, merges and provides a robust visualization of the results from these various tools.

These tools include:

- SBOM Generation and Analysis

- Vulnerability detection

- Exploits

- Secrets

- Malware

- Misconfiguration

- Rootkits

* Windows only

** Linux and MacOS only

Asset Discovery

The OpenClarity stack supports the automatic discovery of assets in the following providers:

Supported Filesystems

The following filesystem operations are supported on different host types:

| Host | List block devices | Mount Ext2, Ext3, Ext4 | Mount XFS | Mount NTFS |
| --- | --- | --- | --- | --- |
| Linux | Supported | Supported | Supported | Supported |
| Darwin | Supported | Supported | Supported | Supported |
| Windows | Not supported | Not supported | Not supported | Not supported |

Architecture

A high-level architecture overview is available under OpenClarity Stack.

Roadmap

The OpenClarity project roadmap is available here.

Contributing

If you are ready to jump in and test, add code, or help with documentation, please follow the instructions in our contributing guide for details on how to open issues and set up OpenClarity for development and testing.

Code of Conduct

You can view our code of conduct here.

License

Apache License, Version 2.0

1 - Getting Started

The following sections describe the installation of the OpenClarity backend and the CLI, and show you the most common tasks that you can perform with OpenClarity.

1.1 - Deploy on AWS

An AWS CloudFormation template is provided for quick deployment of the OpenClarity environment.

Note: To avoid extra costs (cross-region snapshots), you may want to deploy the OpenClarity AWS CloudFormation template in the same region as most of the VMs that you want to scan with OpenClarity.

The following figure shows the basic AWS resources that the OpenClarity CloudFormation template creates:

- a VPC with a public and a private subnet, and

- an AWS Internet Gateway (IGW) and a NAT Gateway (NGW) in the VPC.

The public subnet (OpenClarityServerSubnet) hosts the OpenClarity Server (OpenClarityServer) EC2 instance. The OpenClarity server houses the scanning configuration, the UI, and other control components. The EC2 instance is assigned an external IPv4 address (EIP) for SSH and web UI access.

The private subnet (OpenClarityScannerSubnet) hosts the VM snapshot instances (EC2) that are scanned for security vulnerabilities.

Prerequisites

Deployment steps

To deploy the OpenClarity AWS CloudFormation Stack, you can:

- click this quick-create link to navigate directly to the AWS CloudFormation console and jump to the wizard instructions, or

- complete the following steps.

- Download the latest OpenClarity release.

wget https://github.com/openclarity/openclarity/releases/download/v1.1.2/aws-cloudformation-v1.1.2.tar.gz

Alternatively, copy the AWS CloudFormation template file from the project repository to deploy the latest development code and skip the next step.

- Create a new directory and extract the files.

mkdir aws-cloudformation-v1.1.2

tar -xvzf aws-cloudformation-v1.1.2.tar.gz -C aws-cloudformation-v1.1.2

- Log in to the AWS CloudFormation console and go to the AWS CloudFormation Stacks section, then select Create Stack > With New Resources (standard).

- Select Template is ready and Upload a template file, then click Upload a template file/Choose file and upload the previously downloaded CFN template file.

- In the OpenClarity CloudFormation Stack wizard, set the following:

- Enter a name for the stack.

- Select the InstanceType (defaults to t2.large for the OpenClarity Server and the scanner VMs).

- Specify the SSH key for the EC2 instance in the KeyName field. You will need this key to connect to OpenClarity.

- Adjust SSHLocation according to your policies.

- Do not change AdvancedConfiguration unless you are building from a custom registry.

- Click NEXT.

- (Optional) Add tags as needed for your environment. You can use the defaults unless you need to adjust for your own policies.

- Click NEXT, then scroll to the bottom of the screen, and check I acknowledge….

- Click SUBMIT.

- Once the stack is deployed successfully, copy the OpenClarity SSH address from the Outputs tab.

- Open an SSH tunnel to the OpenClarity server.

ssh -N -L 8080:localhost:80 -i "<Path to the SSH key specified during install>" ubuntu@<OpenClarity SSH Address copied during install>

- Access the OpenClarity UI.

- Open the OpenClarity UI in your browser at http://localhost:8080/. The dashboard opens.

- (Optional) If needed, you can access the API at http://localhost:8080/api. For details on the API, see the API reference.
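Once the tunnel is open, the UI can take a moment to respond. If you script the deployment, a small polling loop helps. The following is a minimal Python sketch using only the standard library; `wait_for_http` is a hypothetical helper, not part of OpenClarity.

```python
import time
import urllib.error
import urllib.request


def wait_for_http(url: str, timeout: float = 120.0, interval: float = 2.0) -> bool:
    """Poll `url` until the server answers with a status below 500, or give up after `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=5):
                return True  # a 2xx/3xx answer means the tunnel and the UI are up
        except urllib.error.HTTPError as err:
            # The server answered; a 4xx still proves the gateway is reachable.
            if err.code < 500:
                return True
        except OSError:
            # Connection refused/reset or timeout: tunnel or server not ready yet.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

For example, `wait_for_http("http://localhost:8080/")` returns `True` once the UI answers through the tunnel, and `False` if it does not answer within the timeout.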

Next steps

Complete the First Tasks on the UI.

1.2 - Deploy on Azure

Prerequisites

Deployment steps

- Click here to deploy OpenClarity’s custom template.

- Fill out the required Project details and Instance details in the Basics tab.

You can set the following parameters:

| Parameter | Required | Description |
| --- | --- | --- |
| Subscription | True | Azure subscription where resources will be billed. |
| Region | False | Azure region where resources will be deployed. |
| OpenClarity Deploy Postfix | True | Postfix for Azure resource group name (e.g. openclarity-<postfix>). |
| OpenClarity Server SSH Username | True | SSH Username for the OpenClarity Server Virtual Machine. |
| OpenClarity Server SSH Public Key | True | SSH Public Key for the OpenClarity Server Virtual Machine. Paste the contents of ~/.ssh/id_rsa2.pub here. |
| OpenClarity Server VM Size | True | The size of the OpenClarity Server Virtual Machine. |
| OpenClarity Scanner VMs Size | True | The size of the OpenClarity Scanner Virtual Machines. |
| Security Type | False | Security Type of the OpenClarity Server Virtual Machine, e.g. TrustedLaunch (default) or Standard. |

- (Optional) In the Advanced tab, modify the Container Image for each service if a specific OpenClarity version is required. Then, select the delete policy and the database.

| Parameter | Required | Description |
| --- | --- | --- |
| Service Container Image | True | Docker Container Image to use for each service. |
| Asset Scan Delete Policy | True | Delete Policy for resources created when performing an asset scan, e.g. Always, OnSuccess or Never. |
| Database To Use | True | Database type to use, e.g. SQLite, PostgreSQL or External PostgreSQL. |
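The three Asset Scan Delete Policy values suggest the following cleanup semantics. This is a minimal Python sketch inferred from the policy names only, not OpenClarity's implementation; `DeletePolicy` and `should_delete_scan_resources` are hypothetical names.

```python
from enum import Enum


class DeletePolicy(str, Enum):
    ALWAYS = "Always"
    ON_SUCCESS = "OnSuccess"
    NEVER = "Never"


def should_delete_scan_resources(policy: str, scan_succeeded: bool) -> bool:
    """Decide whether to clean up the resources an asset scan created (for example snapshots and scanner VMs)."""
    p = DeletePolicy(policy)  # raises ValueError for an unknown policy name
    if p is DeletePolicy.ALWAYS:
        return True
    if p is DeletePolicy.ON_SUCCESS:
        # Keep the resources of failed scans so they can be inspected.
        return scan_succeeded
    return False  # Never: leave everything in place
```

Under this reading, OnSuccess is a reasonable choice while troubleshooting, since failed scans keep their resources for inspection.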

- Click Review + create to create the deployment.

- Once the deployment is completed successfully, copy the OpenClarity SSH address from the Outputs tab.

- Open an SSH tunnel to the OpenClarity server. Use the private key that matches the SSH public key and the SSH username that you specified in the Basics tab.

ssh -N -L 8080:localhost:80 -i "<Path to the private key matching the SSH public key specified during install>" <SSH username specified during install>@<OpenClarity SSH Address copied during install>

- Access the OpenClarity UI.

- Open the OpenClarity UI in your browser at http://localhost:8080/. The dashboard opens.

- (Optional) If needed, you can access the API at http://localhost:8080/api. For details on the API, see the API reference.

Next steps

Complete the First Tasks on the UI.

1.3 - Deploy on Docker

Prerequisites

Deployment steps

To run OpenClarity in Docker on a local machine, complete the following steps.

- Download the latest OpenClarity release.

wget https://github.com/openclarity/openclarity/releases/download/v1.1.2/docker-compose-v1.1.2.tar.gz

- Create a new directory, extract the files, and navigate to the directory.

mkdir docker-compose-v1.1.2

tar -xvzf docker-compose-v1.1.2.tar.gz -C docker-compose-v1.1.2

cd docker-compose-v1.1.2

- Start every control plane element with the docker compose file.

docker compose --project-name openclarity --file docker-compose.yml up -d --wait --remove-orphans

The output should be similar to:

[+] Running 14/14
⠿ Network openclarity Created 0.2s
⠿ Volume "openclarity_grype-server-db" Created 0.0s
⠿ Volume "openclarity_apiserver-db-data" Created 0.0s
⠿ Container openclarity-orchestrator-1 Healthy 69.7s
⠿ Container openclarity-yara-rule-server-1 Healthy 17.6s
⠿ Container openclarity-exploit-db-server-1 Healthy 17.7s
⠿ Container openclarity-swagger-ui-1 Healthy 7.8s
⠿ Container openclarity-trivy-server-1 Healthy 26.7s
⠿ Container openclarity-uibackend-1 Healthy 17.6s
⠿ Container openclarity-ui-1 Healthy 7.7s
⠿ Container openclarity-freshclam-mirror-1 Healthy 7.8s
⠿ Container openclarity-grype-server-1 Healthy 37.3s
⠿ Container openclarity-gateway-1 Healthy 7.7s
⠿ Container openclarity-apiserver-1 Healthy 17.7s

Note that the image_override.env file lets you use images that you build yourself. You can override parameters in the docker-compose.yml file by passing a custom env file to the docker compose up command with the --env-file flag. The /installation/docker/image_override.env file contains an example that overrides all the container images.
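To check the container health from a script instead of reading the compose output, you could capture `docker compose --project-name openclarity ps --format json` and inspect it. The following Python sketch assumes the `Name`, `State` and `Health` fields and handles both output shapes that Compose versions produce (a JSON array, or one JSON object per line); treat those details as assumptions and compare them against your Compose version.

```python
import json


def parse_compose_ps(output: str) -> list[dict]:
    """Parse `docker compose ps --format json` output (a JSON array or one object per line)."""
    text = output.strip()
    if not text:
        return []
    if text.startswith("["):
        return json.loads(text)
    return [json.loads(line) for line in text.splitlines() if line.strip()]


def unhealthy_containers(output: str) -> list[str]:
    """Return names of containers that are not running or whose health check is not 'healthy'."""
    bad = []
    for container in parse_compose_ps(output):
        state = container.get("State", "")
        health = container.get("Health", "")
        # Containers without a health check report an empty Health field.
        if state != "running" or health not in ("", "healthy"):
            bad.append(container.get("Name", "?"))
    return bad
```

An empty list means every container reported in the output is running and, where a health check exists, healthy.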

- Check the running containers in Docker Desktop.

- Access the OpenClarity UI. Navigate to http://localhost:8080/ in your browser.

Next steps

Complete the First Tasks on the UI.

Clean up steps

- After you’ve finished your tasks, stop the running containers.

docker compose --project-name openclarity --file docker-compose.yml down --remove-orphans

1.4 - Deploy on GCP

Prerequisites

- You install OpenClarity using the gcloud CLI, so gcloud must be available on your computer beforehand. For details on installing and configuring gcloud, see the official installation guide.

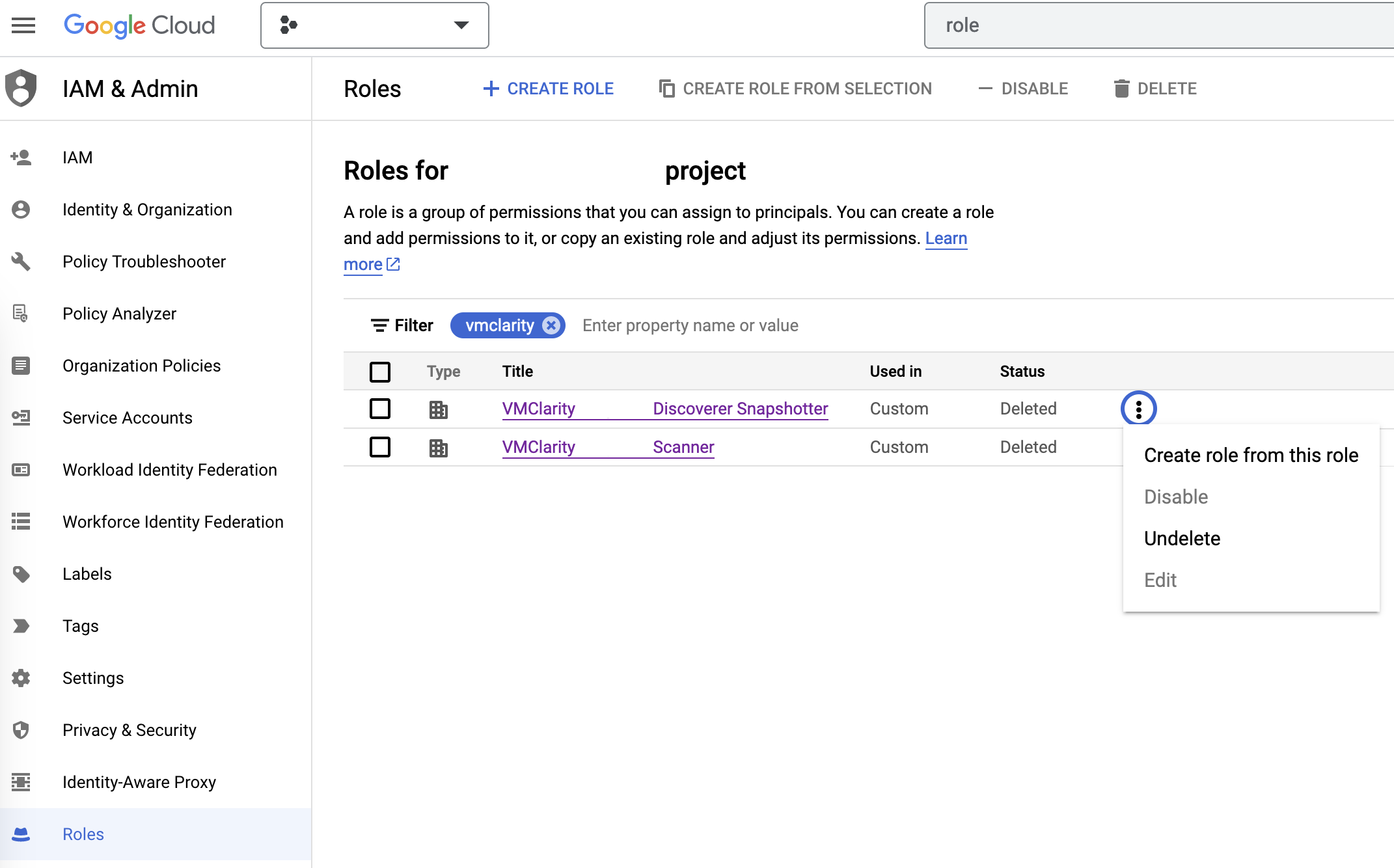

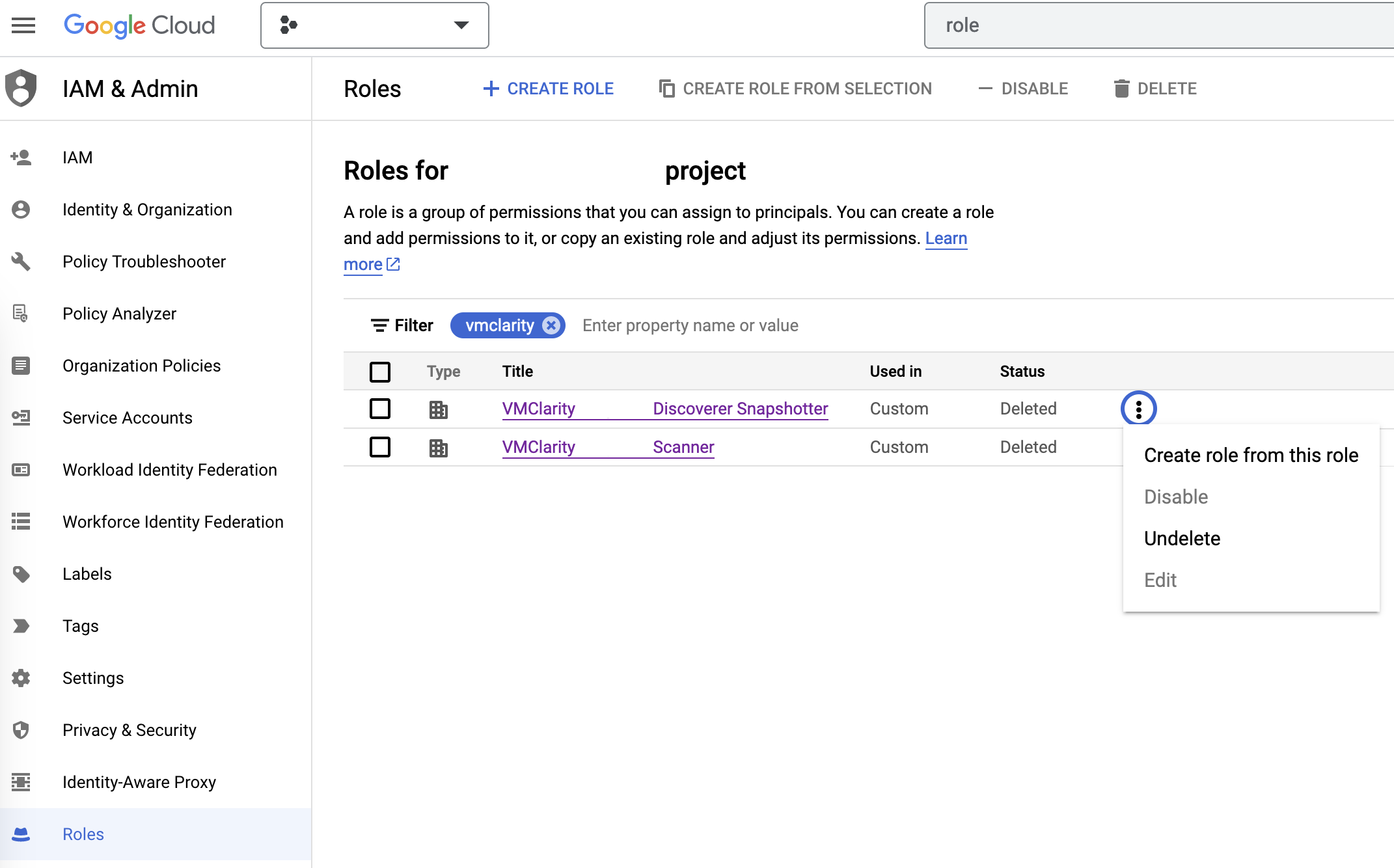

- If you have installed OpenClarity before and want to reinstall it, you must manually restore the deleted roles that were created during the previous installation.

Deployment steps

To install OpenClarity on Google Cloud Platform (GCP), complete the following steps.

- Download the latest GCP deployment release from GitHub.

wget https://github.com/openclarity/openclarity/releases/download/v1.1.2/gcp-deployment-v1.1.2.tar.gz

- Create a new directory, extract the files, and navigate to the directory.

mkdir gcp-deployment-v1.1.2

tar -xvzf gcp-deployment-v1.1.2.tar.gz -C gcp-deployment-v1.1.2

cd gcp-deployment-v1.1.2

- Copy the example configuration file and rename it.

cp openclarity-config.example.yaml openclarity-config.yaml

- The following table contains all the fields that can be set in the openclarity-config.yaml file. You must set at least the required ones.

| Field | Required | Default | Description |
| --- | --- | --- | --- |
| zone | yes | | The zone in which to locate the OpenClarity server. |
| machineType | yes | | The machine type for the OpenClarity server. |
| region | yes | | The region in which to locate OpenClarity. |
| scannerMachineType | | e2-standard-2 | Machine type to use for the Scanner instances. |
| scannerSourceImage | | projects/ubuntu-os-cloud/global/images/ubuntu-2204-jammy-v20230630 | Source image to use for the Scanner instances. |
| databaseToUse | | SQLite | The database that OpenClarity should use. |
| apiserverContainerImage | | ghcr.io/openclarity/openclarity-apiserver:1.1.2 | The container image to use for the apiserver. |
| orchestratorContainerImage | | ghcr.io/openclarity/openclarity-orchestrator:1.1.2 | The container image to use for the orchestrator. |
| uiContainerImage | | ghcr.io/openclarity/openclarity-ui:1.1.2 | The container image to use for the UI. |
| uibackendContainerImage | | ghcr.io/openclarity/openclarity-ui-backend:1.1.2 | The container image to use for the UI backend. |
| scannerContainerImage | | ghcr.io/openclarity/openclarity-cli:1.1.2 | The container image to use for the scanner. |
| exploitDBServerContainerImage | | ghcr.io/openclarity/exploit-db-server:v0.2.4 | The container image to use for the exploit DB server. |
| trivyServerContainerImage | | docker.io/aquasec/trivy:0.41.0 | The container image to use for the Trivy server. |
| grypeServerContainerImage | | ghcr.io/openclarity/grype-server:v0.7.0 | The container image to use for the Grype server. |
| freshclamMirrorContainerImage | | ghcr.io/openclarity/freshclam-mirror:v0.2.0 | The container image to use for the freshclam mirror server. |
| postgresqlContainerImage | | docker.io/bitnami/postgresql:12.14.0-debian-11-r28 | The container image to use for the PostgreSQL server. |
| assetScanDeletePolicy | | Always | When asset scans should be cleaned up after scanning. |
| postgresDBPassword | | | Postgres DB password. Only required if databaseToUse is Postgresql. |
| externalDBName | | | DB to use in the external DB. Only required if databaseToUse is External. |
| externalDBUsername | | | Username for the external DB. Only required if databaseToUse is External. |
| externalDBPassword | | | Password for the external DB. Only required if databaseToUse is External. |
| externalDBHost | | | Hostname or IP for the external DB. Only required if databaseToUse is External. |
| externalDBPort | | | Port for the external DB. Only required if databaseToUse is External. |
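Before running the deployment, you may want to check the required and conditional fields from the table above. The following is a minimal Python sketch that assumes the fields have already been loaded into a dict (for example with PyYAML); the exact layout of openclarity-config.yaml and the exact spelling of the databaseToUse values are assumptions, so the database name is compared case-insensitively. `validate_openclarity_config` is a hypothetical helper.

```python
REQUIRED_FIELDS = ("zone", "machineType", "region")
EXTERNAL_DB_FIELDS = (
    "externalDBName",
    "externalDBUsername",
    "externalDBPassword",
    "externalDBHost",
    "externalDBPort",
)


def validate_openclarity_config(props: dict) -> list[str]:
    """Return human-readable problems found in the config fields; an empty list means none were found."""
    problems = [f"missing required field: {name}" for name in REQUIRED_FIELDS if not props.get(name)]
    # databaseToUse defaults to SQLite, which needs no extra fields.
    db = str(props.get("databaseToUse", "SQLite")).strip().lower()
    if db == "postgresql" and not props.get("postgresDBPassword"):
        problems.append("postgresDBPassword is required when databaseToUse is PostgreSQL")
    if db.startswith("external"):
        problems += [
            f"{name} is required when databaseToUse is External"
            for name in EXTERNAL_DB_FIELDS
            if not props.get(name)
        ]
    return problems
```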

- Deploy OpenClarity using gcloud deployment-manager.

gcloud deployment-manager deployments create <openclarity deployment name> --config openclarity-config.yaml

- Open an SSH tunnel to the OpenClarity server with gcloud. For more information on how to create an SSH connection to one of your instances with gcloud, see the official page.

gcloud compute ssh --project=<project id> --zone=<zone name> <name of your VM> -- -NL 8080:localhost:80

- Access the OpenClarity UI.

- Open the OpenClarity UI in your browser at http://localhost:8080/. The dashboard opens.

- (Optional) If needed, you can access the API at http://localhost:8080/api. For details on the API, see the API reference.

Next steps

Complete the First Tasks on the UI.

Uninstall OpenClarity

- You can uninstall OpenClarity using gcloud deployment-manager.

gcloud deployment-manager deployments delete <openclarity deployment name>

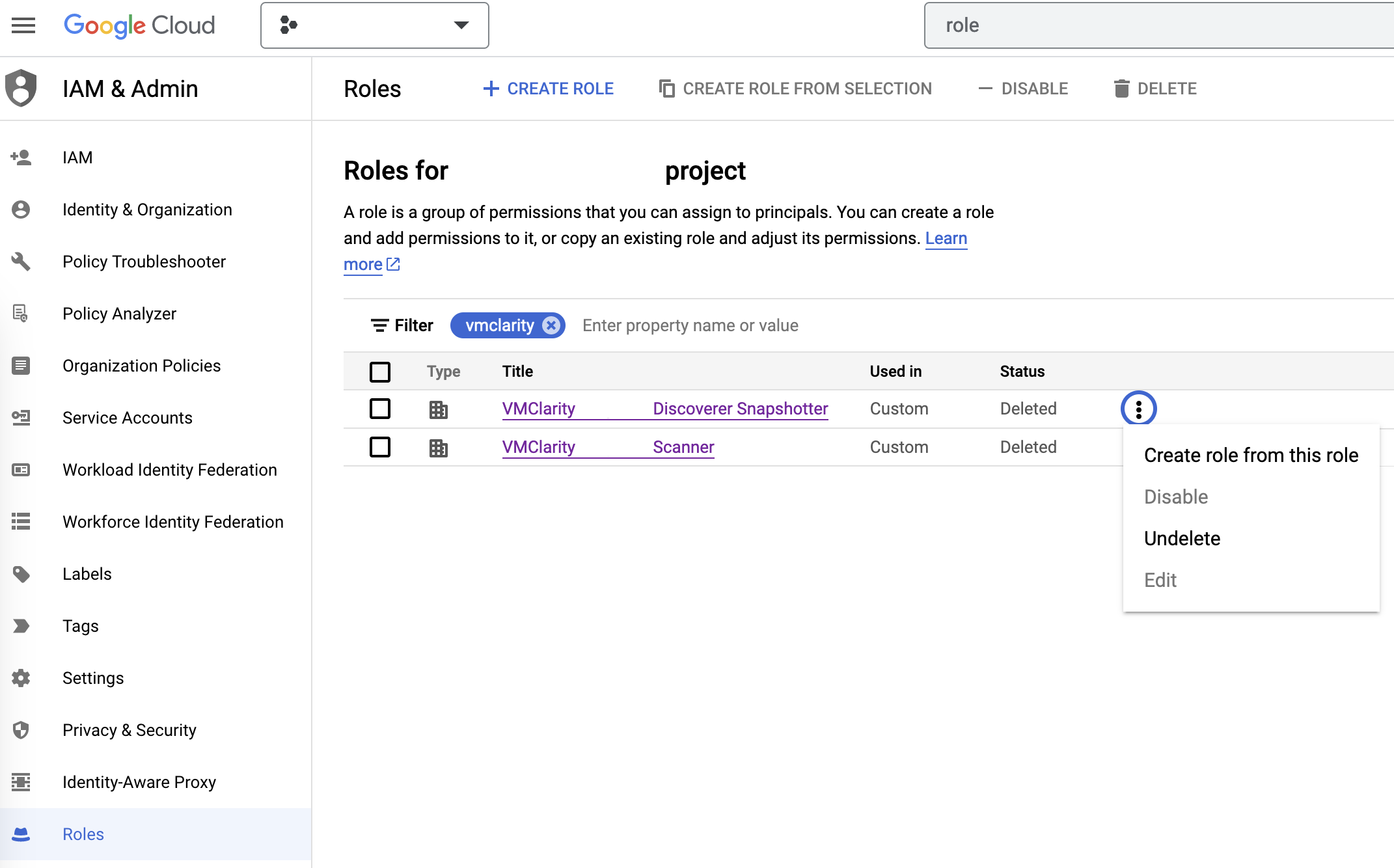

Restore deleted roles

- On the IAM & Admin page on GCP, open the Roles tab, then search for OpenClarity in the filter input.

- Manually undelete the Discoverer Snapshotter and Scanner roles to change their status from Deleted to Enabled.

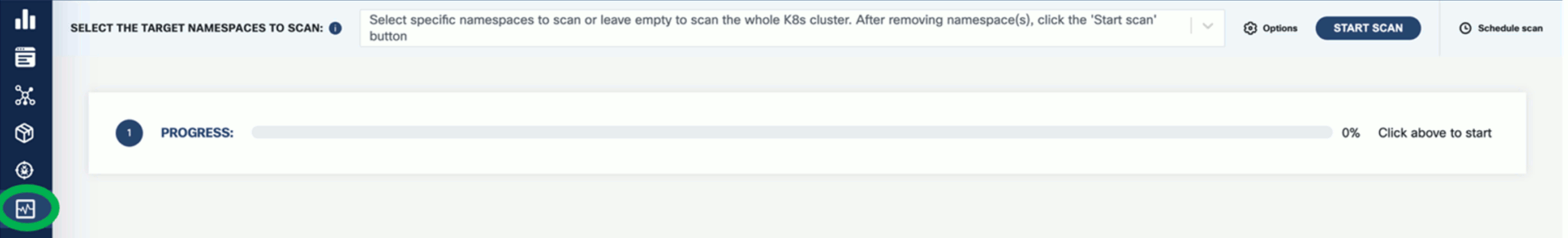

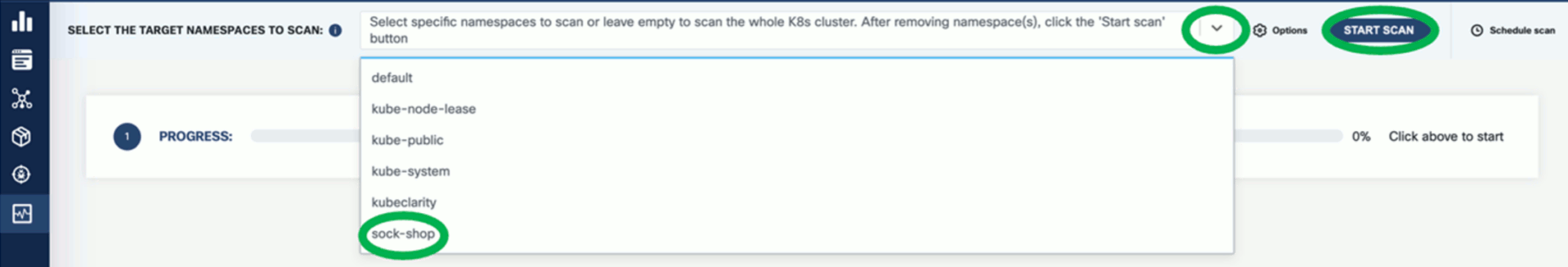

1.5 - Deploy on Kubernetes

Prerequisites

- Install a tool to run local Kubernetes clusters. Here, Kind is used as the default option for creating a local cluster.

- Helm to install OpenClarity.

Deployment steps

To deploy OpenClarity to your Kubernetes cluster, complete the following steps.

- Create a Kubernetes cluster.

kind create cluster --name openclarity-k8s

- Ensure the Kubernetes cluster is up and running. If you’re using kind, you can check the status of your clusters with the following command:

kind get clusters

- Use Helm to install OpenClarity. Run the following command:

helm install openclarity oci://ghcr.io/openclarity/charts/openclarity --version 1.1.2 \
  --namespace openclarity --create-namespace \
  --set orchestrator.provider=kubernetes \
  --set orchestrator.serviceAccount.automountServiceAccountToken=true

- Verify that all the OpenClarity pods have been successfully deployed by executing the following command:

kubectl get pods -n openclarity

- Wait until all pods are in the Running state or have completed their initialization.
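To automate this check, you could capture `kubectl get pods -n openclarity -o json` and look at each pod's phase and container readiness. A minimal Python sketch that reads the standard Pod fields (`metadata.name`, `status.phase`, `status.containerStatuses[].ready`); `pods_not_ready` is a hypothetical helper.

```python
import json


def pods_not_ready(kubectl_json: str) -> list[str]:
    """List pods that are neither Succeeded nor Running with every container ready."""
    not_ready = []
    for pod in json.loads(kubectl_json).get("items", []):
        name = pod["metadata"]["name"]
        status = pod.get("status", {})
        phase = status.get("phase")
        if phase == "Succeeded":
            continue  # pods that completed their work are fine
        statuses = status.get("containerStatuses", [])
        if phase != "Running" or not statuses or not all(c.get("ready") for c in statuses):
            not_ready.append(name)
    return not_ready
```

An empty result means you can move on to port forwarding.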

- Once the pods are ready, start port forwarding to access the OpenClarity gateway service. Use the following command to forward traffic from your local machine to the cluster:

kubectl port-forward -n openclarity service/openclarity-gateway 8080:80

- Access the OpenClarity UI by navigating to http://localhost:8080/ in your web browser.

Next steps

Complete the First Tasks on the UI.

Clean up steps

- Uninstall OpenClarity with Helm. Run the following command:

helm uninstall openclarity --namespace openclarity

- Delete the Kubernetes cluster.

kind delete clusters openclarity-k8s

1.6 - First Tasks on the UI

-

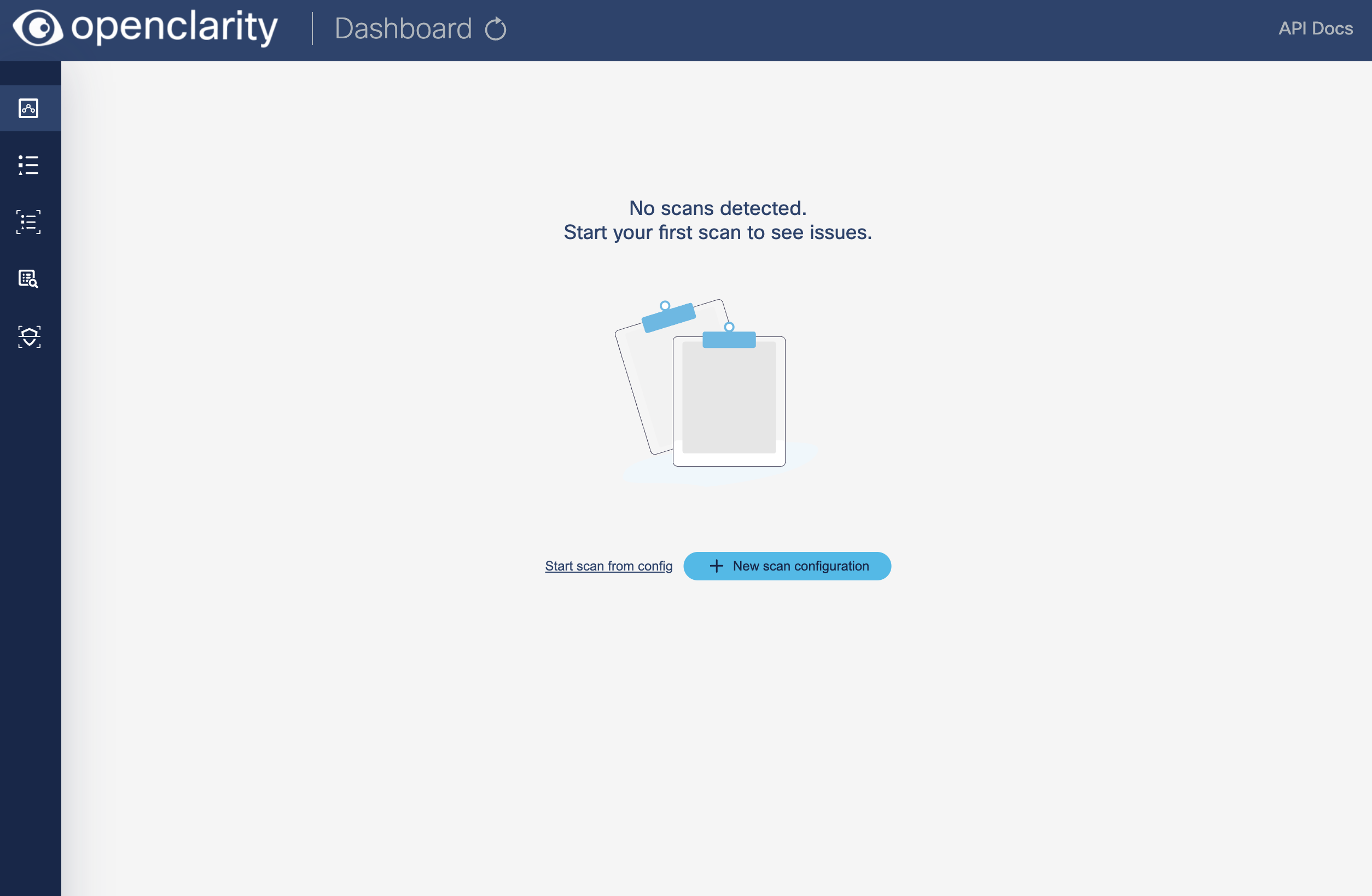

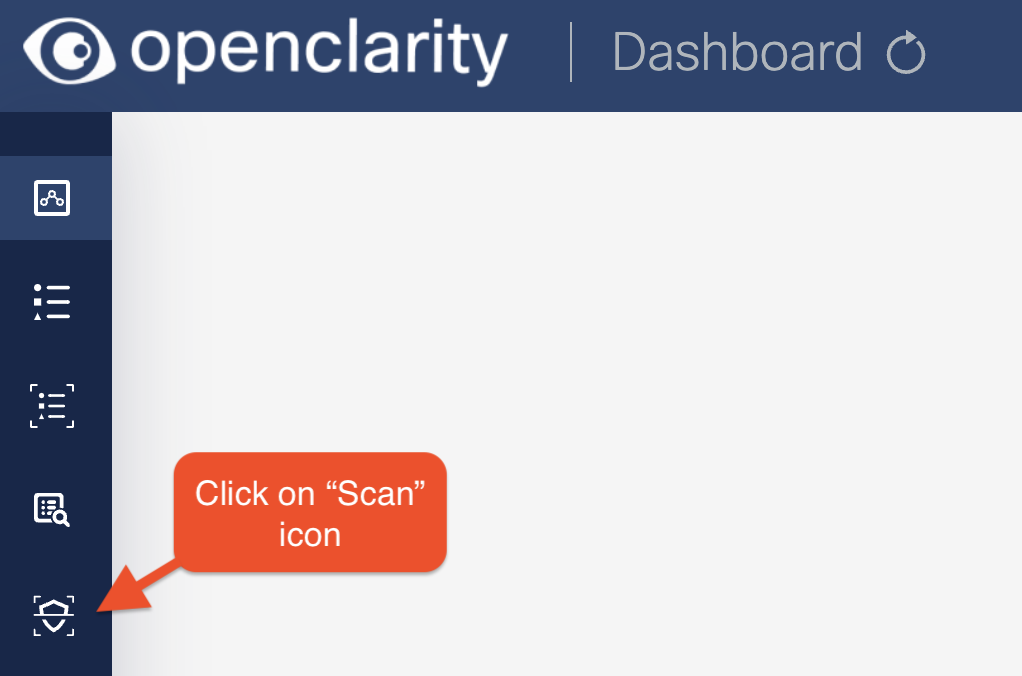

Open the UI.

-

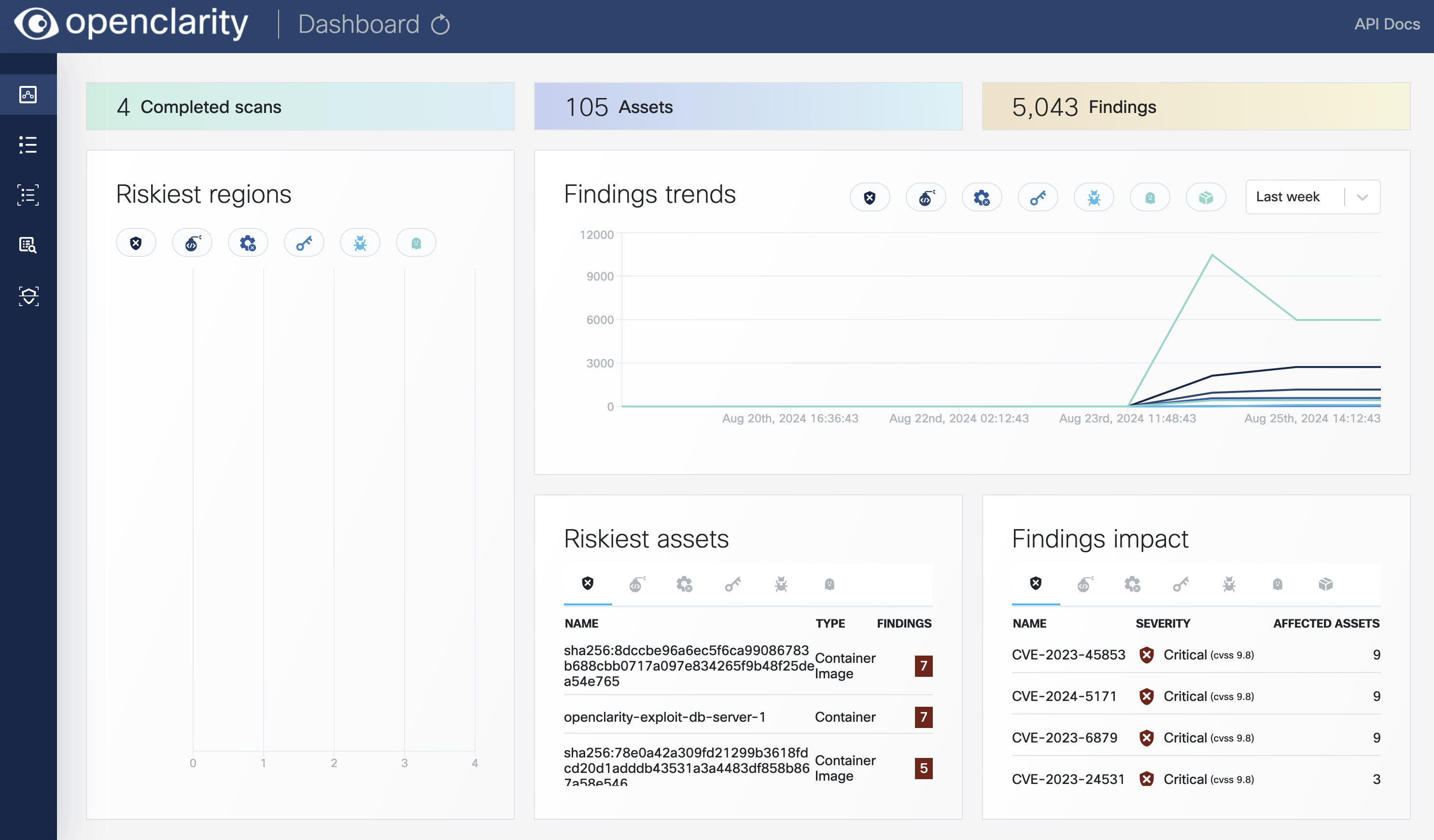

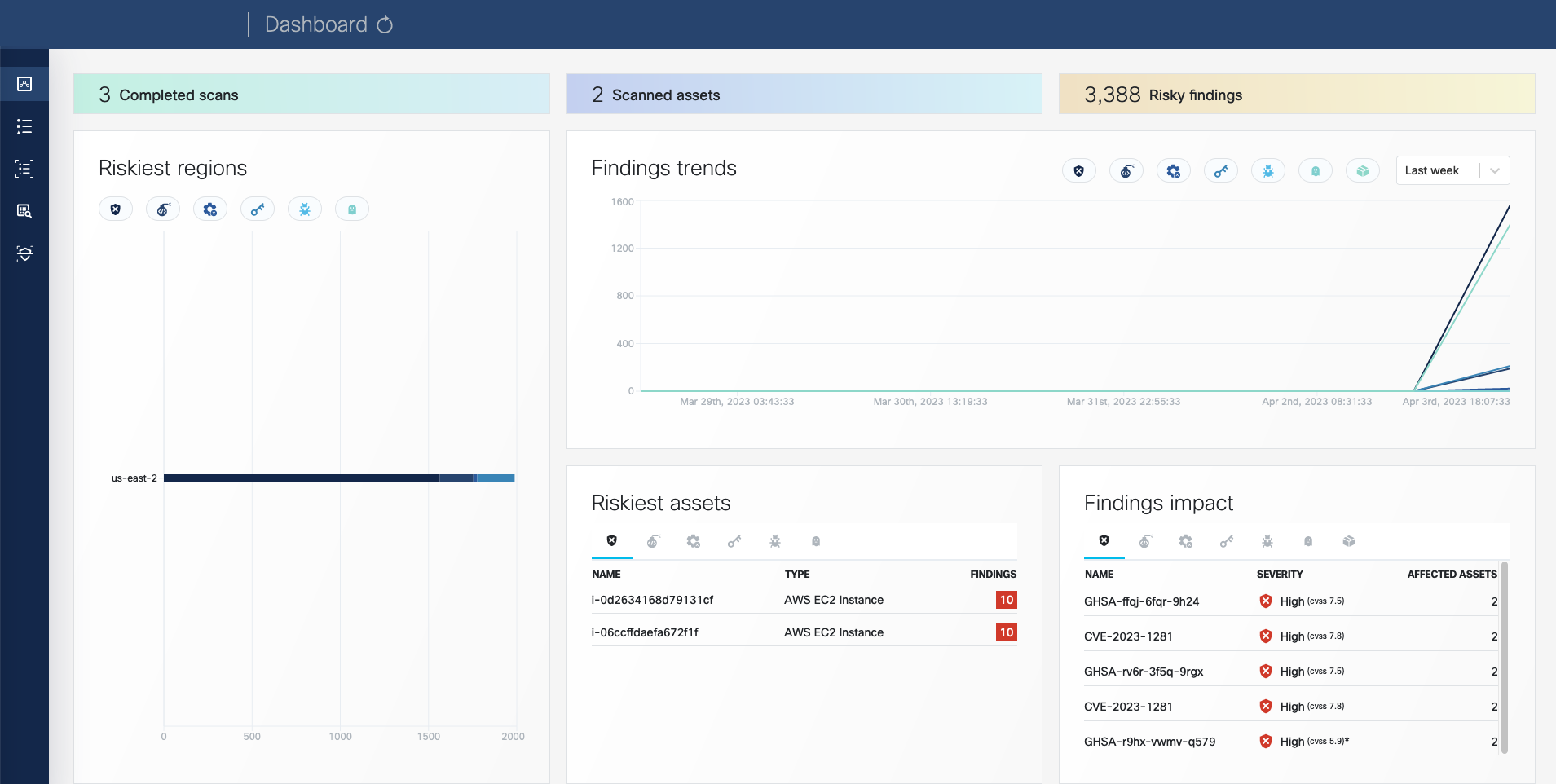

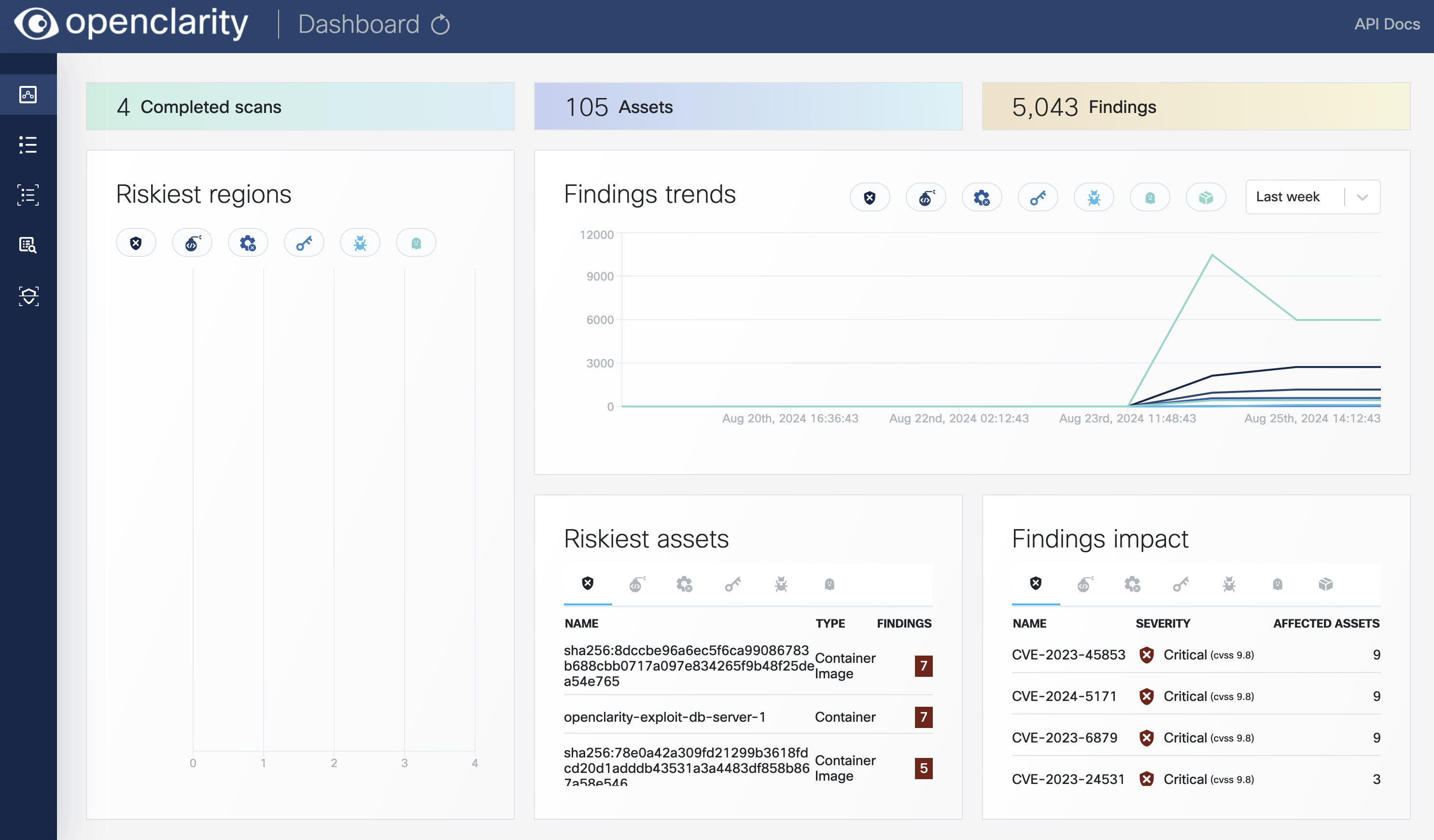

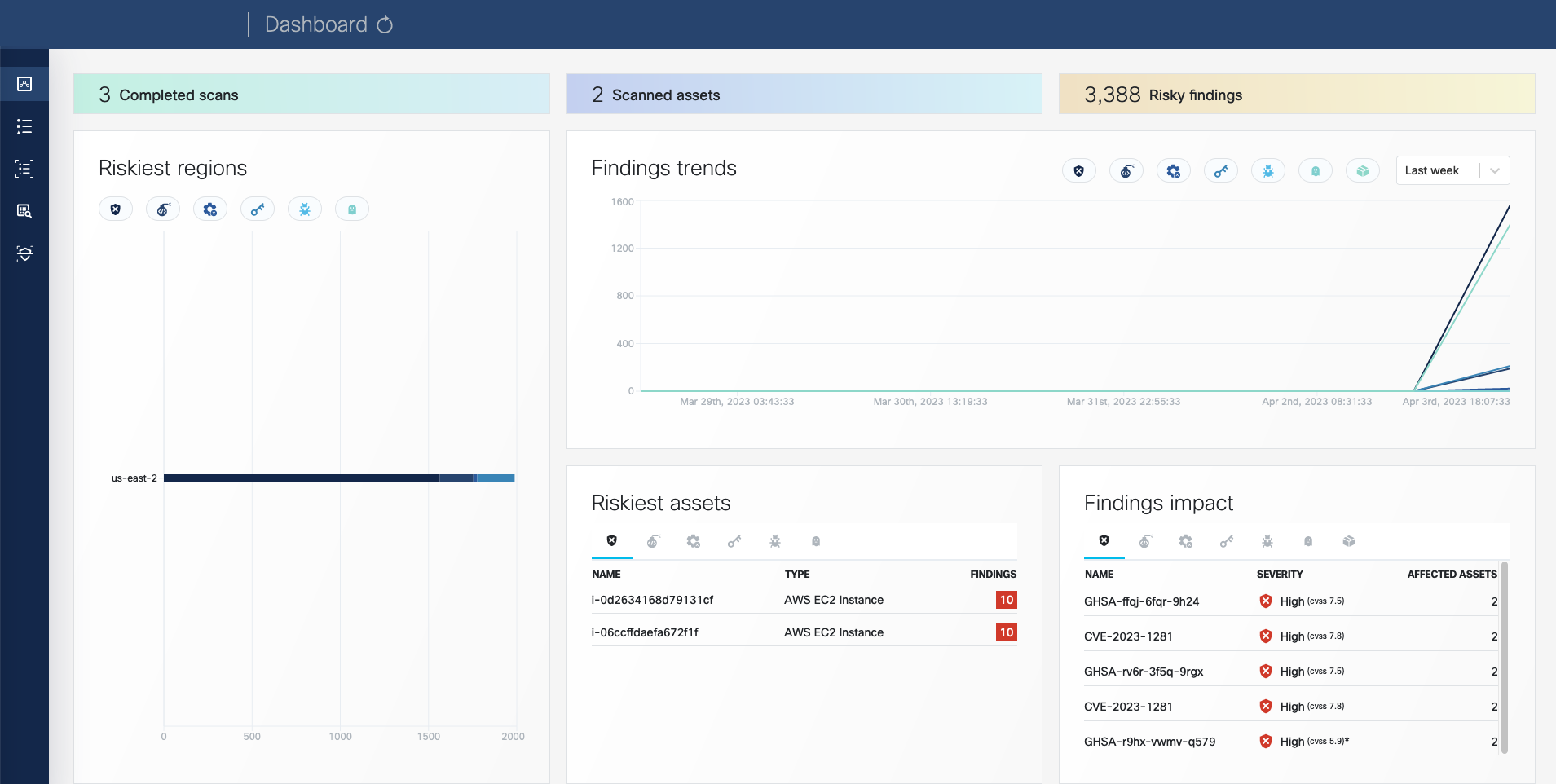

Open the OpenClarity UI in your browser at http://localhost:8080/. The dashboard opens.

-

(Optional) If needed, you can access the API at http://localhost:8080/api. For details on the API, see the API reference.

-

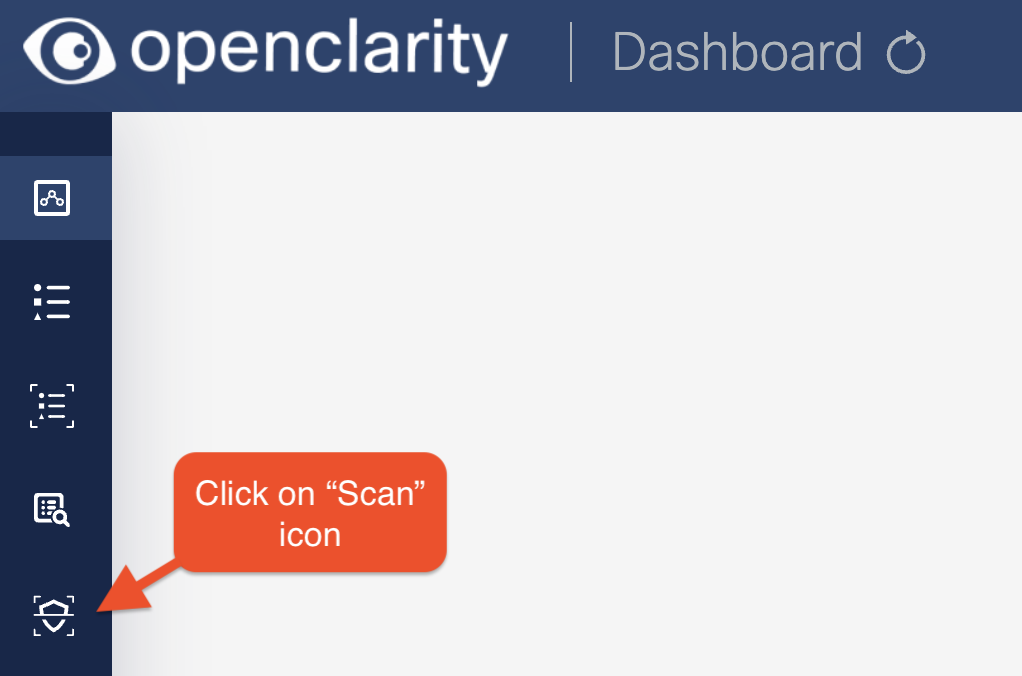

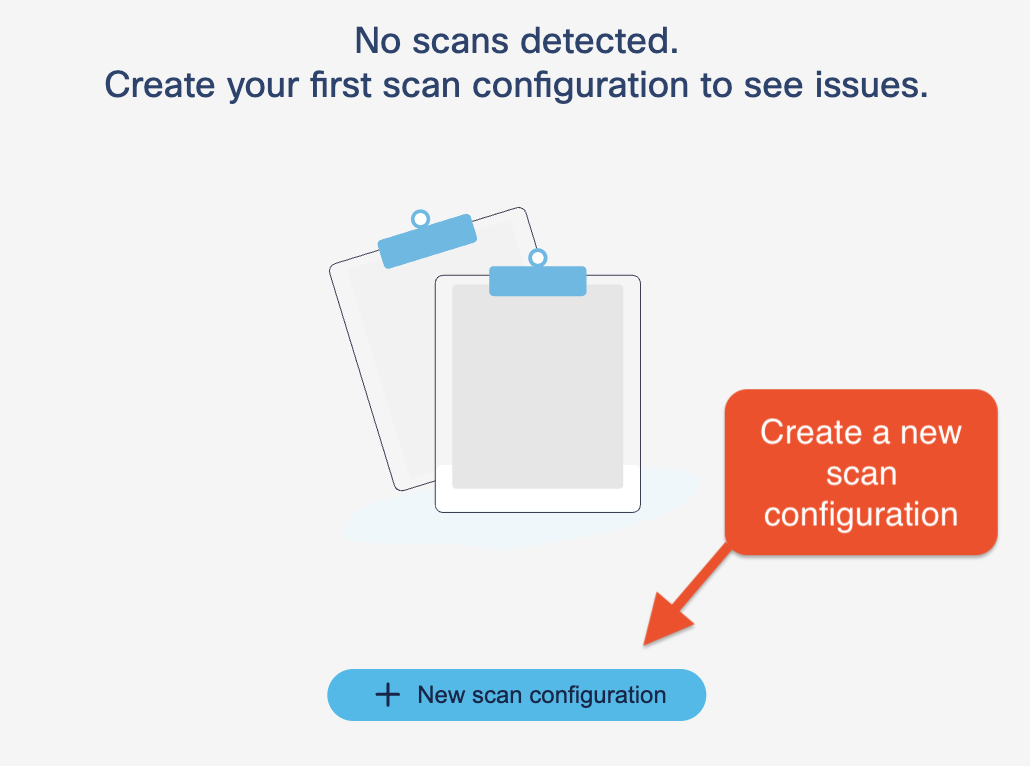

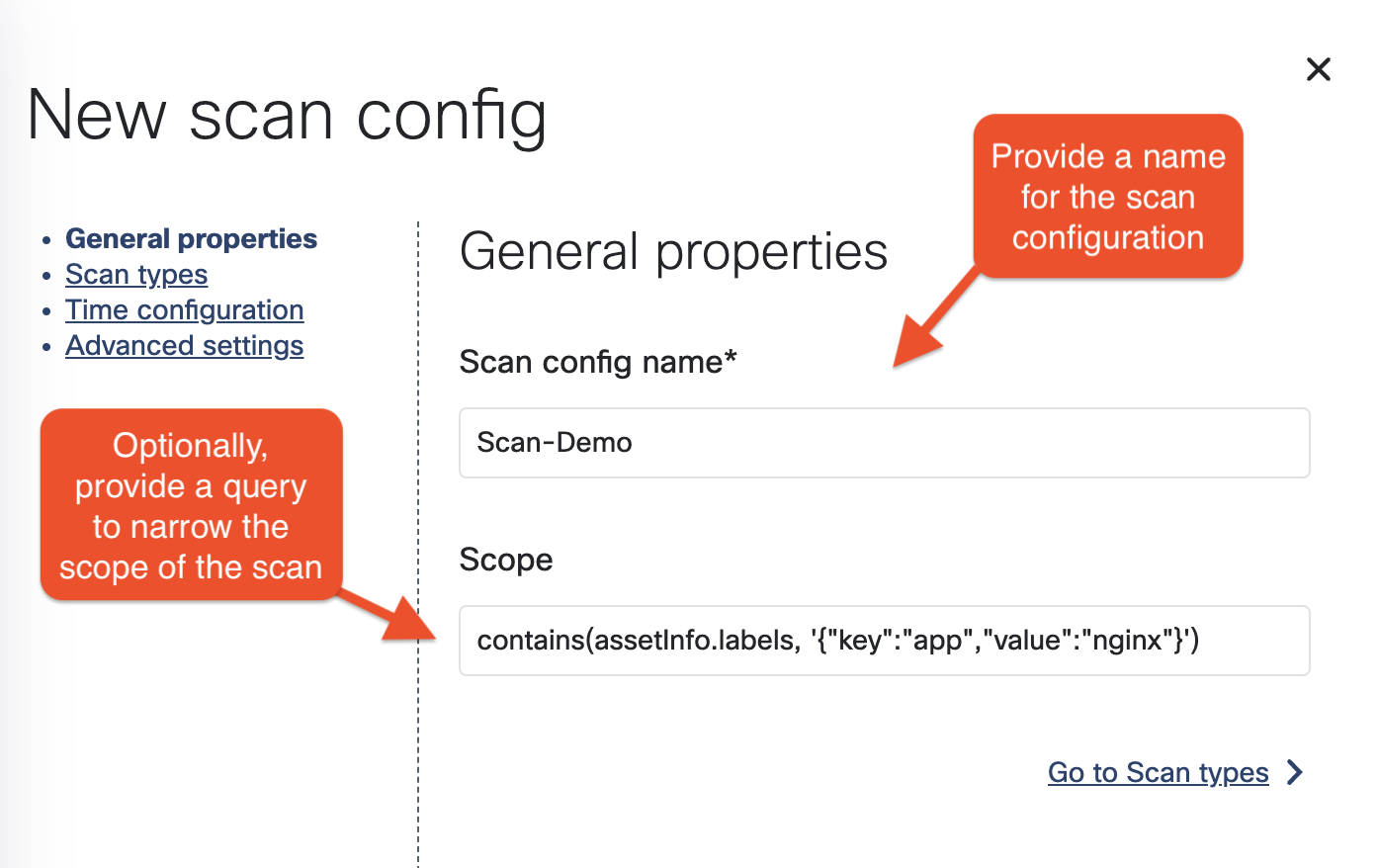

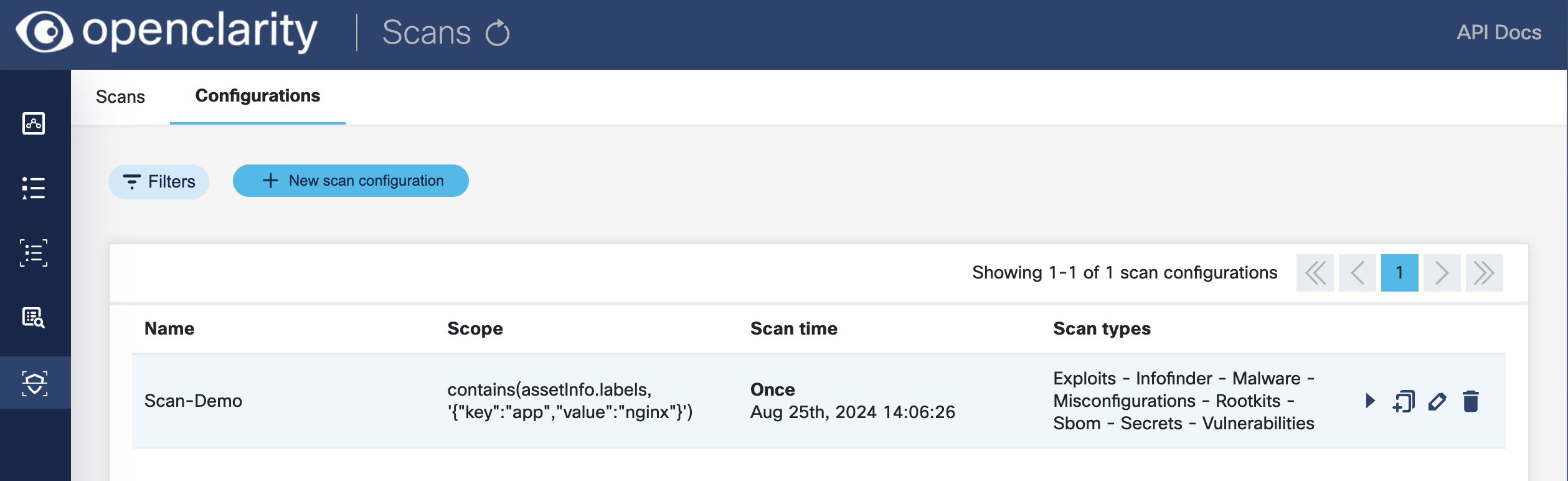

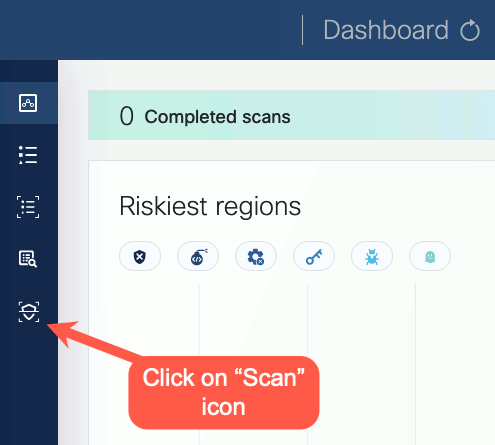

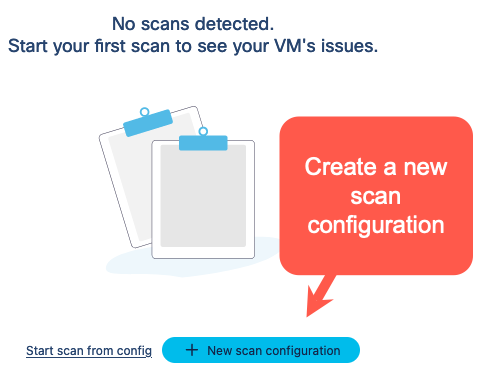

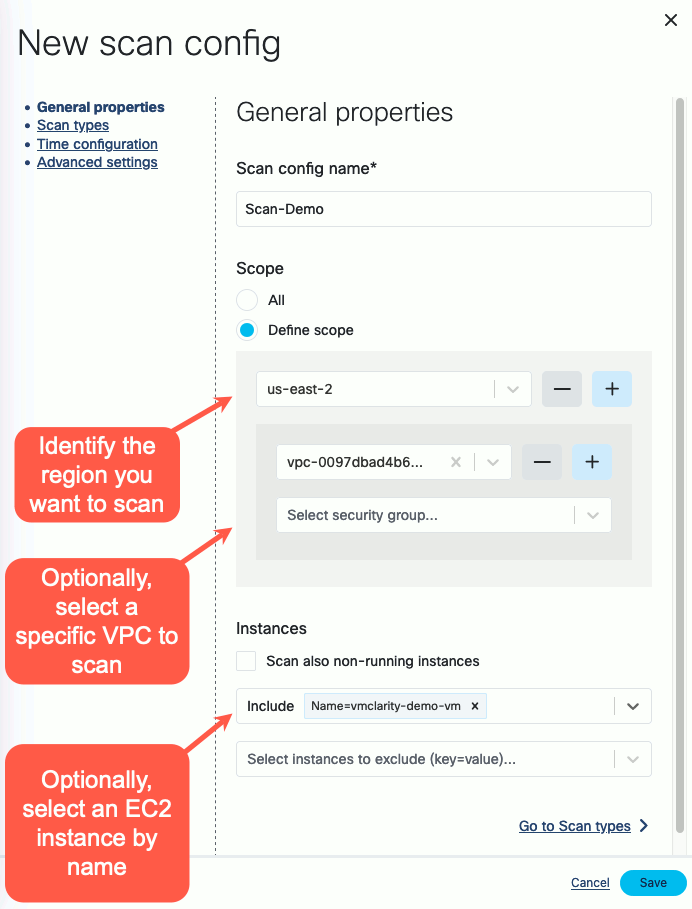

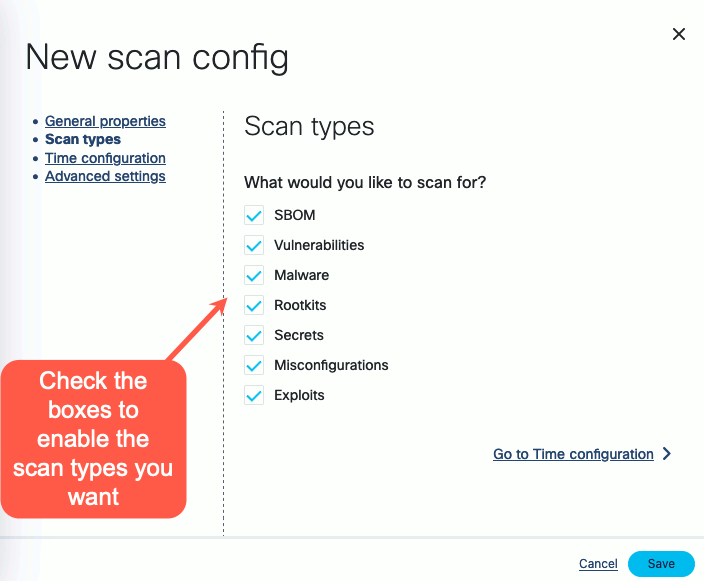

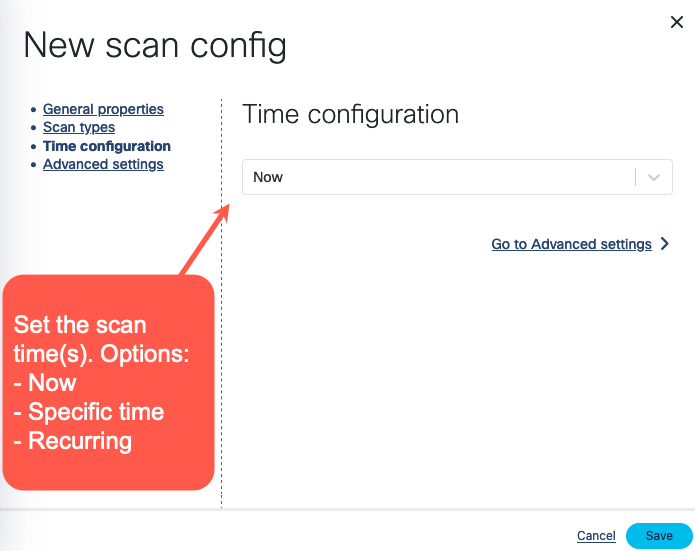

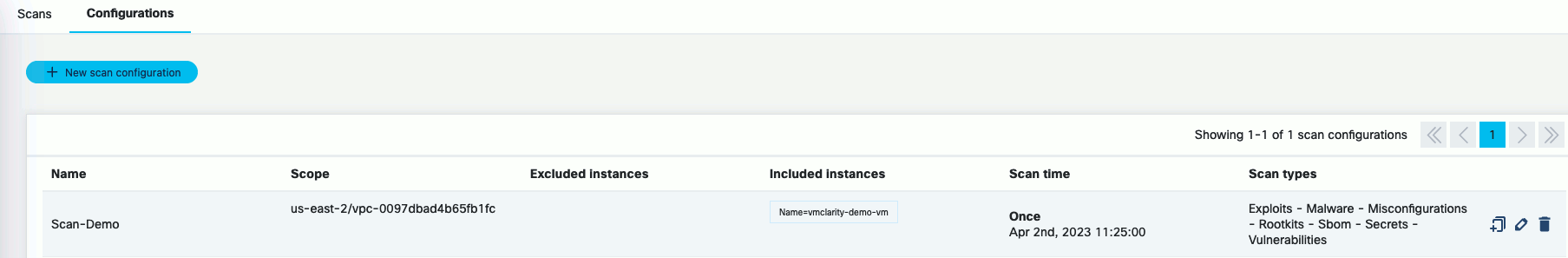

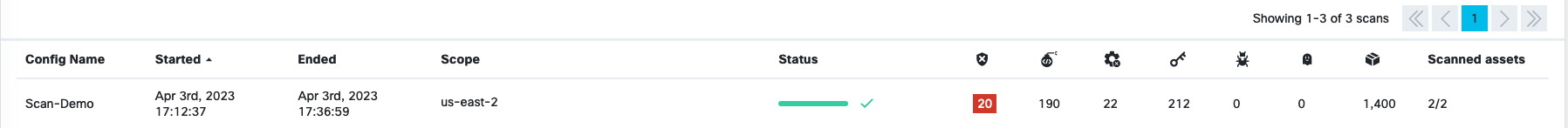

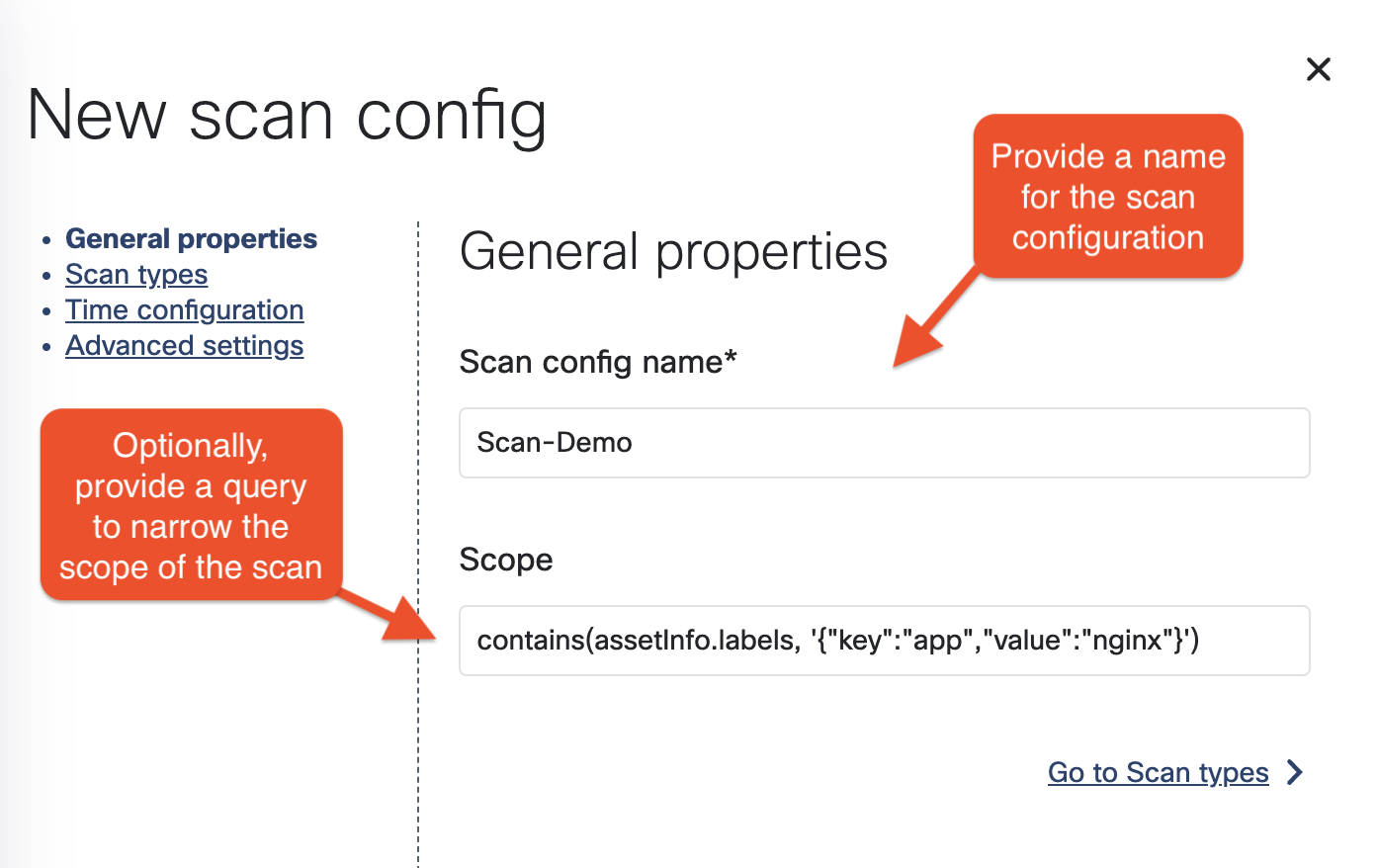

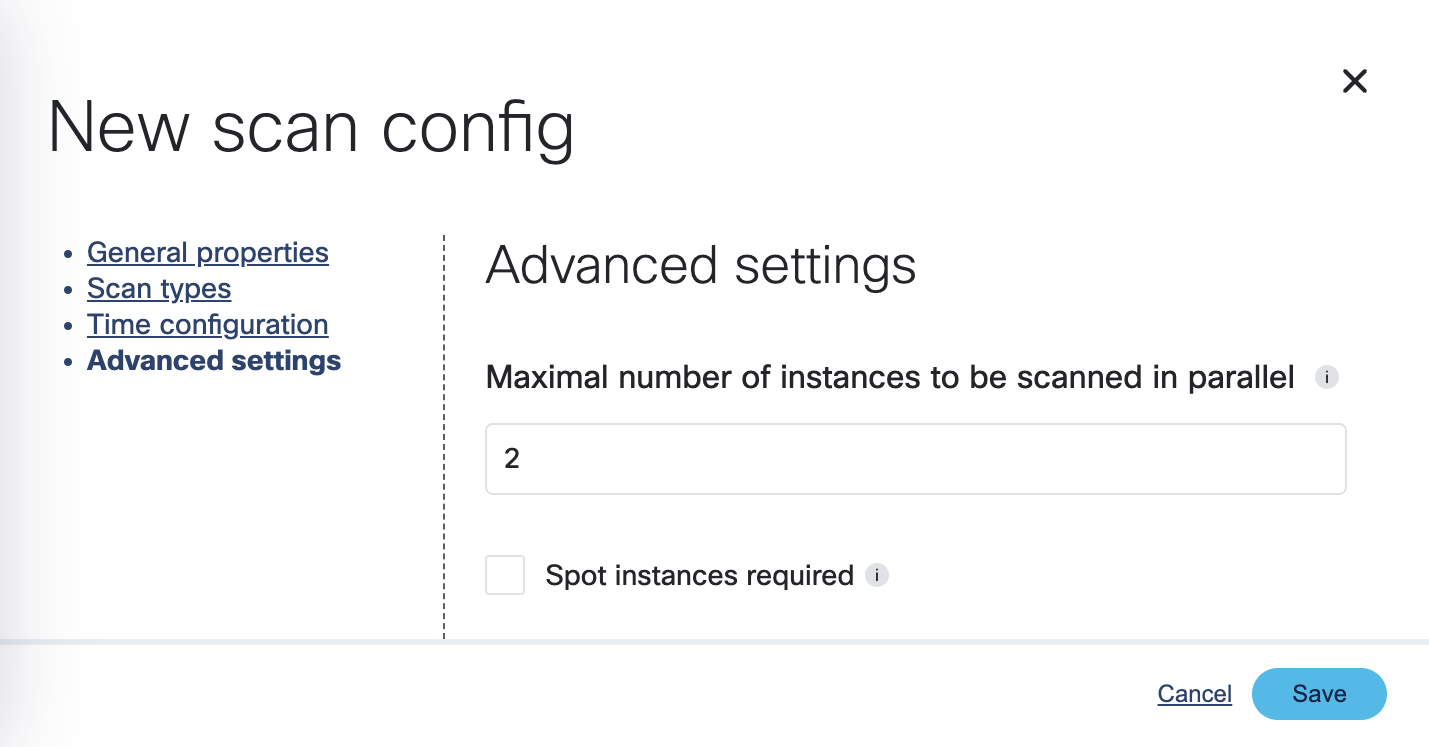

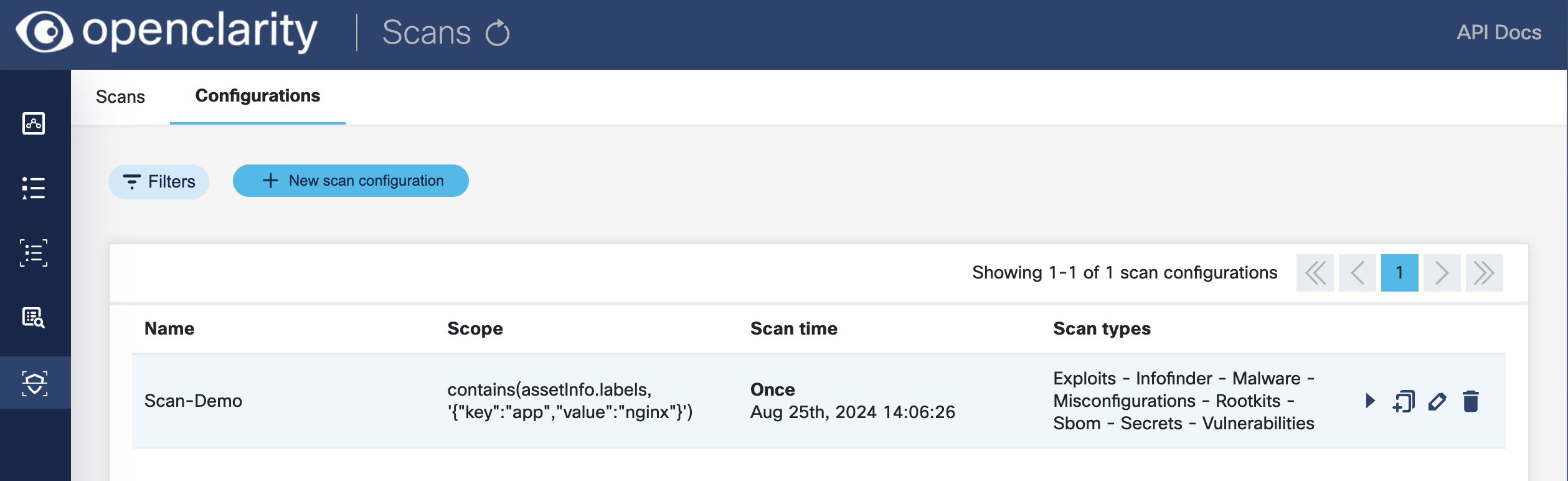

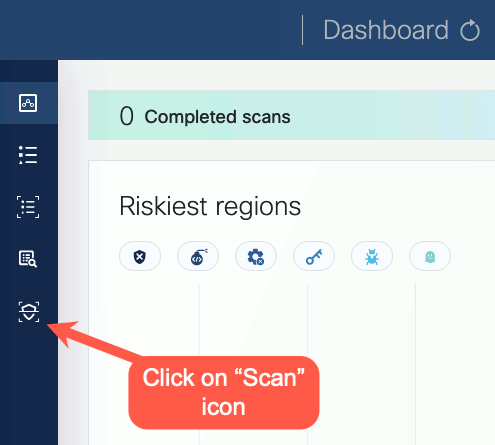

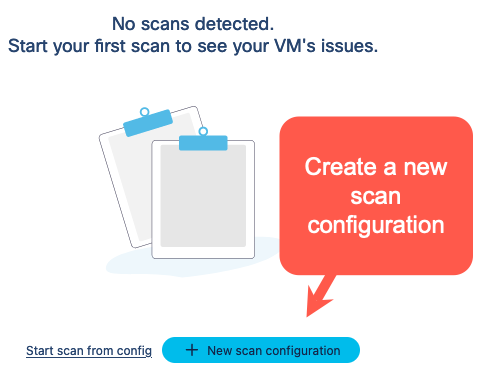

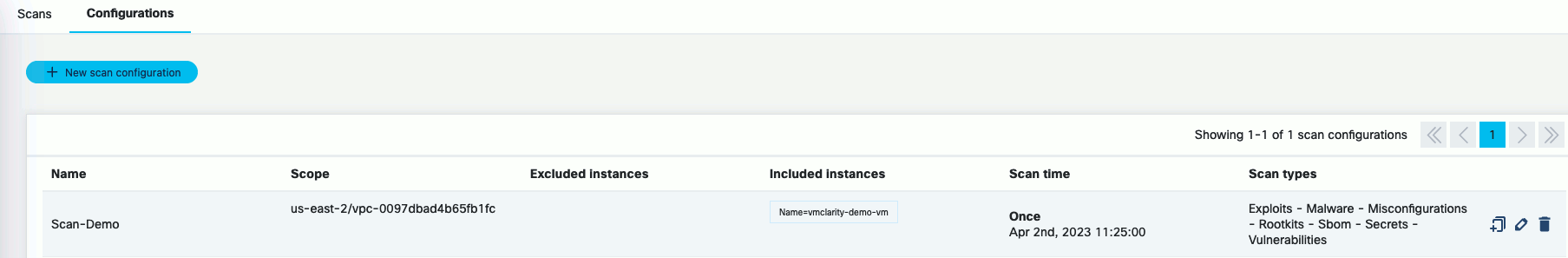

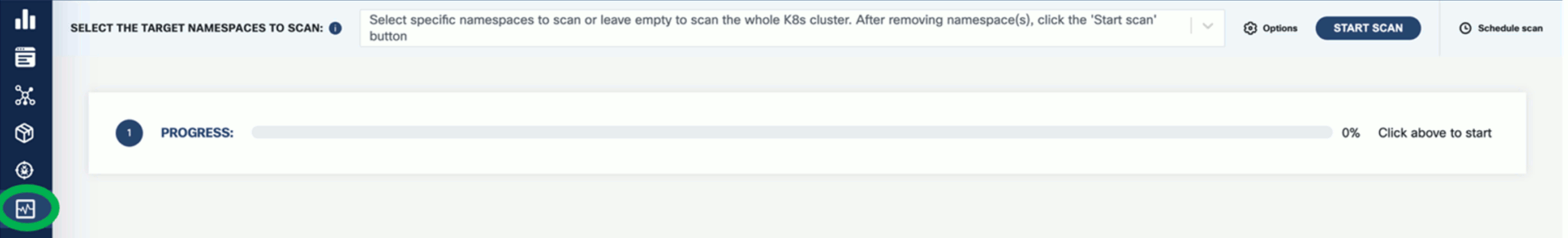

Click on the Scans icon. In the Scans window, you can create a new scan configuration.

-

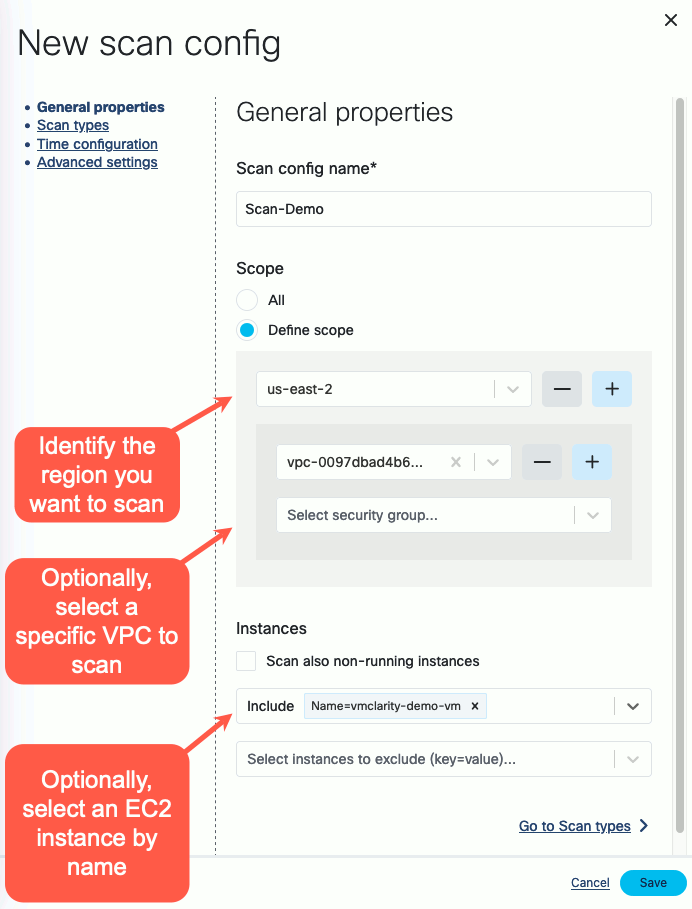

Click New scan configuration.

- Follow the steps of the New scan config wizard to name the scan and, optionally, narrow the scope down with an OData query.
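A scope query is an OData `$filter`-style expression. The sketch below builds one in Python from field/value pairs, quoting string literals the OData way (single quotes, with embedded quotes doubled). The field paths in the usage example, such as `assetInfo/objectType` and `assetInfo/location`, are hypothetical illustrations; check the asset schema in the API reference for the real property names. `odata_literal` and `build_scope_filter` are hypothetical helpers.

```python
def odata_literal(value) -> str:
    """Render a Python value as an OData literal: strings quoted, embedded single quotes doubled."""
    if isinstance(value, bool):  # check bool first, because bool is a subclass of int
        return "true" if value else "false"
    if isinstance(value, (int, float)):
        return str(value)
    return "'" + str(value).replace("'", "''") + "'"


def build_scope_filter(conditions: dict) -> str:
    """Combine `field eq value` clauses with `and` into a single filter expression."""
    return " and ".join(f"{field} eq {odata_literal(value)}" for field, value in conditions.items())
```

For example, `build_scope_filter({"assetInfo/objectType": "VMInfo", "assetInfo/location": "us-east-1"})` produces `assetInfo/objectType eq 'VMInfo' and assetInfo/location eq 'us-east-1'`.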

-

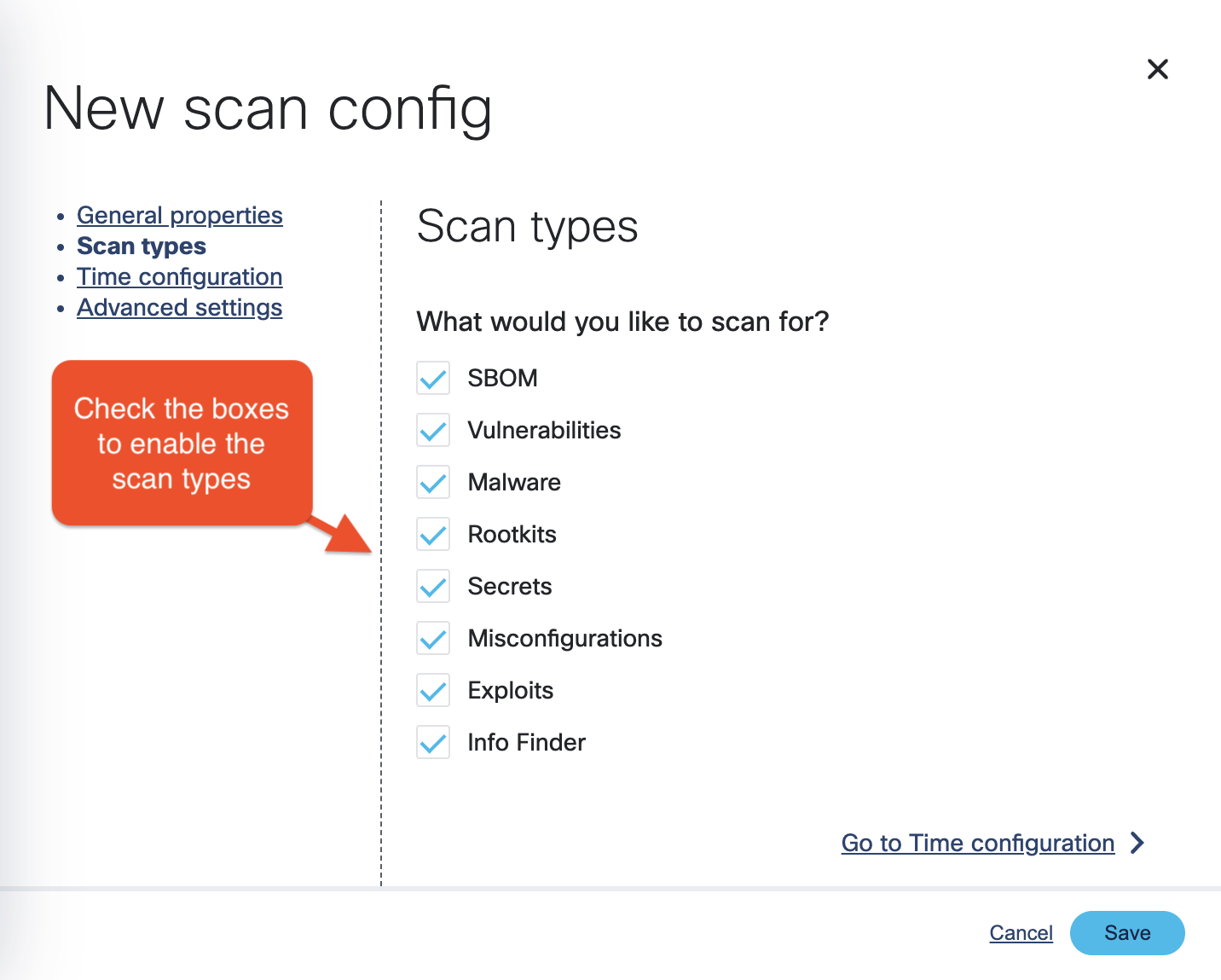

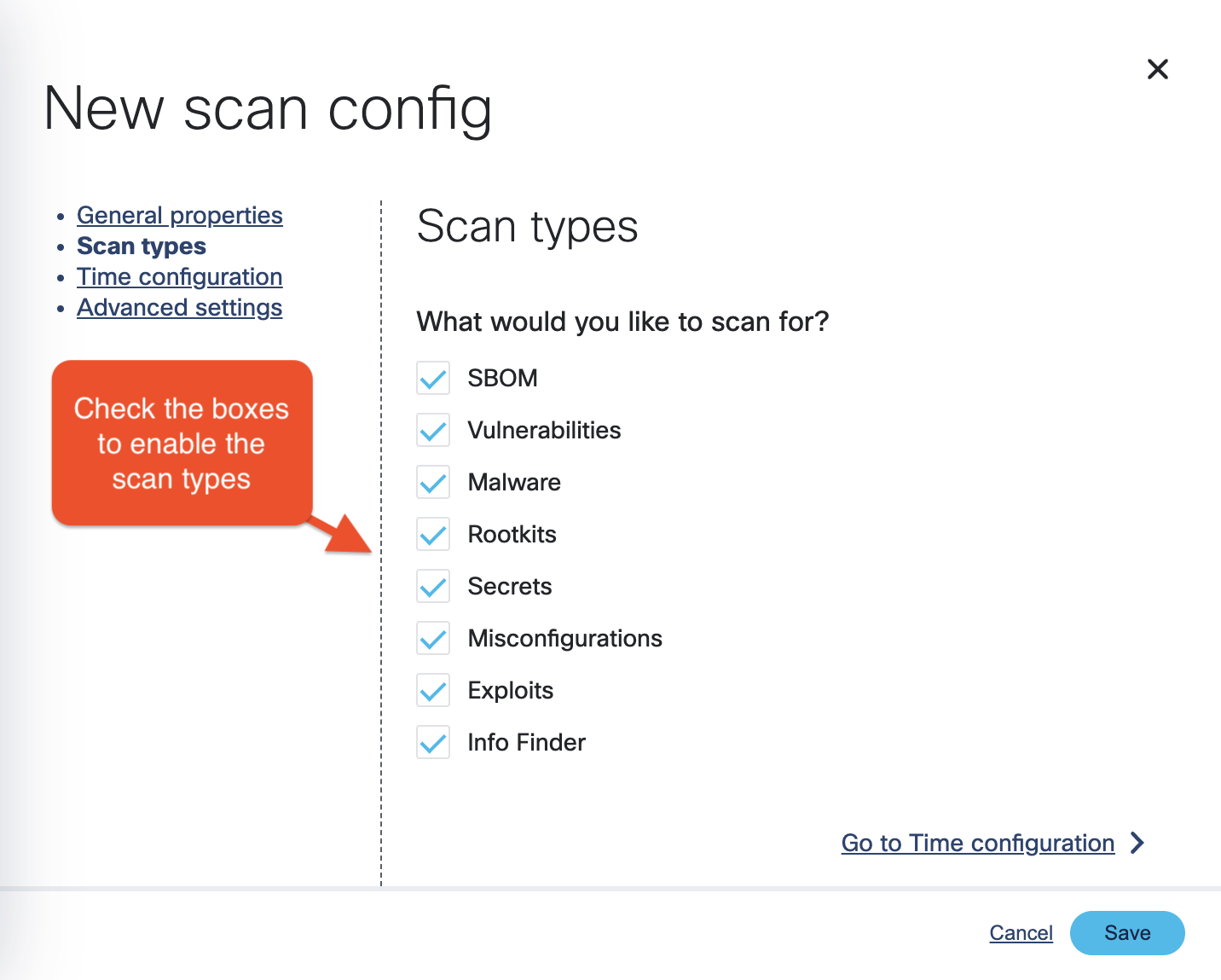

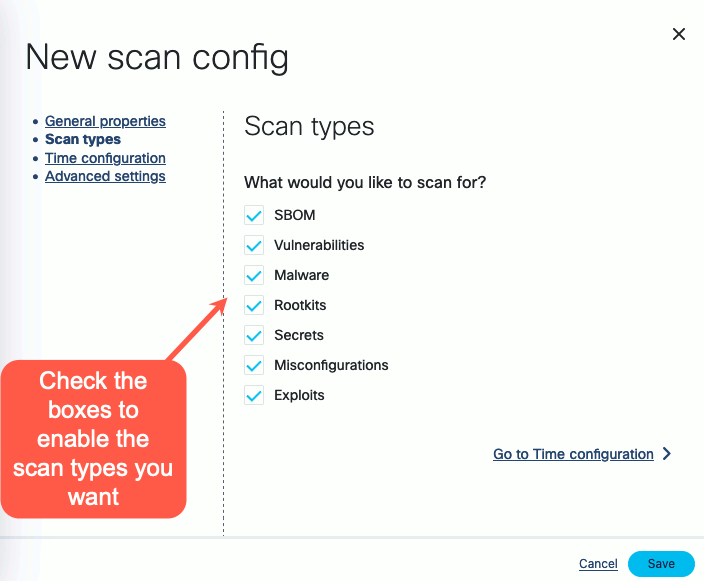

Enable the scan types you want to perform.

-

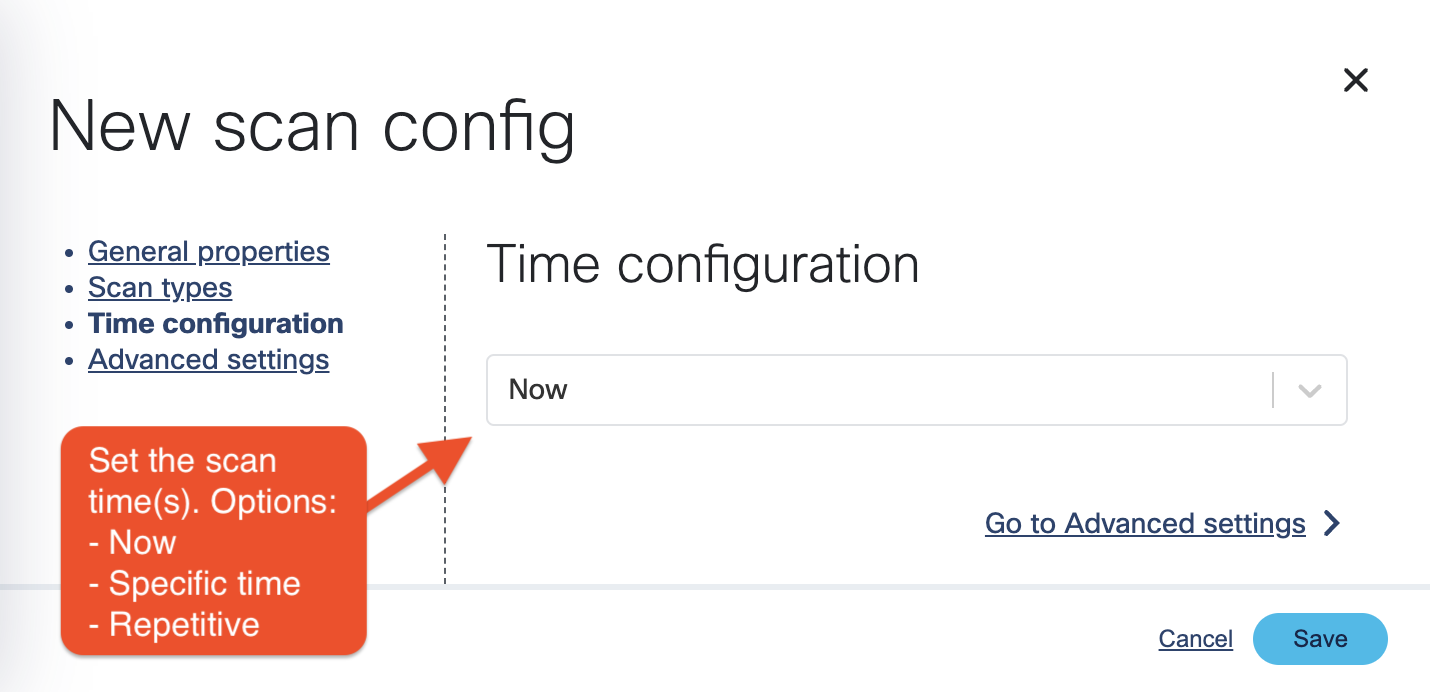

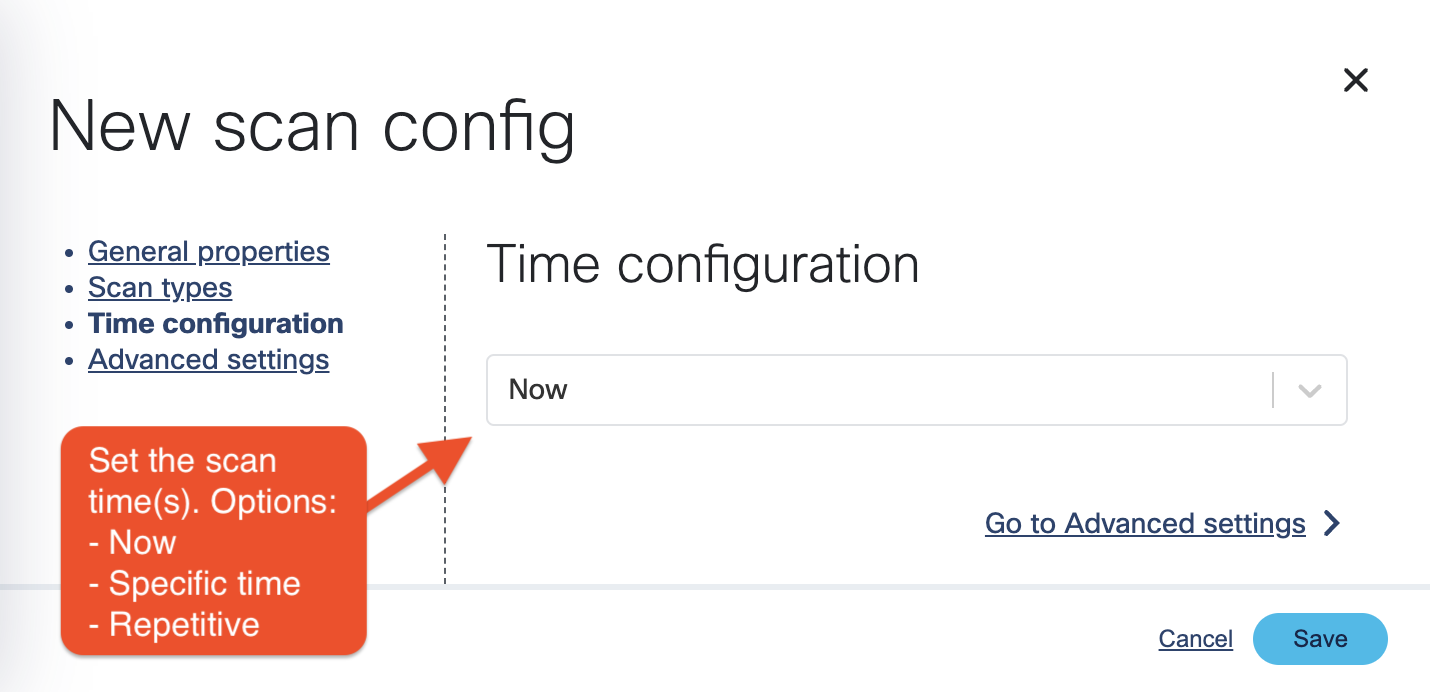

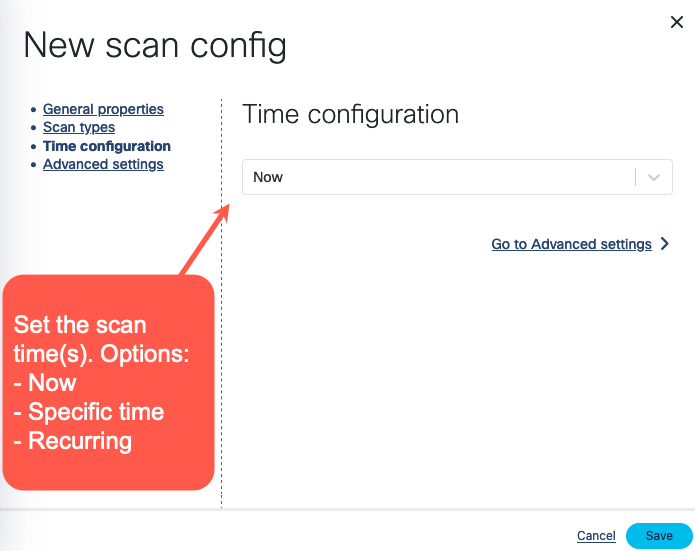

Select the time and/or frequency of the scans. To run the scan immediately, select Now.

-

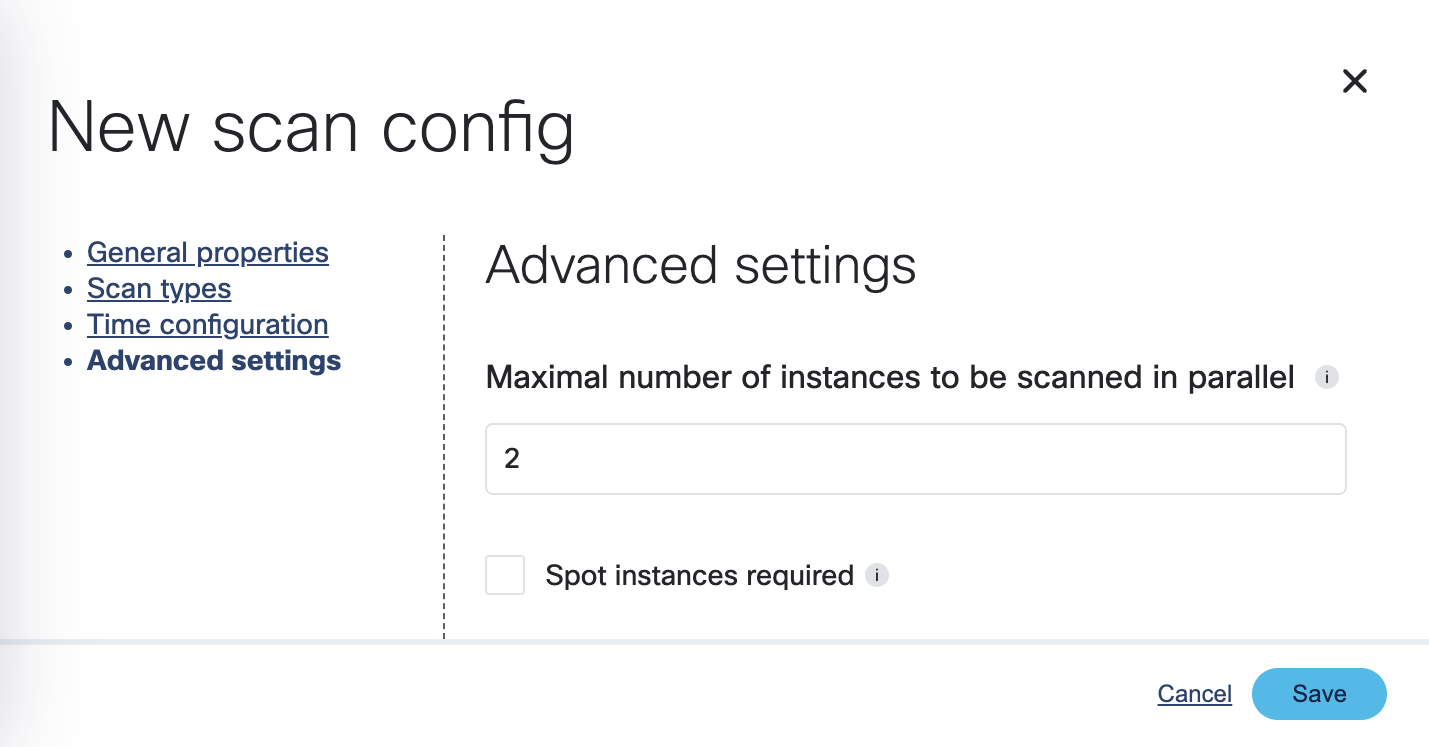

Optionally, adjust the number of scanners to run in parallel and whether to use spot instances on cloud providers, or

not.

-

Click Save. The new scan appears on the Scan Configurations tab.

-

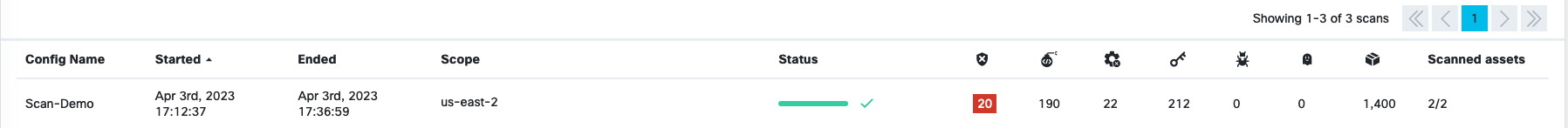

Once a scan is finished, you can browse around the various OpenClarity UI features and investigate the security scan reports.

2 - Concepts

The following sections give you the concepts and background information about the scans provided by OpenClarity.

2.1 - Software Bill of Materials

A software bill of materials (SBOM) is a list of all the components, libraries, and other dependencies that make up a software application, along with information about the versions, licenses, and vulnerabilities associated with each component. SBOMs are formal, structured documents that detail the components of a software product and its supply chain relationships.

SBOMs are important because organizations increasingly rely on open source and third-party software components to build and maintain their applications. These components can introduce security vulnerabilities and must be adequately managed and updated. SBOMs help you understand what open source and third-party components are used in your applications, and identify and address any security vulnerabilities.

In some scenarios, generating and publishing SBOMs is mandatory for compliance with regulations and industry standards that require organizations to disclose the use of open source and third-party software in their products.

SBOM Standards

There are several related standards, for example, CycloneDX, SPDX, SWID.

SPDX (Software Package Data Exchange) is a standard format for communicating a software package’s components, licenses, and copyrights. It is commonly used to document the open source components included in a proprietary software product. SPDX files can be read and understood by both humans and machines, which makes it easy to track and manage open source components in a software project. The SPDX format is maintained by the Linux Foundation.

CycloneDX is an open source standard for creating software bill of materials files. Like SPDX, it documents the components and licenses associated with a software package, but it is specifically designed for software supply chain security. CycloneDX is a more lightweight format than SPDX, which is intended to be more detailed. The CycloneDX format is maintained by OWASP.

SBOM Architecture

A typical SBOM architecture can be laid out as a tree-like dependency graph with the following key elements:

- Component inventory: Information about the components, libraries, and other assets used in the software, including version numbers, licenses, and vulnerabilities.

- Dependency mapping: A map of relationships between different components and libraries, showing how they depend on each other and how changes to one may impact the other.

- License management: It should also include information about the licenses of the components and libraries used to ensure that the software complies with legal and ethical obligations.
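These elements map onto fields of a CycloneDX JSON document: `components` holds the inventory (names, versions, purls, licenses) and `dependencies` holds the dependency map as `ref`/`dependsOn` pairs. The Python sketch below uses a hand-written, illustrative SBOM (not OpenClarity output) and walks the dependency graph; the helper names are hypothetical.

```python
# Minimal, hand-written CycloneDX JSON SBOM (illustrative data only).
SBOM = {
    "bomFormat": "CycloneDX",
    "specVersion": "1.5",
    "version": 1,
    "metadata": {"component": {"bom-ref": "my-app", "type": "application", "name": "my-app", "version": "1.0.0"}},
    "components": [
        {"bom-ref": "pkg:pypi/requests@2.31.0", "type": "library", "name": "requests", "version": "2.31.0",
         "purl": "pkg:pypi/requests@2.31.0", "licenses": [{"license": {"id": "Apache-2.0"}}]},
        {"bom-ref": "pkg:pypi/urllib3@2.0.7", "type": "library", "name": "urllib3", "version": "2.0.7",
         "purl": "pkg:pypi/urllib3@2.0.7", "licenses": [{"license": {"id": "MIT"}}]},
    ],
    "dependencies": [
        {"ref": "my-app", "dependsOn": ["pkg:pypi/requests@2.31.0"]},
        {"ref": "pkg:pypi/requests@2.31.0", "dependsOn": ["pkg:pypi/urllib3@2.0.7"]},
    ],
}


def transitive_dependencies(sbom: dict, ref: str) -> set[str]:
    """Return every component that `ref` depends on, directly or transitively (iterative DFS)."""
    graph = {d["ref"]: d.get("dependsOn", []) for d in sbom.get("dependencies", [])}
    seen: set[str] = set()
    stack = list(graph.get(ref, []))
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(graph.get(current, []))
    return seen


def license_inventory(sbom: dict) -> dict[str, list[str]]:
    """Map each component's purl (or name) to the SPDX license IDs declared for it."""
    return {
        comp.get("purl", comp["name"]): [
            lic["license"]["id"] for lic in comp.get("licenses", []) if "id" in lic.get("license", {})
        ]
        for comp in sbom.get("components", [])
    }
```

Walking from the application's `bom-ref` shows how a change in a deeply nested library (here `urllib3`) can affect the application through an intermediate dependency.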

SBOM Generators

There are two typical ways to generate an SBOM: during the build process, or after the build and deployment using a Software Composition Analysis tool. Trivy and Syft are two noteworthy open source generators among many others, both open source and commercial. Both can produce the CycloneDX format. It is also important to note that not all SBOM generators produce equal results. Each generator may detect some language libraries better than others, depending on its implementation, so it might take multiple runs through a few different generators to draw comprehensive insights.

OpenClarity content analyzer integrates with the following SBOM generators:

Multiple SBOMs for Accuracy

OpenClarity can run multiple SBOM generators in parallel, and unify their results to generate a more accurate document.

In such cases, OpenClarity compiles a merged SBOM from multiple open source analyzers, and delivers a comprehensive SBOM document report. Although OpenClarity does not generate SBOMs, it integrates with popular generators so that a combined document can provide amplified inputs that can be further analyzed using vulnerability scanners. Leveraging multiple SBOM documents can improve visibility into software dependency posture.

OpenClarity formats the merged SBOM to comply with the input requirements of vulnerability scanners before starting vulnerability scans.
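The idea of merging SBOMs from several generators can be illustrated with a simple union of components. This Python sketch de-duplicates by package URL (falling back to name@version) and keeps fields that only a later generator reported. It is a simplified illustration under those assumptions, not OpenClarity's actual merge logic; `merge_sbom_components` is a hypothetical name.

```python
def merge_sbom_components(*sboms: dict) -> list[dict]:
    """Union the components of several CycloneDX SBOMs, de-duplicated by purl (or name@version)."""
    merged: dict[str, dict] = {}
    for sbom in sboms:
        for comp in sbom.get("components", []):
            key = comp.get("purl") or f"{comp.get('name')}@{comp.get('version')}"
            if key not in merged:
                merged[key] = dict(comp)
            else:
                # Keep the first generator's values, but add fields only a later generator reported.
                for field_name, value in comp.items():
                    merged[key].setdefault(field_name, value)
    return list(merged.values())
```

A component found by only one generator still appears in the result, which is where the extra visibility of running several generators comes from.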

Note: OpenClarity can merge vulnerability scans from various sources like Grype and Trivy to generate a robust vulnerability scan report.

Scan SBOM Documents for Vulnerabilities

You can feed the generated SBOM documents to vulnerability scanners, which analyze the SBOMs and generate a vulnerability report detailing all known and fixed CVEs of the software components listed in the SBOM.

2.1.1 - SBOM Output Format

The openclarity-cli scan command can format the resulting SBOM into different formats to integrate with another system. The supported formats are:

| Format | Configuration Name |
| --- | --- |
| CycloneDX JSON (default) | cyclonedx-json |
| CycloneDX XML | cyclonedx-xml |
| SPDX JSON | spdx-json |
| SPDX Tag Value | spdx-tv |
| Syft JSON | syft-json |

CAUTION:

OpenClarity processes CycloneDX internally; the other formats are supported through conversion. The conversion can be lossy due to incompatibilities between formats, so in some cases not all fields or information are present in the resulting output.

To configure the openclarity-cli to use a format other than the default, the sbom.output_format config parameter can be used with the configuration name from above:

# Create config based on https://github.com/openclarity/openclarity/blob/main/.families.yaml
cat <<EOF > config.yml
sbom:
  enabled: true
  analyzers_list:
    - "syft"
  inputs:
    - input: "/dir-to-scan"
      input_type: "rootfs"
  output_format: "cyclonedx-json"
EOF

# Run scan
openclarity-cli scan --config config.yml

For more information on the CLI configuration, see the Example CLI Configuration.

2.2 - Vulnerability Scanning

Vulnerability scanning identifies weak spots in software code and dependencies. Vulnerability scanners can identify infrastructure, networks, applications, or website vulnerabilities. These tools scan various target systems for security flaws that attackers could exploit.

The scanners use the information contained in the SBOM to identify vulnerabilities and potential security risks within software applications. Vulnerability scanners use SBOM information to:

- Identify vulnerable components: Scanners use the SBOM to identify a software application’s components, then cross-reference this information with known vulnerabilities and security issues to identify vulnerable components within the software.

- Prioritize vulnerabilities: After the vulnerability scanner has identified all vulnerable components within the software application, it uses the SBOM to prioritize the vulnerabilities so you can focus on the most critical vulnerabilities.

- Identify supply chain risks: SBOMs provide visibility into the software supply chain, enabling vulnerability scanners to identify third-party or security risks. As a result, organizations can mitigate supply chain risks and reduce their overall security exposure.

- Track changes and updates: Software vulnerability scanners use SBOM information to determine whether software changes have introduced new vulnerabilities or security risks.
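The first two uses above (identifying vulnerable components and prioritizing them) can be sketched in a few lines of Python. The advisory records, their field names, and the naive numeric version comparison are all assumptions made for illustration; real scanners such as Grype and Trivy use curated databases and ecosystem-aware version matching. The IDs in the usage data are placeholders, not real CVEs.

```python
SEVERITY_RANK = {"CRITICAL": 4, "HIGH": 3, "MEDIUM": 2, "LOW": 1}


def parse_version(version: str) -> tuple[int, ...]:
    """Naive numeric version parser: "2.0.7" -> (2, 0, 7)."""
    return tuple(int(part) for part in version.split("."))


def find_vulnerable(components: list[dict], advisories: list[dict]) -> list[dict]:
    """Return a finding for each SBOM component whose version is below an advisory's fixed version."""
    findings = []
    for comp in components:
        for adv in advisories:
            if adv["package"] == comp["name"] and parse_version(comp["version"]) < parse_version(adv["fixed_in"]):
                findings.append({**adv, "installed": comp["version"]})
    return findings


def prioritize(findings: list[dict]) -> list[dict]:
    """Sort findings by severity, then CVSS score, highest first."""
    return sorted(
        findings,
        key=lambda f: (SEVERITY_RANK.get(f["severity"].upper(), 0), f.get("cvss") or 0.0),
        reverse=True,
    )
```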

The SBOM is a critical tool for vulnerability scanners, providing the information needed to identify, prioritize, and mitigate security risks within software applications. In addition, scanners also rely on other types of inputs, as listed below.

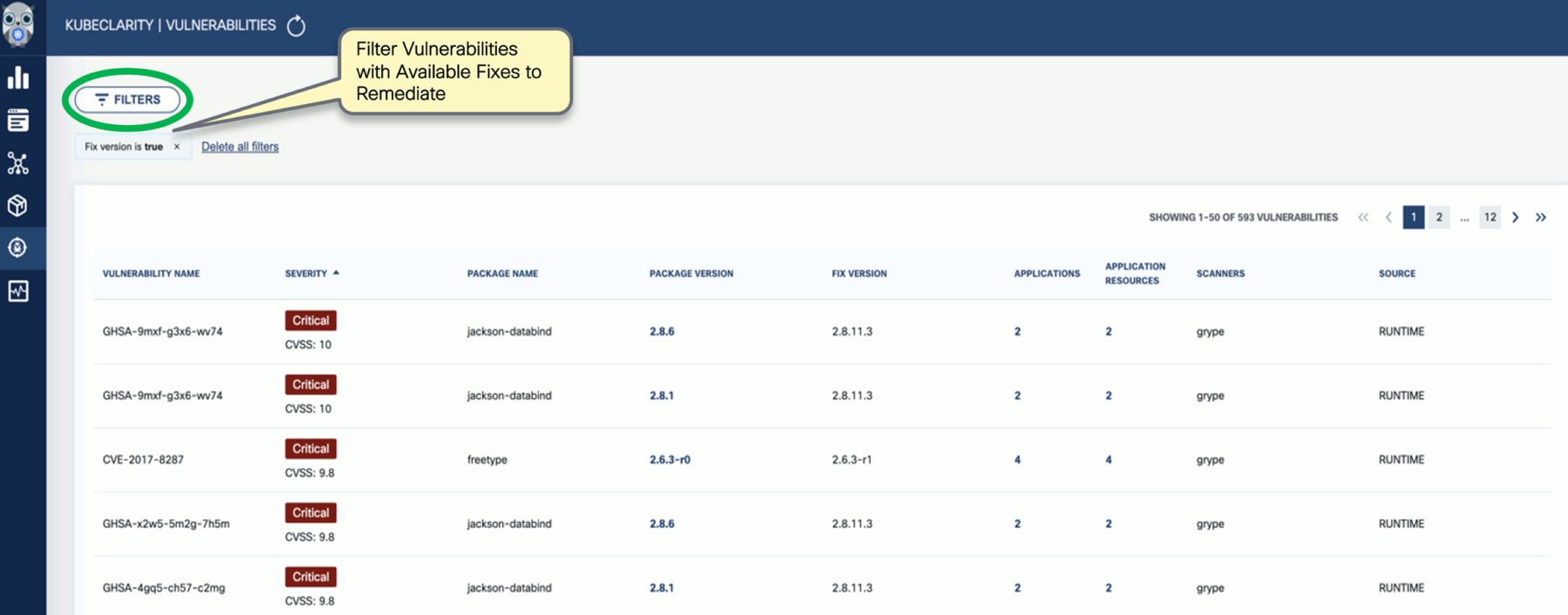

OpenClarity and Vulnerability Scanning

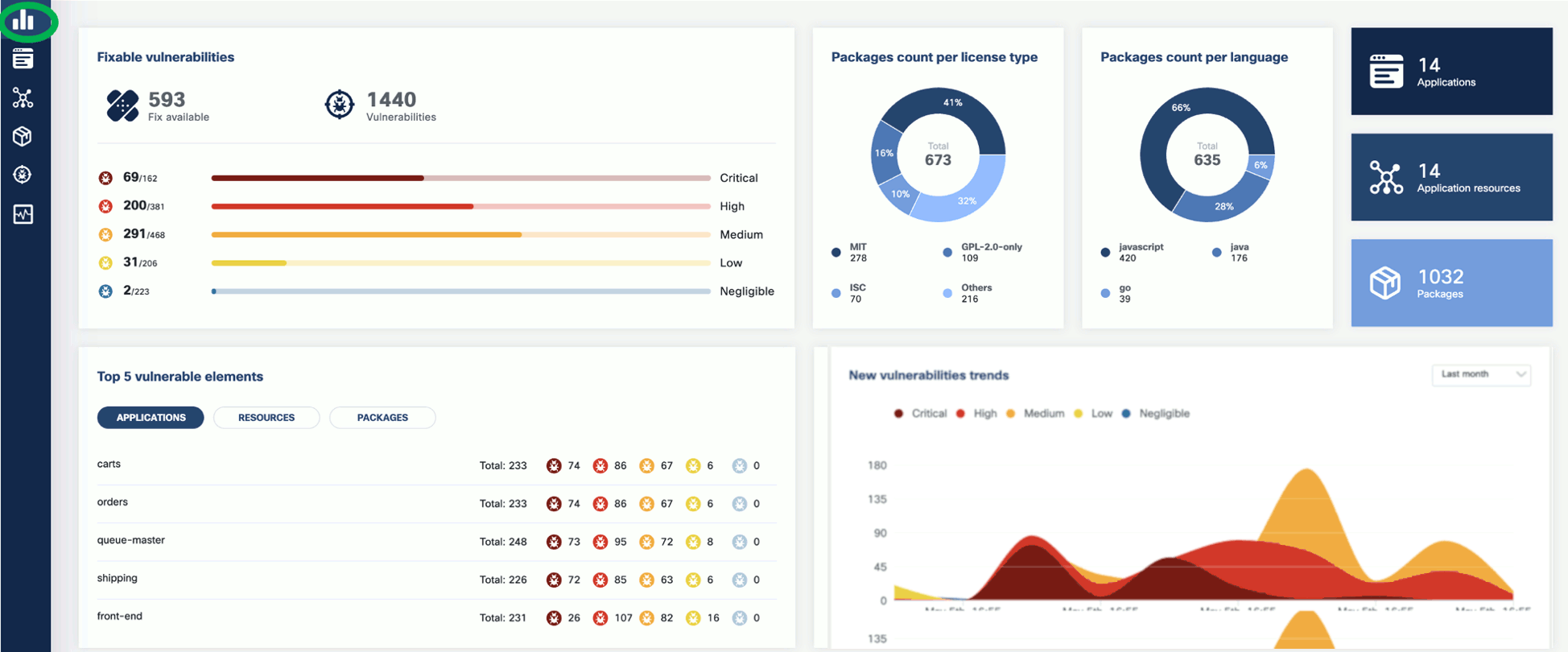

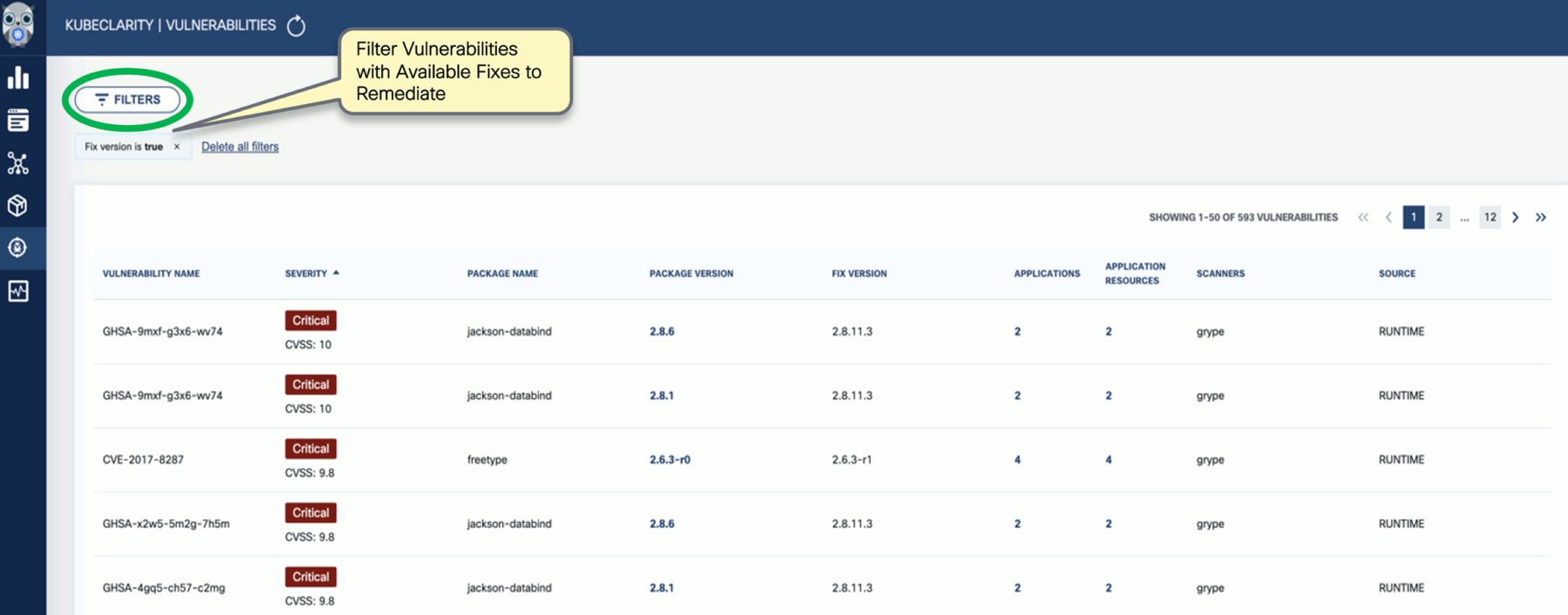

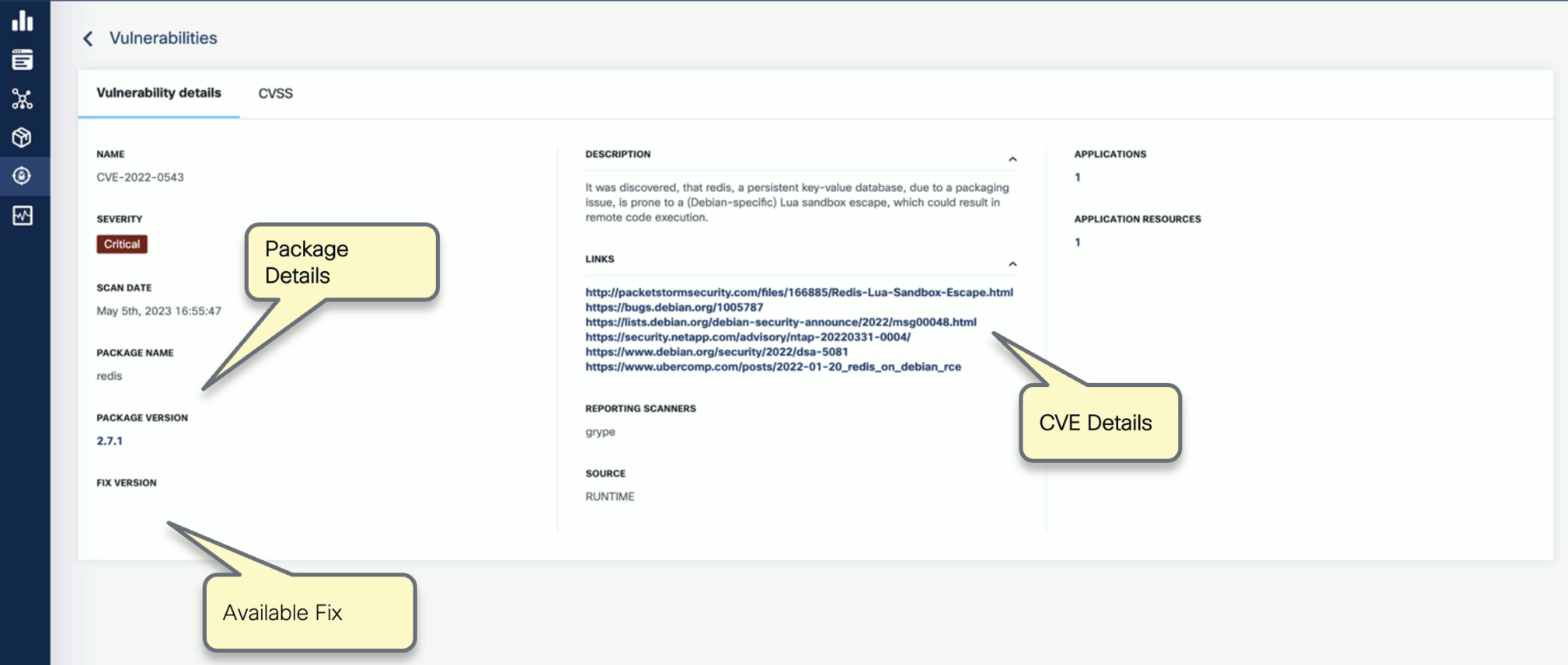

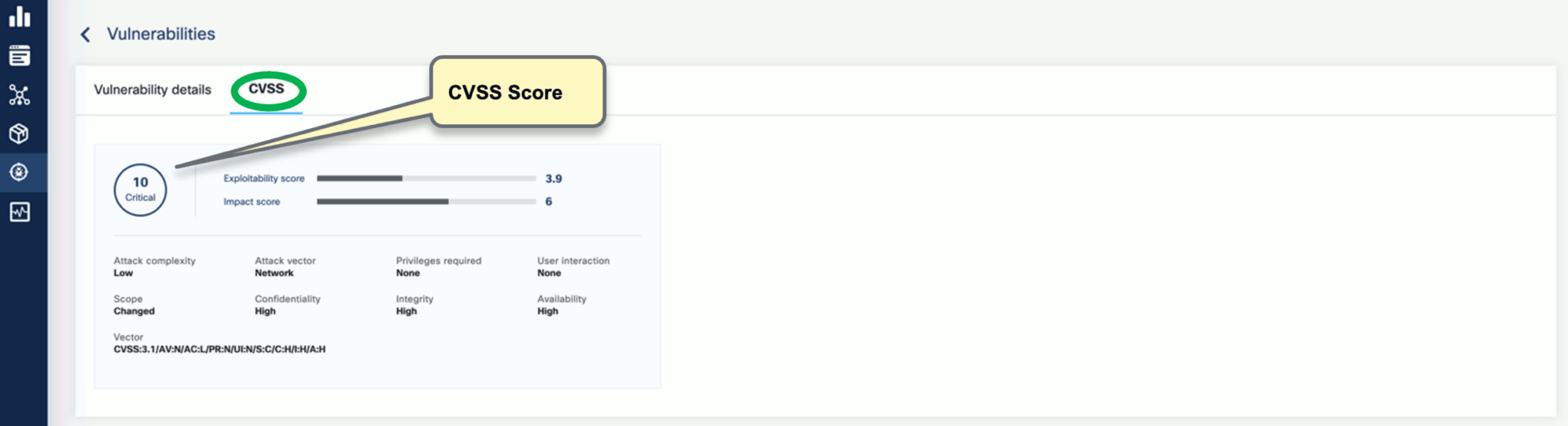

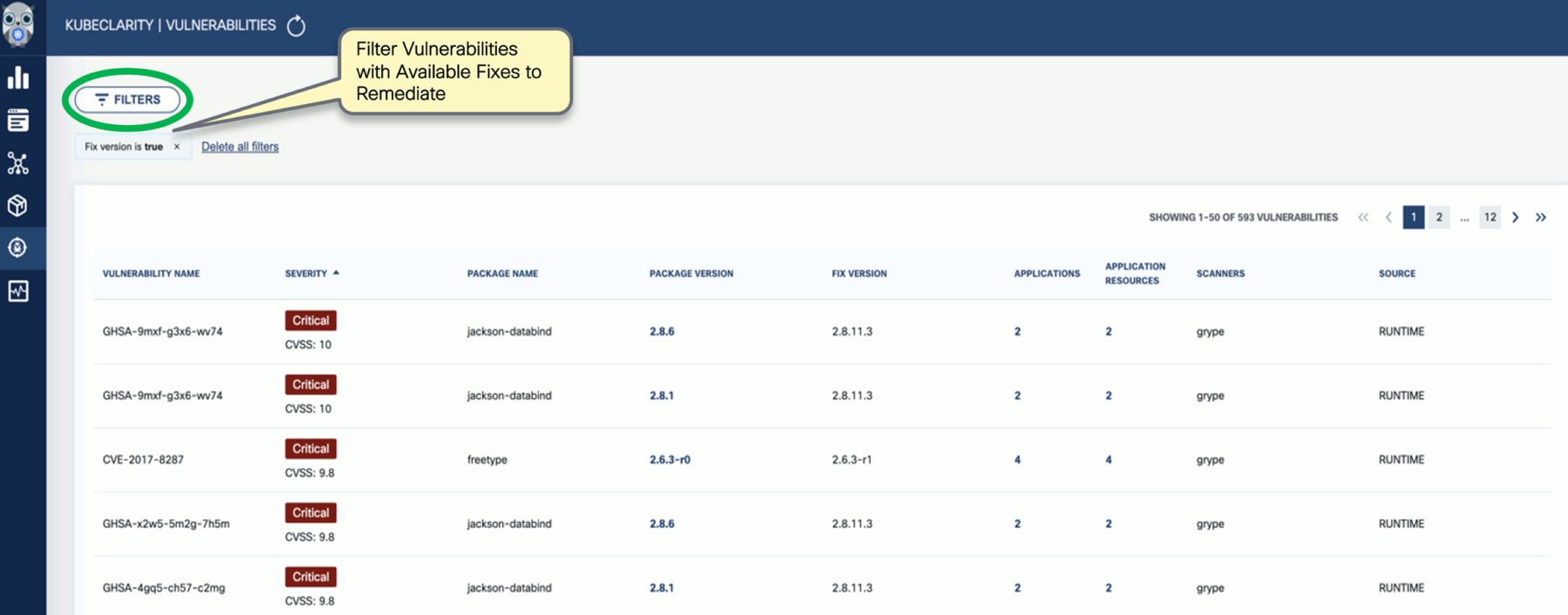

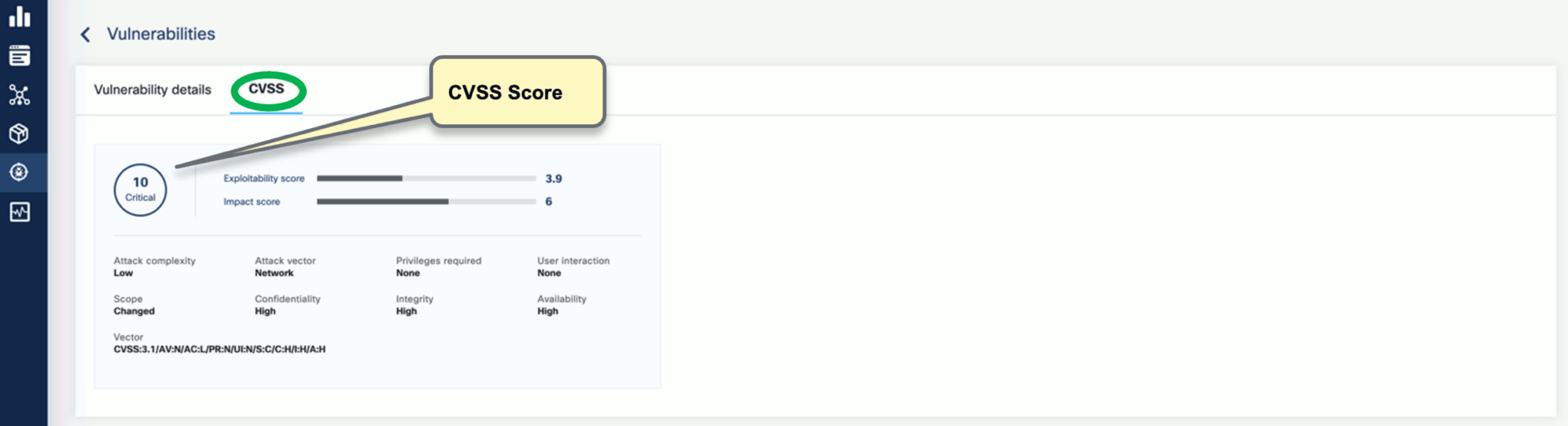

OpenClarity isn’t a vulnerability scanner, but it integrates with top open source vulnerability scanners. It also helps with prioritization and risk management through visualization and filtering. Prioritizing CVEs is often necessary because of the sheer volume of identified CVEs. With OpenClarity’s vulnerability trending dashboard and APIs, you can locate and drill down into a specific CVE in your application or infrastructure.

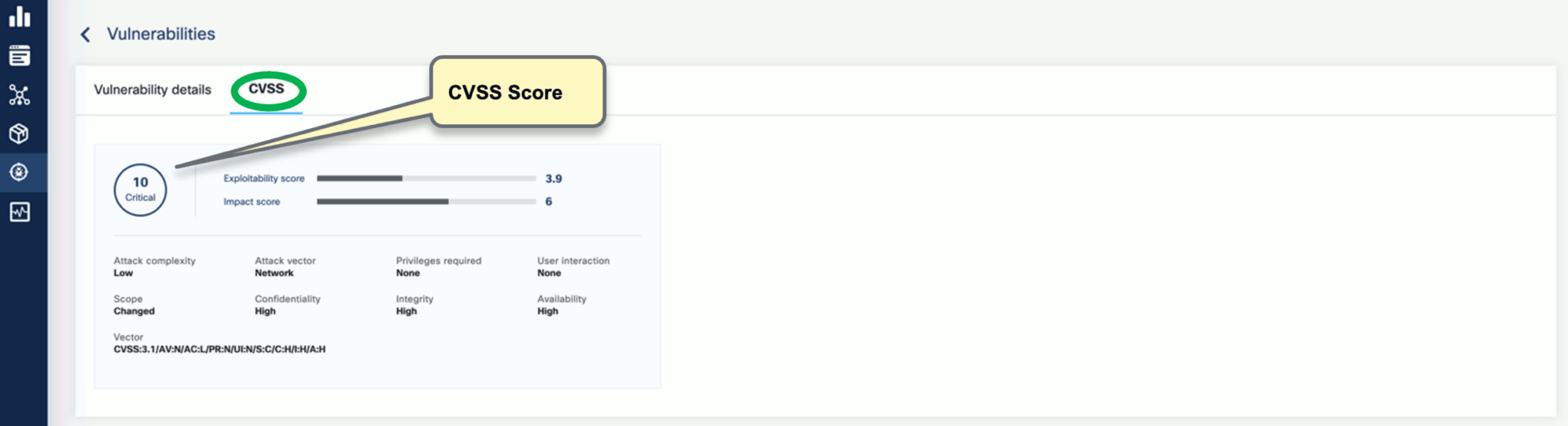

OpenClarity features a range of flexible and dynamic filters that help map CVEs down to an application->package->Image level. Additionally, it normalizes reports from multiple scanners and calculates missing CVSS (Common Vulnerability Scoring System) scores.
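The document does not say exactly how OpenClarity computes missing CVSS scores. One standard way to derive a score is the CVSS v3.1 base-score formula applied to a vector string; the Python sketch below implements that published formula (base metrics only, including the specification's Roundup function). Treat it as an illustration of the scoring math, not as OpenClarity's code.

```python
import math

AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}
AC = {"L": 0.77, "H": 0.44}
UI = {"N": 0.85, "R": 0.62}
CIA = {"H": 0.56, "L": 0.22, "N": 0.0}
# Privileges Required weights depend on Scope: (Unchanged, Changed).
PR = {"N": (0.85, 0.85), "L": (0.62, 0.68), "H": (0.27, 0.5)}


def roundup(value: float) -> float:
    """CVSS v3.1 Roundup: smallest one-decimal number >= value, robust to float noise."""
    int_input = round(value * 100000)
    if int_input % 10000 == 0:
        return int_input / 100000.0
    return (math.floor(int_input / 10000) + 1) / 10.0


def cvss31_base_score(vector: str) -> float:
    """Compute the CVSS v3.1 base score from a vector such as 'CVSS:3.1/AV:N/AC:L/...'."""
    m = dict(part.split(":") for part in vector.split("/") if not part.startswith("CVSS"))
    changed = m["S"] == "C"
    iss = 1 - (1 - CIA[m["C"]]) * (1 - CIA[m["I"]]) * (1 - CIA[m["A"]])
    if changed:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    else:
        impact = 6.42 * iss
    exploitability = 8.22 * AV[m["AV"]] * AC[m["AC"]] * PR[m["PR"]][int(changed)] * UI[m["UI"]]
    if impact <= 0:
        return 0.0
    if changed:
        return roundup(min(1.08 * (impact + exploitability), 10))
    return roundup(min(impact + exploitability, 10))
```

For example, `cvss31_base_score("CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H")` returns `9.8`.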

OpenClarity vulnerability scanner integrates with the following scanners:

OpenClarity supports both automatic scans to find common vulnerabilities quickly and efficiently, and manual scans to help verify automated scans, and also to help identify more complex and less common vulnerabilities. In addition to conventional scans, OpenClarity also provides multi-scanner integration.

Multi-scanner Architecture

The OpenClarity infrastructure lets you configure multiple scanners and run them simultaneously. Scanners in OpenClarity are designed to work in parallel.

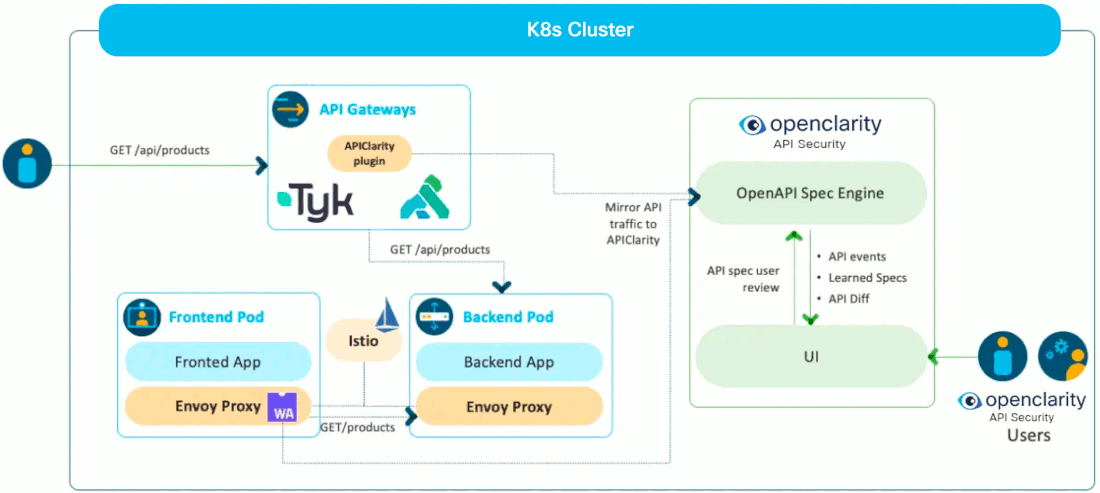

The following figure shows the multi-scanner architecture for vulnerability scanning: OpenClarity preprocesses the SBOMs so they conform to the specific formatting requirements of the specific scanner. Each scanner may have different types and unique formatting expectations. The scanners analyze the incoming data and generate vulnerability outputs in their native formats.

OpenClarity can merge the vulnerability reports of different scanners into one report that includes severity levels, sources, and available fixes. These reports let you filter and focus on specific areas of vulnerabilities for further investigation and resolution.
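Merging per-scanner reports can be sketched as grouping findings by (vulnerability ID, package, version), recording which scanners reported each one and the union of fixed versions. When scanners disagree on severity, this sketch keeps the most severe rating, which is a conservative choice of our own, not necessarily what OpenClarity does. The report shape and `merge_scanner_reports` are hypothetical.

```python
SEVERITY_RANK = {"UNKNOWN": 0, "NEGLIGIBLE": 1, "LOW": 2, "MEDIUM": 3, "HIGH": 4, "CRITICAL": 5}


def merge_scanner_reports(reports: dict[str, list[dict]]) -> list[dict]:
    """Merge findings from several scanners that refer to the same (ID, package, version)."""
    merged: dict[tuple, dict] = {}
    for scanner, findings in reports.items():
        for f in findings:
            key = (f["id"], f["package"], f["version"])
            entry = merged.setdefault(key, {
                "id": f["id"], "package": f["package"], "version": f["version"],
                "severity": "UNKNOWN", "sources": [], "fixed_in": set(),
            })
            entry["sources"].append(scanner)
            severity = f.get("severity", "UNKNOWN").upper()
            # Keep the most severe rating reported by any scanner.
            if SEVERITY_RANK.get(severity, 0) > SEVERITY_RANK.get(entry["severity"], 0):
                entry["severity"] = severity
            entry["fixed_in"].update(f.get("fixed_in", []))
    return list(merged.values())
```

The `sources` list makes it easy to filter for findings confirmed by more than one scanner.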

Run Vulnerability Scans

For details on running vulnerability scans with OpenClarity, see Getting Started.

2.3 - Plugins

Plugins provide additional scanning capabilities to the OpenClarity ecosystem.

Project structure:

- runner - Provides necessary logic to manage scanner plugins in OpenClarity.

- sdk - Language-specific libraries, templates, and examples to aid with the implementation of scanner plugins.

- store - Collection of available plugins that can be directly used in OpenClarity.

Requirements

Scanner plugins are distributed as containers and require Docker Engine on the host that runs the actual scanning via the OpenClarity CLI.

Support

List of supported environments:

- AWS

- GCP

- Azure

- Docker

List of unsupported environments:

- Kubernetes - We plan on adding plugin support to Kubernetes once we have dealt with all the security considerations.

Note: Plugin support has been tested against OpenClarity installation artifacts for the given environments.

Usage

You can start using plugins via Plugins Store.

For example, you can pass the .families.yaml scan config file defined below to the OpenClarity CLI scan command.

This configuration uses the KICS scanner to scan the /tmp directory for IaC security misconfigurations.

# --- .families.yaml
plugins:
  enabled: true
  scanners_list:
    - "kics"
  inputs:
    - input: "/tmp"
      input_type: "rootfs"
  scanners_config:
    kics:
      image_name: "ghcr.io/openclarity/openclarity-plugin-kics:latest"
      config: "{}"

SDKs

You can use one of the available SDKs in your language of choice to quickly develop scanner plugins for OpenClarity.

List of supported languages:

3 - Using OpenClarity

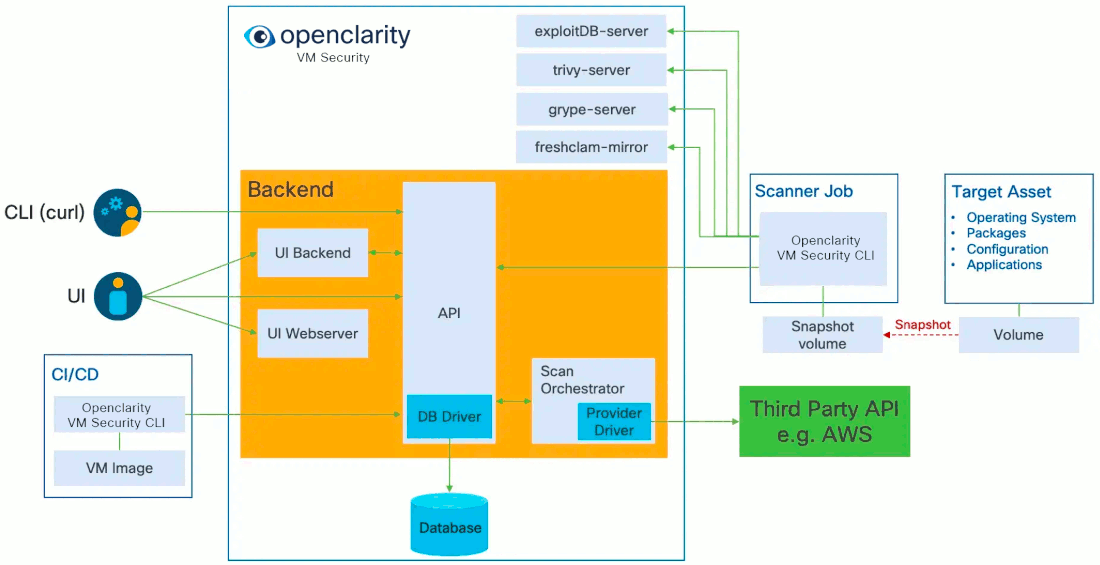

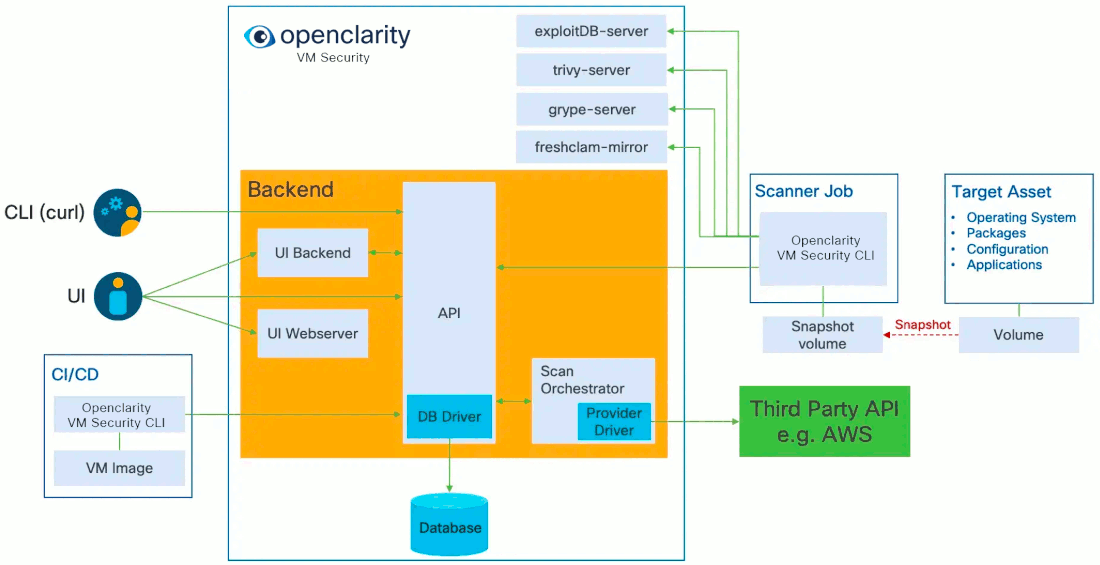

3.1 - OpenClarity Stack

Today, OpenClarity has two halves: the OpenClarity control plane and the OpenClarity CLI.

The OpenClarity control plane includes several microservices:

- API Server: The OpenClarity API for managing all objects in the OpenClarity system. This is the only component in the system that talks to the DB.

- Orchestrator: Orchestrates and manages the life cycle of OpenClarity scan configs, scans, and asset scans. Within the Orchestrator there is a pluggable “provider” that connects the orchestrator to the environment to be scanned and abstracts asset discovery, VM snapshotting, and creation of the scanner VMs. (Note: Providers are available for the environments covered in Getting Started: AWS, Azure, GCP, Docker, and Kubernetes.)

- UI Backend: A separate backend API that offloads some processing from the browser to the infrastructure, to process and filter data closer to the source.

- UI Webserver: A server that serves the UI static files.

- DB: Stores the OpenClarity objects from the API. Supported options are SQLite and Postgres.

- Scanner Helper services: These services support the OpenClarity CLI by offloading work that would otherwise need to be done in every scanner, for example downloading the latest vulnerability or malware signatures from the various DB sources. The components included today are:

- grype-server: A REST API wrapper around the Grype vulnerability scanner

- trivy-server: Trivy vulnerability scanner server

- exploitDB server: A REST API that wraps the Exploit DB CVE-to-exploit mapping logic

- freshclam-mirror: A mirror of the ClamAV malware signatures

The OpenClarity CLI contains all the logic for performing a scan, from mounting attached volumes, through the pluggable infrastructure for all scanner families, to exporting the results to the OpenClarity API.

These components are containerized and can be deployed in a number of different ways. For example, our CloudFormation installer deploys OpenClarity on a VM using Docker in a dedicated AWS Virtual Private Cloud (VPC).

Once the OpenClarity server instance has been deployed and the scan configurations have been created, OpenClarity discovers VM resources within the scan range defined by the scan configuration (e.g., by region, instance tag, and security group). Once the asset list has been created, snapshots of the assets are taken, and a new scanner VM is launched using the snapshots as attached volumes. The OpenClarity CLI running within the scanner VM performs the configured analysis on the mounted snapshot and reports the results to the OpenClarity API. The OpenClarity backend then processes these results into findings.
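The following Python sketch makes the discovery step concrete. It models how a scan range built from region, tag, and security group filters might select assets, and lists the per-asset steps that follow. The Asset and ScanScope types and the matching rules are illustrative assumptions: all listed tags must match, any listed security group is enough, and empty filters match everything. OpenClarity's real scan configurations express scope differently.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Iterable, Iterator


@dataclass
class Asset:  # hypothetical model of a discovered VM
    instance_id: str
    region: str
    tags: dict[str, str] = field(default_factory=dict)
    security_groups: set[str] = field(default_factory=set)


@dataclass
class ScanScope:  # hypothetical scan range; empty filters match everything
    regions: set[str] = field(default_factory=set)
    tags: dict[str, str] = field(default_factory=dict)        # all must match
    security_groups: set[str] = field(default_factory=set)    # any may match


def in_scope(asset: Asset, scope: ScanScope) -> bool:
    if scope.regions and asset.region not in scope.regions:
        return False
    if any(asset.tags.get(key) != value for key, value in scope.tags.items()):
        return False
    if scope.security_groups and not (asset.security_groups & scope.security_groups):
        return False
    return True


def plan_scans(assets: Iterable[Asset], scope: ScanScope) -> Iterator[tuple[str, list[str]]]:
    """Yield the per-asset steps described above for every in-scope asset."""
    for asset in assets:
        if in_scope(asset, scope):
            yield asset.instance_id, [
                "snapshot the asset's volumes",
                "launch a scanner VM with the snapshots attached",
                "run the OpenClarity CLI on the mounted snapshot",
                "report results to the OpenClarity API",
            ]
```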

3.2 - Initiate a scan using the CLI

Reporting results into a file

./bin/openclarity-cli scan --config ~/testConf.yaml -o outputfile

To report results to the OpenClarity backend, you need to create an asset and an asset scan object before running the scan, because the scan command requires an asset scan ID (--asset-scan-id).

Reporting results to OpenClarity backend

ASSET_ID=$(./bin/openclarity-cli asset-create --file assets/dir-asset.json --server http://localhost:8080/api --jsonpath {.id})

ASSET_SCAN_ID=$(./bin/openclarity-cli asset-scan-create --asset-id "$ASSET_ID" --server http://localhost:8080/api --jsonpath {.id})

./bin/openclarity-cli scan --config ~/testConf.yaml --server http://localhost:8080/api --asset-scan-id $ASSET_SCAN_ID

Using a one-liner:

./bin/openclarity-cli asset-create --file docs/assets/dir-asset.json --server http://localhost:8080/api --update-if-exists --jsonpath {.id} | xargs -I{} ./bin/openclarity-cli asset-scan-create --asset-id {} --server http://localhost:8080/api --jsonpath {.id} | xargs -I{} ./bin/openclarity-cli scan --config ~/testConf.yaml --server http://localhost:8080/api --asset-scan-id {}
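The same three-step flow can be scripted. The Python sketch below shells out to openclarity-cli using only the subcommands and flags shown in the one-liner above. Like the one-liner, it assumes that --jsonpath {.id} makes each create command print only the new object's ID. The run parameter is a hypothetical injection point that lets you exercise the flow without a real CLI or API server.

```python
import subprocess
from typing import Callable, Sequence

Runner = Callable[[Sequence[str]], str]


def run_cli(args: Sequence[str]) -> str:
    """Run a command and return its stripped stdout; raise on a non-zero exit."""
    completed = subprocess.run(list(args), capture_output=True, text=True, check=True)
    return completed.stdout.strip()


def scan_and_report(cli: str, asset_file: str, config: str, server: str,
                    run: Runner = run_cli) -> str:
    """Create (or update) the asset and an asset scan, run the scan, return the asset scan ID."""
    asset_id = run([cli, "asset-create", "--file", asset_file, "--server", server,
                    "--update-if-exists", "--jsonpath", "{.id}"])
    asset_scan_id = run([cli, "asset-scan-create", "--asset-id", asset_id,
                         "--server", server, "--jsonpath", "{.id}"])
    run([cli, "scan", "--config", config, "--server", server,
         "--asset-scan-id", asset_scan_id])
    return asset_scan_id


if __name__ == "__main__":
    import os
    print(scan_and_report("./bin/openclarity-cli", "docs/assets/dir-asset.json",
                          os.path.expanduser("~/testConf.yaml"), "http://localhost:8080/api"))
```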

3.2.1 - Example CLI Configuration

This section provides a sample configuration for scanner families supported by the OpenClarity CLI tool. Each family can be enabled or disabled, and configured with specific options. The configuration is used to define which scanners to run, what inputs to scan, and the configuration for each scanner.

# This file contains a sample configuration for scanner families supported by the OpenClarity CLI tool.

# Each family can be enabled or disabled, and configured with specific options.

# The configuration is used to define the scanners to run, the inputs to scan, and the configuration for each scanner.

# SBOM (Software Bill of Materials) scanner family
sbom:
  enabled: false # Enable or disable SBOM scanner family
  analyzers_list: # List of analyzers to run
    - "syft"
    - "trivy"
    - "windows"
    - "gomod"
  inputs: # List of inputs to scan
    - input: "node:slim"
      input_type: "image" # Type of input (image, rootfs, etc.)
    # - input: "/mnt"
    #   input_type: "rootfs"
    # - input: "nginx:1.10"
    #   input_type: "image"
  # merge_with: # Merge multiple SBOMs into one
  #   - sbom_path: "nginx.11.cdx.json" # Path to SBOM file to merge with
  local_image_scan: true # Scan images from local docker daemon (true) or from remote registry (false)
  registry:
    skip-verify-tls: false # Skip TLS verification
    use-http: false # Use HTTP instead of HTTPS
    auths: # Registry authentication
      authority: "authority"
      username: "username"
      password: "password"
      token: "token"
  output_format: "cyclonedx-json" # Output format for SBOMs (cyclonedx-json, cyclonedx-xml, spdx-json, spdx-tv, syft-json)
  analyzers_config: # Configuration for each analyzer
    syft:
      scope: "Squashed" # Scope of the scan (squashed, all-layers)
      exclude_paths: # Paths to exclude from the scan
        - "./dev"
        - "./proc"
      ## Overrides parent sbom configs
      # local_image_scan: ...
      # registry: ...
    trivy:
      timeout: 300 # Timeout in seconds
      cache_dir: /tmp/.trivy/cache # Cache directory
      temp_dir: /tmp/.trivy/ # Temp directory
      ## Overrides parent sbom configs
      # local_image_scan: ...
      # registry: ...

# Vulnerabilities scanner family
vulnerabilities:
  enabled: false # Enable or disable vulnerabilities scanner family
  scanners_list: # List of scanners to run
    - "grype"
    - "trivy"
  inputs: # List of inputs to scan
    - input: "nginx:1.12"
      input_type: "image" # Type of input (image, rootfs, etc.)
    # - input: "nginx:1.13"
    #   input_type: "image"
    - input: "/mnt/"
      input_type: "sbom"
  local_image_scan: true # Scan images from local docker daemon (true) or from remote registry (false)
  registry: # Registry configuration
    skip-verify-tls: false
    use-http: false
    auths:
      authority: "authority"
      username: "username"
      password: "password"
      token: "token"
  scanners_config: # Configuration for each scanner
    grype:
      mode: "LOCAL" # Mode of operation (LOCAL, REMOTE). LOCAL uses local database, REMOTE uses Grype server.
      local_grype_config:
        update_db: true # Update the database
        db_root_dir: "/tmp/" # Database root directory
        listing_url: "https://toolbox-data.anchore.io/grype/databases/listing.json" # Listing URL
        max_allowed_built_age: "120h" # Max allowed built age
        listing_file_timeout: "60s" # Listing file timeout
        update_timeout: "60s" # Update timeout
        scope: "squashed" # Scope of the scan (squashed, all-layers)
        ## Overrides parent sbom configs
        # local_image_scan: ...
        # registry: ...
      remote_grype_config:
        grype_server_address: "" # Grype server address
        grype_server_timeout: "2m" # Grype server timeout
        grype_server_schemes: [] # Grype server schemes
    trivy:
      timeout: 300 # Timeout in seconds
      cache_dir: /tmp/.trivy/cache # Cache directory
      temp_dir: /tmp/.trivy/ # Temp directory
      server_addr: "trivy.example.com" # Trivy server address
      server_token: "token" # Trivy server token
      ## Overrides parent sbom configs
      # registry: ...

# Secrets scanner family
secrets:
  enabled: false # Enable or disable secrets scanner family
  scanners_list: # List of scanners to run
    - "gitleaks"
  strip_input_paths: false # Strip input paths from the output
  inputs: # List of inputs to scan
    - input: "/"
      input_type: "rootfs"
  scanners_config: # Configuration for each scanner
    gitleaks:
      binary_path: "/usr/local/bin/gitleaks" # Path to gitleaks binary

# Exploits scanner family
exploits:
  enabled: false # Enable or disable exploits scanner family
  scanners_list: # List of scanners to run
    - "exploitdb"
  inputs: # List of inputs to scan
    - input: "CVE-2024-5535,CVE-2023-3446"
      input_type: "csv"
  scanners_config: # Configuration for each scanner
    exploit_db:
      base_url: "http://localhost:1326" # Base URL for the ExploitDB server

# Misconfigurations scanner family
misconfiguration:
  enabled: false # Enable or disable misconfigurations scanner family
  scanners_list: # List of scanners to run
    - "cisdocker"
    - "lynis"
    - "fake"
  strip_input_paths: false # Strip input paths from the output
  inputs: # List of inputs to scan
    - input: "/"
      input_type: "rootfs"
  scanners_config: # Configuration for each scanner
    cisdocker:
      timeout: "60s" # Timeout
      registry: # Registry configuration
        skip-verify-tls: false
        use-http: false
        auths:
          authority: "authority"
          username: "username"
          password: "password"
          token: "token"
    lynis:
      binary_path: "/usr/local/bin/lynis" # Path to Lynis binary

# InfoFinder scanner family
infofinder:
  enabled: false # Enable or disable infofinder scanner family
  scanners_list: # List of scanners to run
    - "sshTopology"
  strip_input_paths: false # Strip input paths from the output
  inputs: # List of inputs to scan
    - input: "/"
      input_type: "rootfs"
  scanners_config: {}

# Malware scanner family
malware:
  enabled: false # Enable or disable malware scanner family
  scanners_list: # List of scanners to run
    - "clam"
    - "yara"
  strip_input_paths: false # Strip input paths from the output
  inputs: # List of inputs to scan
    - input: "/"
      input_type: "rootfs"
  scanners_config: # Configuration for each scanner
    clam:
      freshclam_binary_path: "/usr/local/bin/freshclam" # Path to freshclam binary
      freshclam_config_path: "/etc/clamav/freshclam.conf" # Path to freshclam configuration file
      alternative_freshclam_mirror_url: "" # Alternative freshclam mirror URL. Config option cannot include servers under *.clamav.net.
      use_native_clamscan: false # Scan using native clamscan command (true) instead of daemon clamdscan (false)
      clamscan_binary_path: "/usr/local/bin/clamscan" # Path to clamscan binary
      clamscan_exclude_files: # Files to exclude from the scan
        - "^.*\\.log$"
      clamscan_exclude_dirs: # Directories to exclude from the scan
        - "^/sys"
      clam_daemon_binary_path: "/usr/local/bin/clamd" # Path to clamd binary
      clam_daemon_config_path: "/etc/clamav/clamd.conf" # Path to clamd configuration file
      clam_daemon_client_binary_path: "/usr/local/bin/clamdscan" # Path to clamdscan binary
    yara:
      yara_binary_path: "/usr/local/bin/yara" # Path to yara binary
      compiled_rule_url: "" # URL to download compiled rules
      rule_sources: # List of rule sources
        - name: ""
          url: ""
      yarac_binary_path: "/usr/local/bin/yarac" # Path to yarac binary
      cache_dir: "/tmp/.yara" # Cache directory
      directories_to_scan: [] # Directories to scan

# Rootkits scanner family
rootkits:
  enabled: false # Enable or disable rootkits scanner family
  scanners_list: # List of scanners to run
    - "chkrootkit"
  strip_input_paths: false # Strip input paths from the output
  inputs: # List of inputs to scan
    - input: "/"
      input_type: "rootfs"
  scanners_config: # Configuration for each scanner
    chkrootkit:
      binary_path: "/usr/local/bin/chkrootkit" # Path to chkrootkit binary

# Plugins scanner family
plugins:
  enabled: false # Enable or disable plugins scanner family
  binary_mode: false # Use binary mode for plugins
  binary_artifacts_path: "" # Path to binary artifacts
  binary_artifacts_clean: true # Clean binary artifacts after execution
  scanners_list: # List of scanners to run
    - "kics"
  inputs: # List of inputs to scan
    - input: "/"
      input_type: "rootfs"
  scanners_config: # Configuration for each scanner
    kics:
      image_name: "ghcr.io/openclarity/openclarity-plugin-kics:latest" # Image name for KICS plugin
      config: "{\"preview-lines\": 3, \"report-formats\": [\"json\" ], \"platform\": [], \"max-file-size-flag\": 100, \"disable-secrets\": true, \"query-exec-timeout\": 60, \"silent\": true, \"minimal\": true}" # Configuration example for KICS
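The clamscan_exclude_files and clamscan_exclude_dirs entries in the malware family are regular expressions, and YAML double-quoted escaping determines what actually reaches ClamAV. The Python sketch below approximates how those two patterns classify paths. It assumes that ClamAV matches them against full paths and that these simple patterns behave the same in Python's re module as in ClamAV's regex engine. The is_excluded function is a hypothetical helper.

```python
import re

# Exclusion patterns copied from the malware family above. Inside a YAML
# double-quoted string, "\\." decodes to the two characters \. so the regex
# matches a literal dot; a Python non-raw string decodes "\\." the same way.
CLAMSCAN_EXCLUDE_FILES = ["^.*\\.log$"]
CLAMSCAN_EXCLUDE_DIRS = ["^/sys"]


def is_excluded(path: str, is_dir: bool) -> bool:
    """Approximate whether a path would be skipped by the exclude patterns."""
    patterns = CLAMSCAN_EXCLUDE_DIRS if is_dir else CLAMSCAN_EXCLUDE_FILES
    return any(re.search(pattern, path) for pattern in patterns)


if __name__ == "__main__":
    for path, is_dir in [("/var/log/syslog.log", False), ("/var/log/catalog", False),
                         ("/sys/kernel", True), ("/sysroot", True), ("/usr/sys", True)]:
        print(f"{path:22} excluded={is_excluded(path, is_dir)}")
```

Note that ^/sys also matches paths such as /sysroot. If only the /sys tree should be skipped, a tighter pattern such as ^/sys(/|$) may be preferable.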

3.3 - OpenClarity SDKs

See the scanner module for the SDK.

4 - Features

OpenClarity provides a wide range of features for asset scanning and discovery:

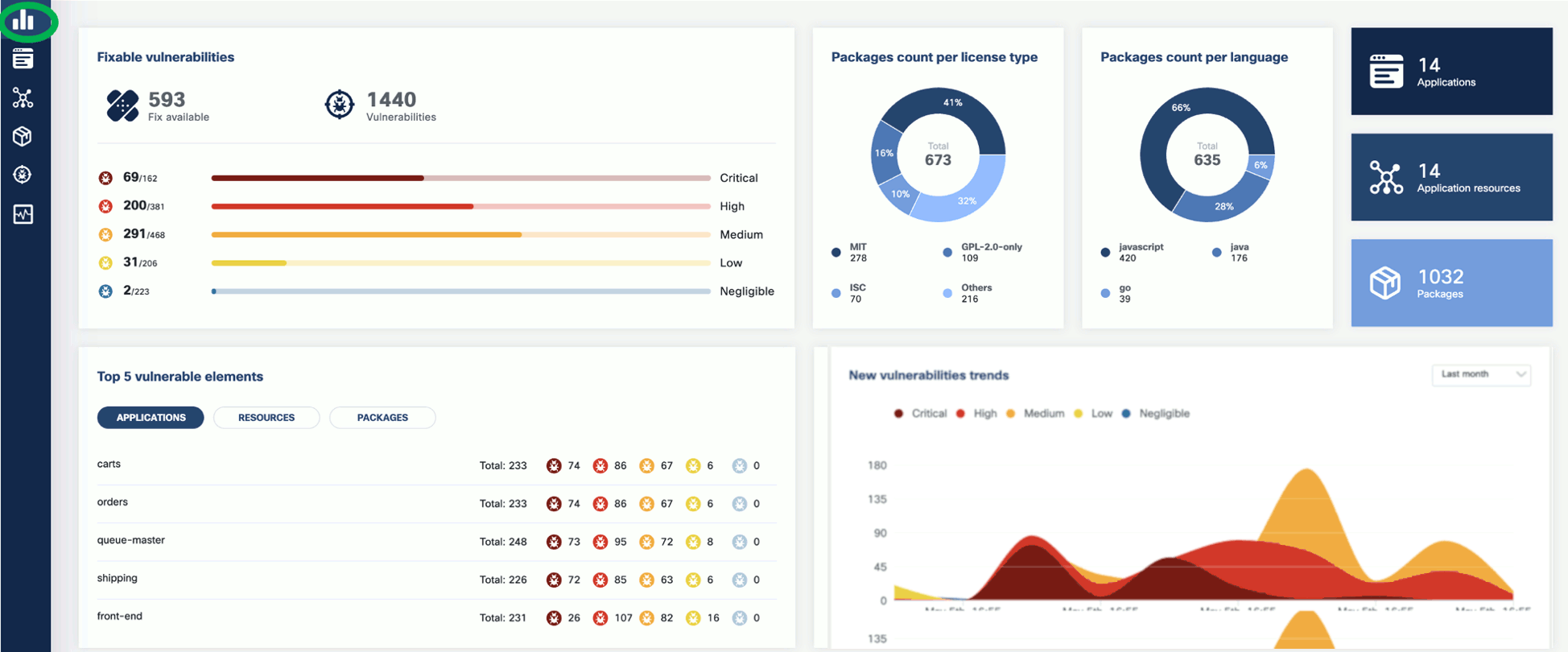

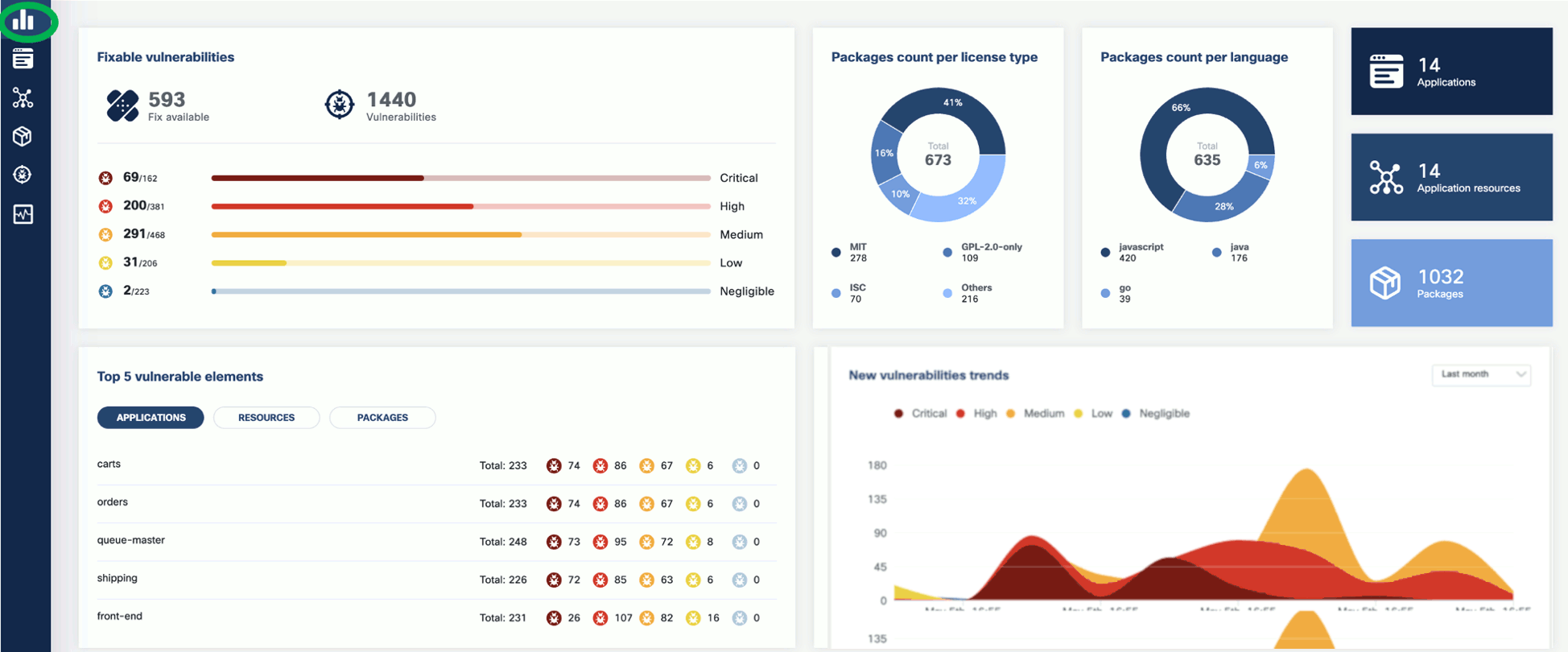

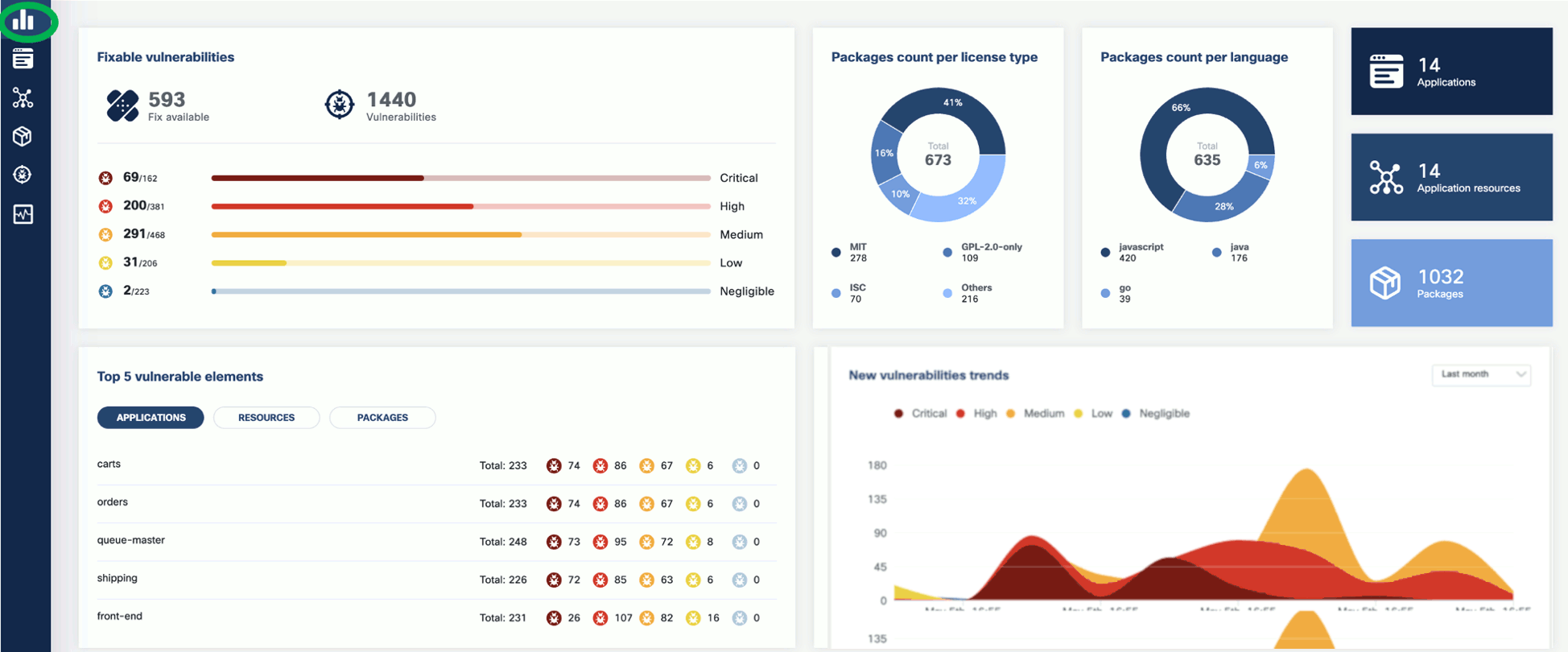

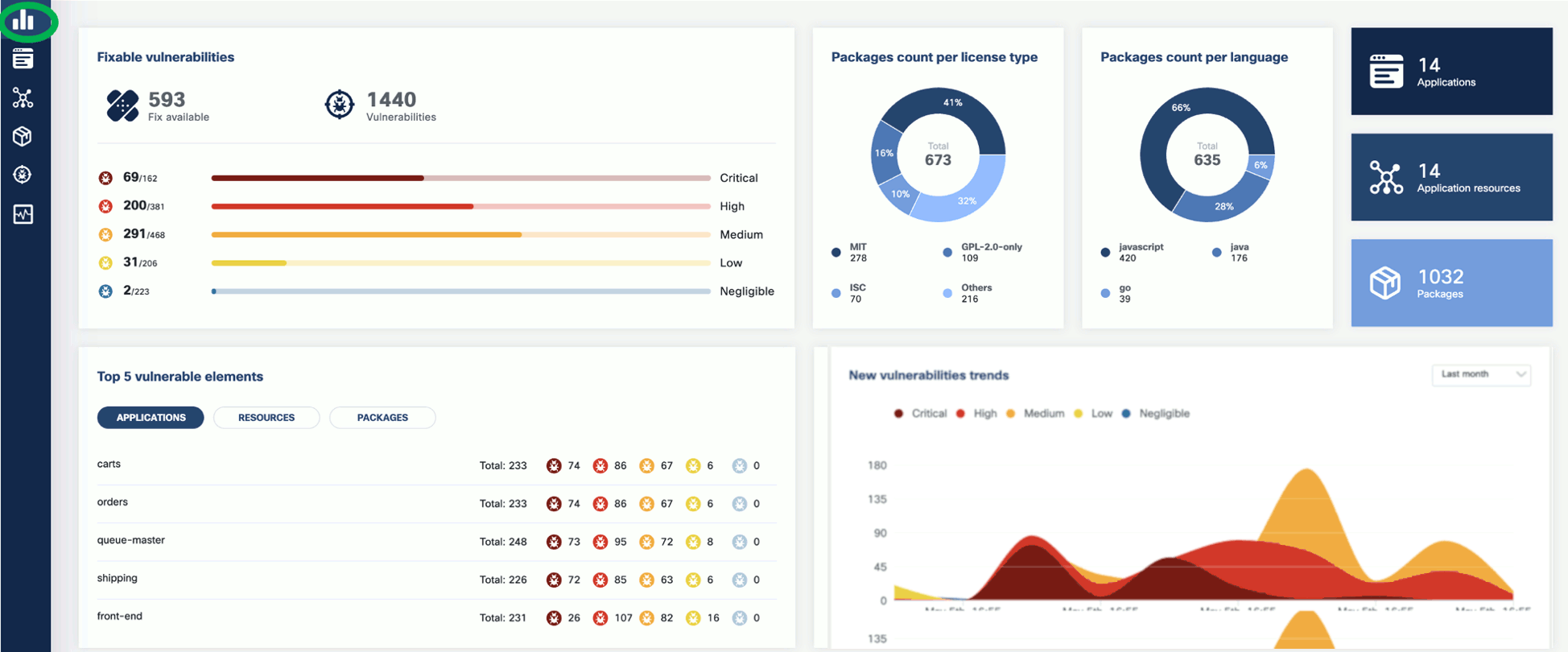

- Dashboard
  - Fixable vulnerabilities per severity
  - Top 5 vulnerable elements (applications, resources, packages)
  - New vulnerabilities trends
  - Package count per license type
  - Package count per programming language
  - General counters

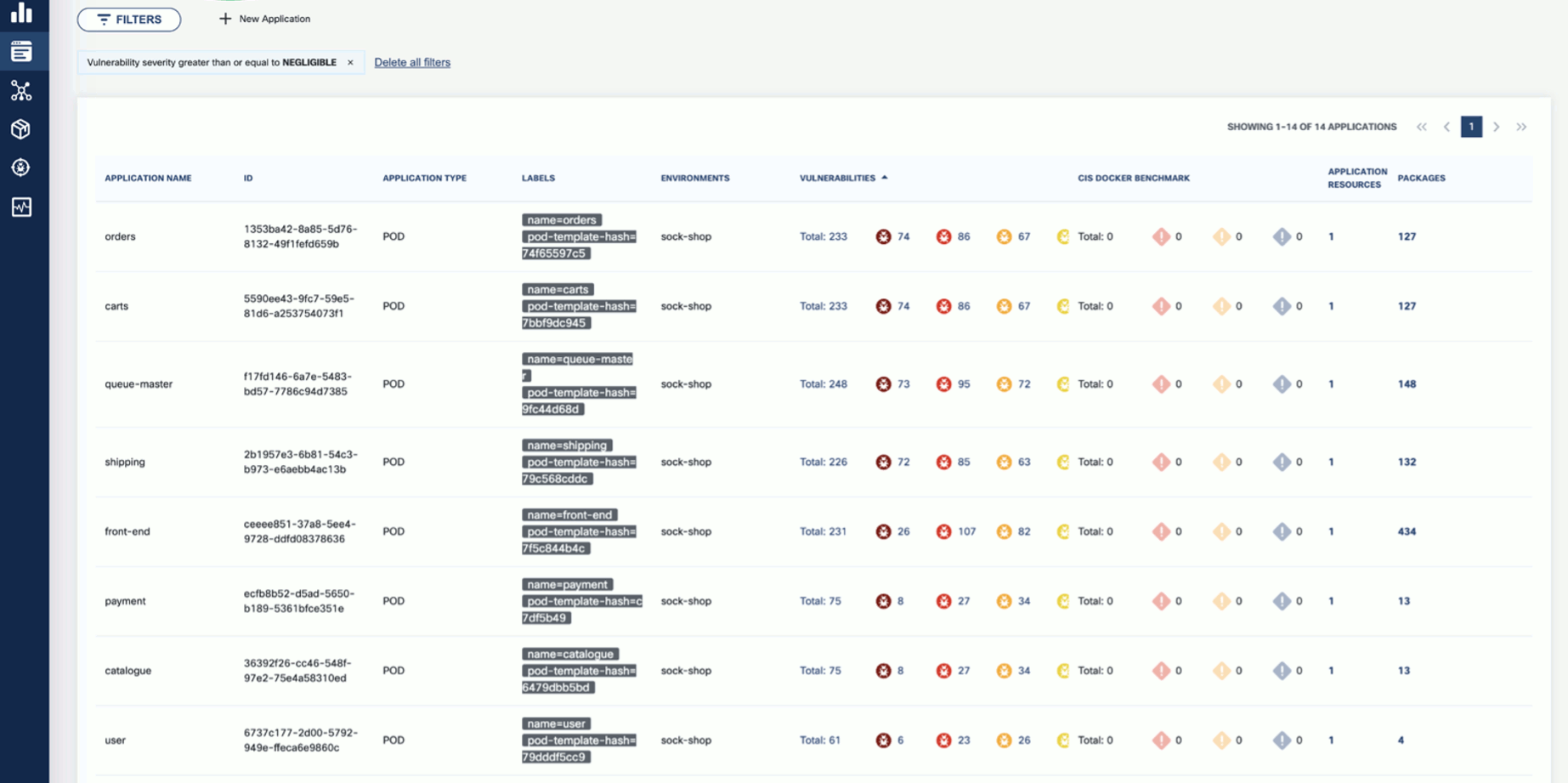

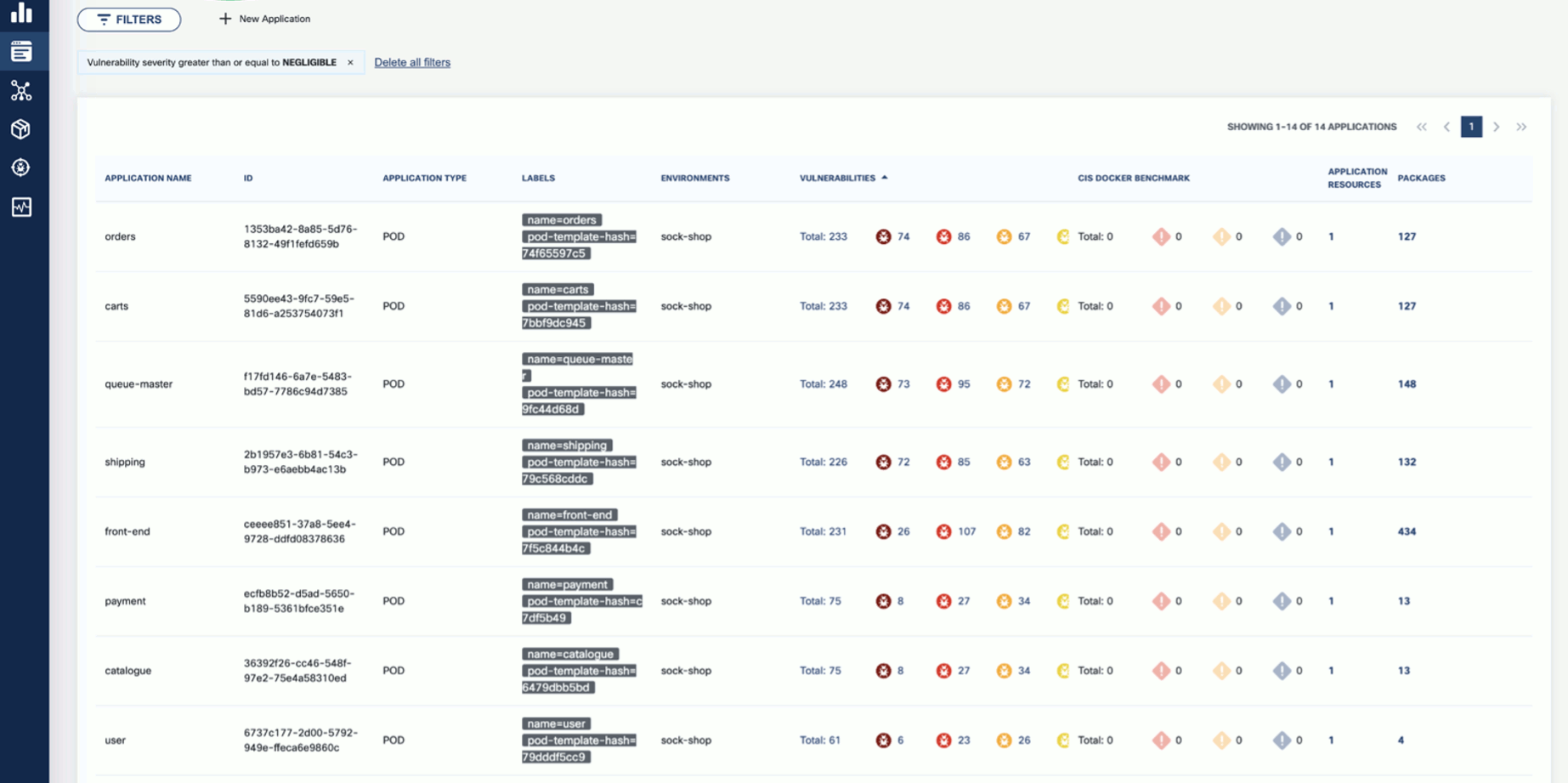

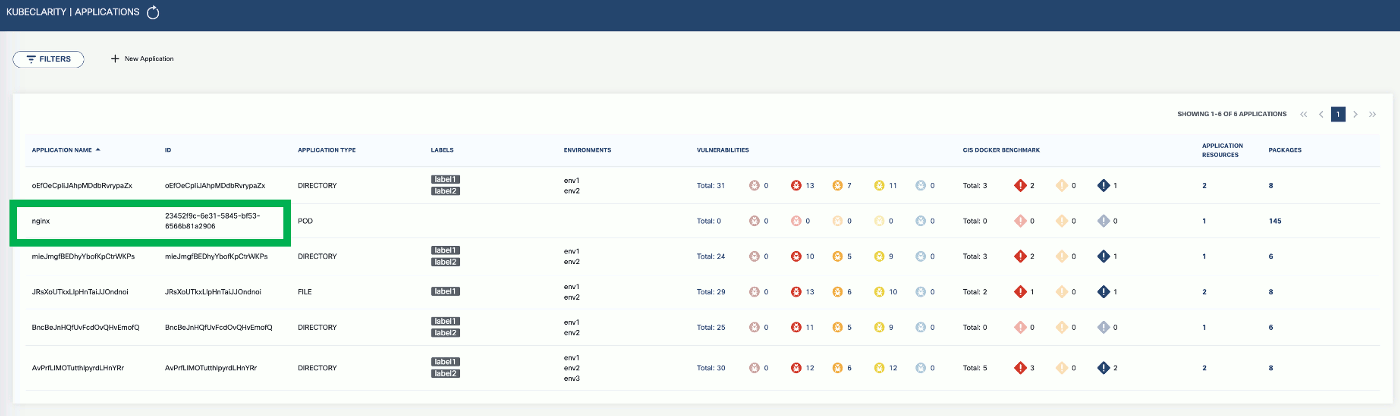

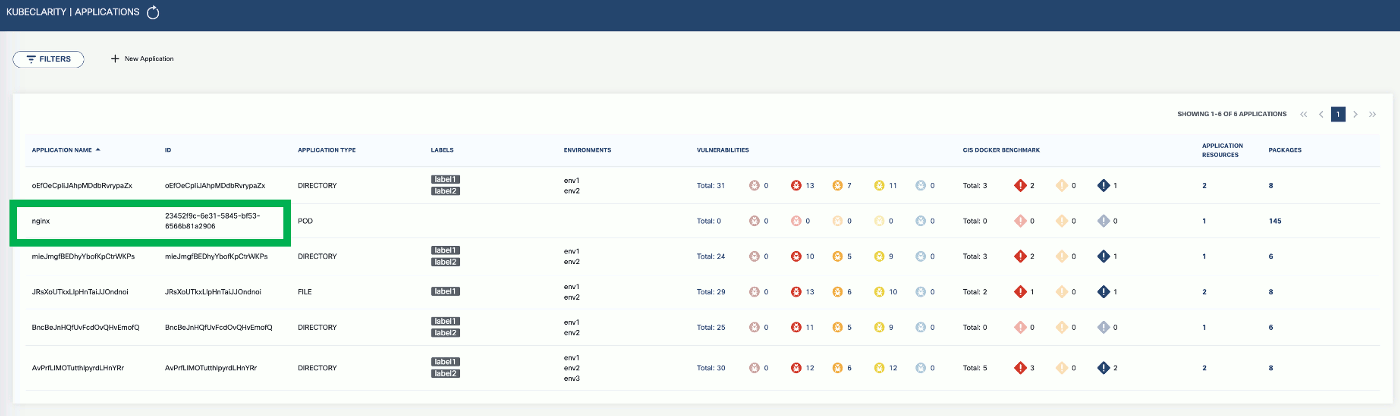

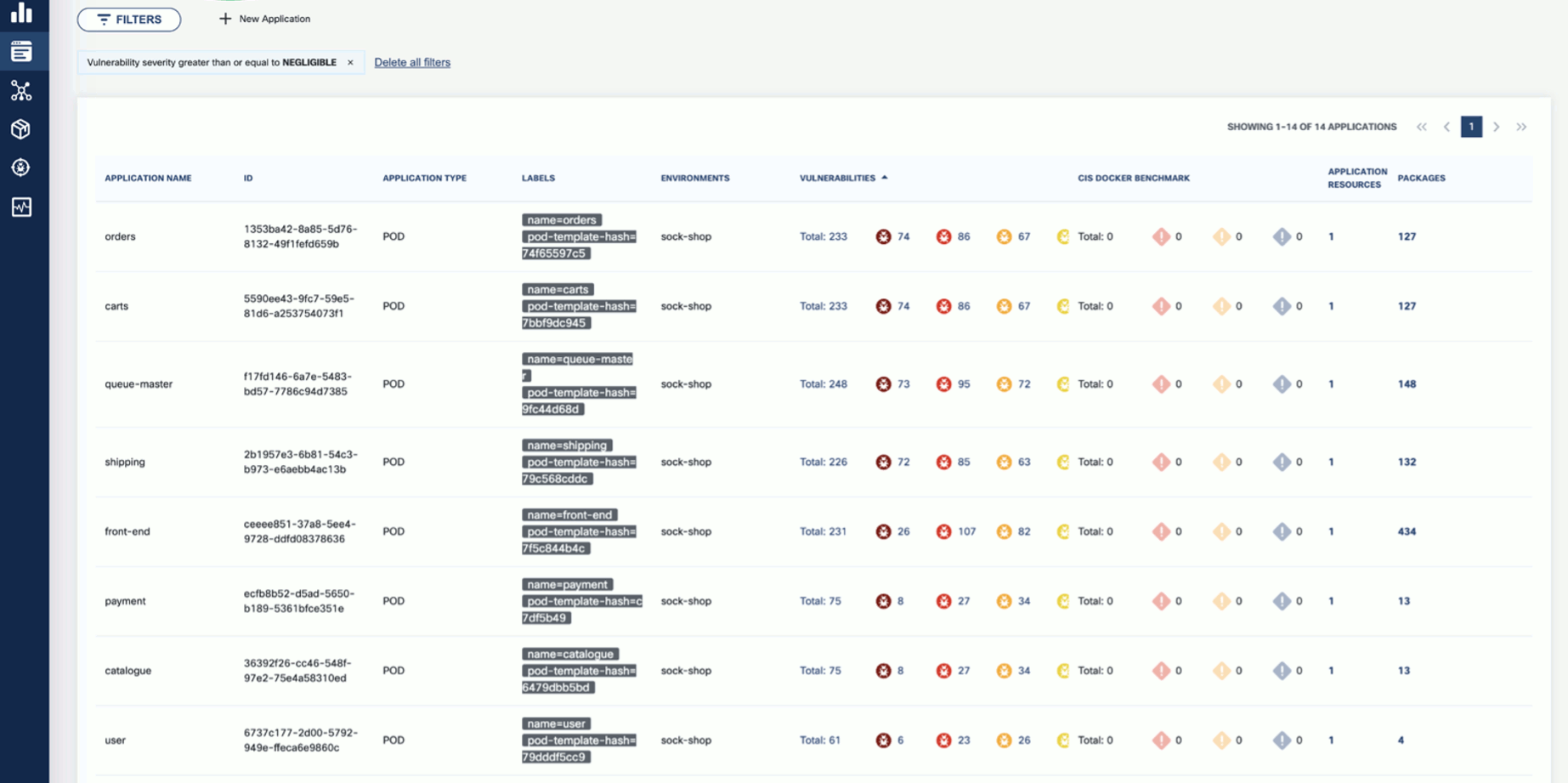

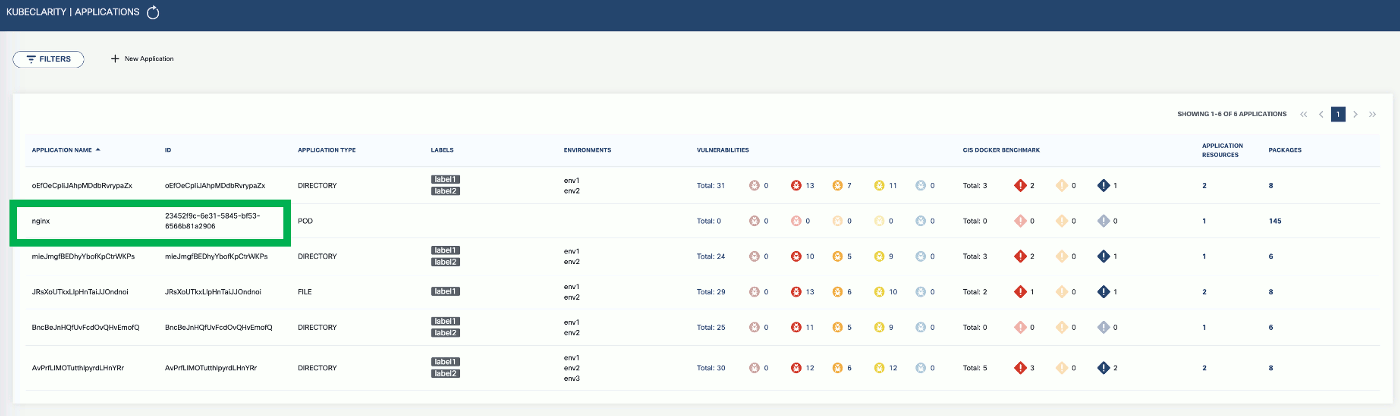

- Applications

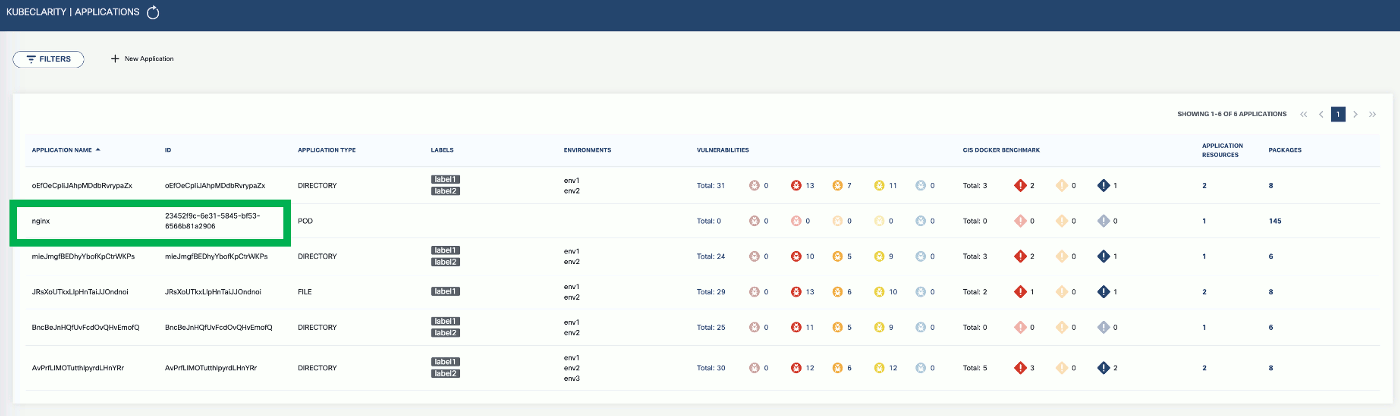

- Automatic application detection in K8s runtime

- Create/edit/delete applications

- Per application, navigation to related:

- Resources (images/directories)

- Packages

- Vulnerabilities

- Licenses in use by the resources

- Application Resources (images/directories)

- Per resource, navigation to related:

- Applications

- Packages

- Vulnerabilities

- Packages

- Per package, navigation to related:

- Applications

- Linkable list of resources and the detecting SBOM analyzers

- Vulnerabilities

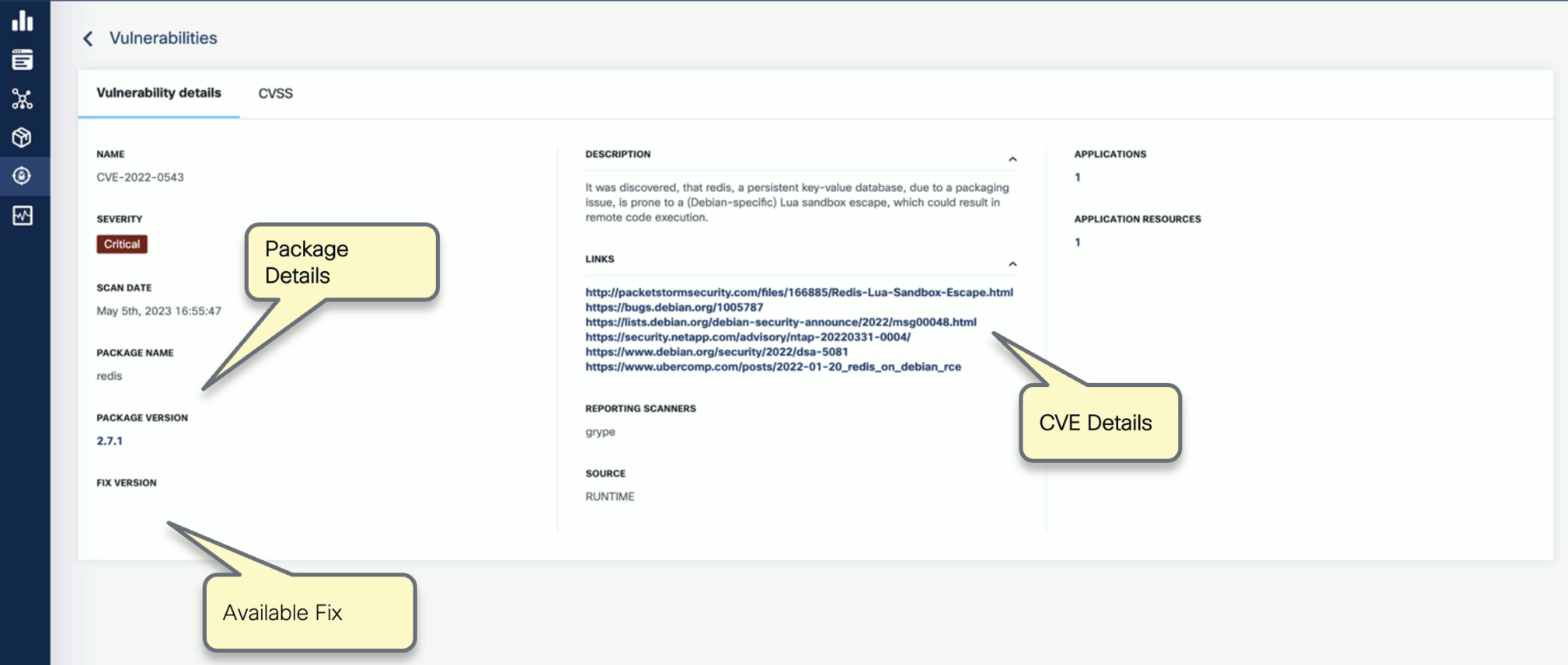

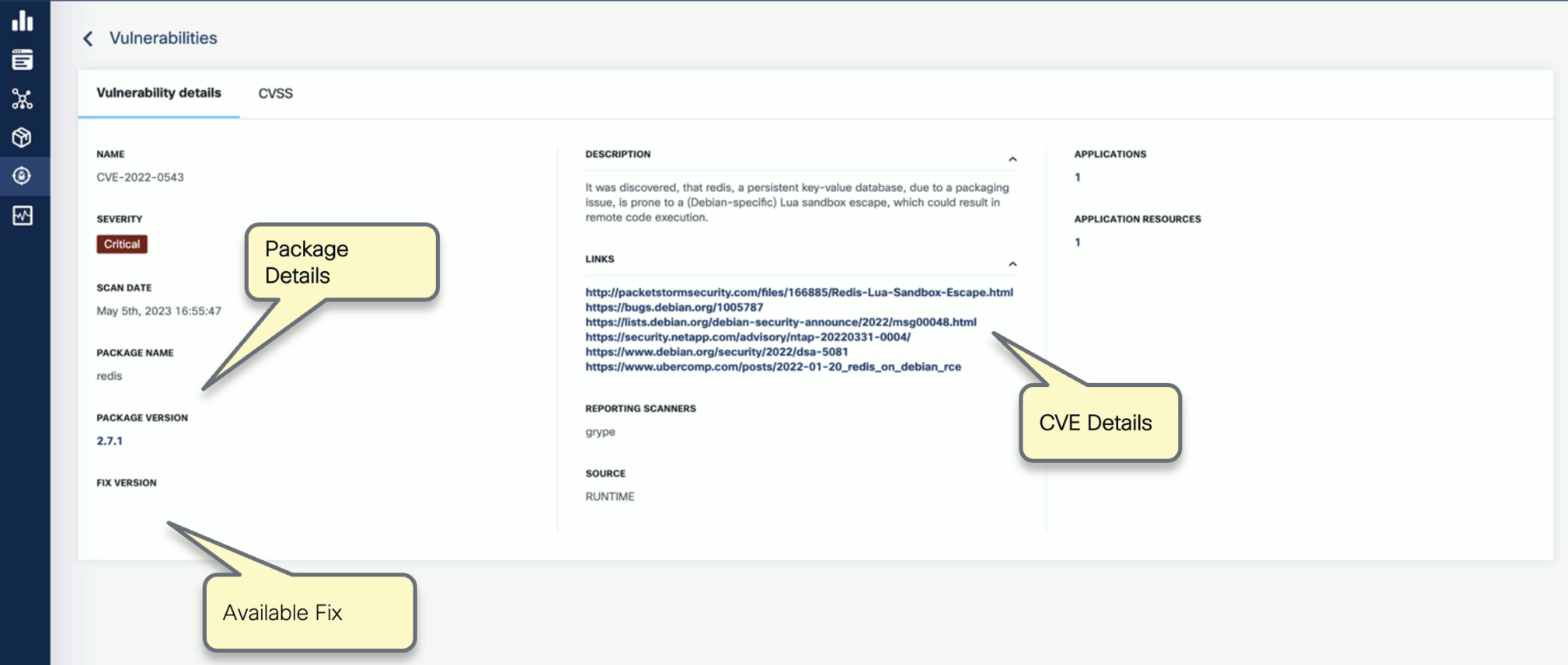

- Vulnerabilities
  - Per vulnerability, navigation to related:
    - Applications
    - Resources
    - List of detecting scanners

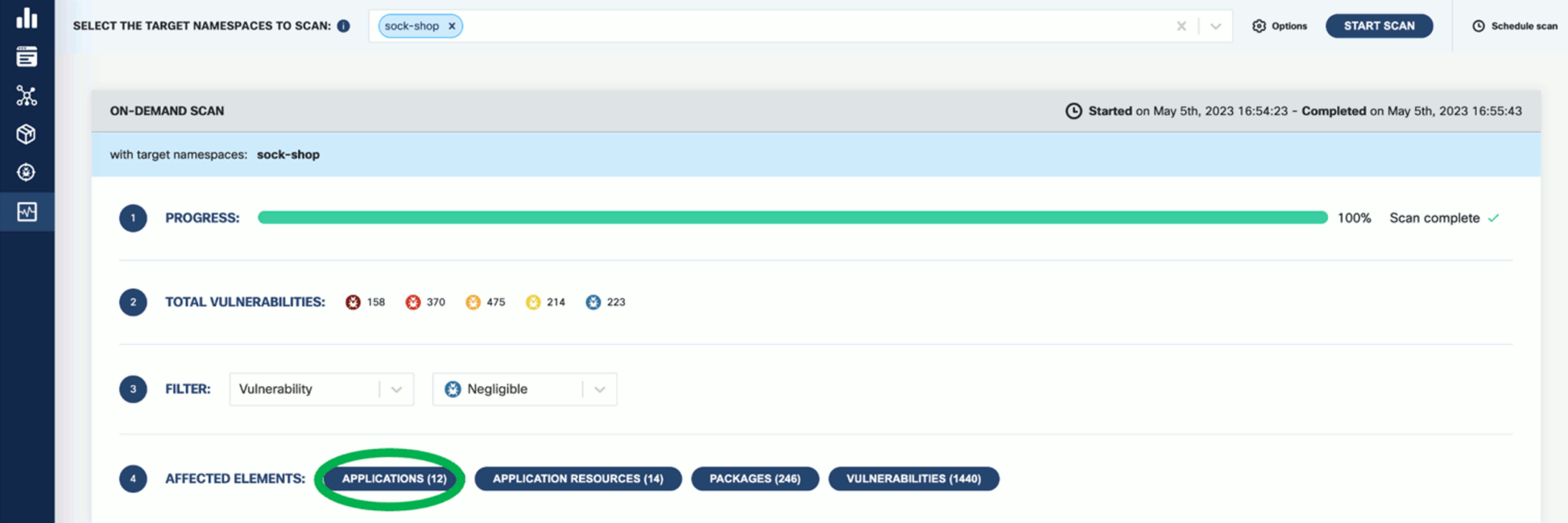

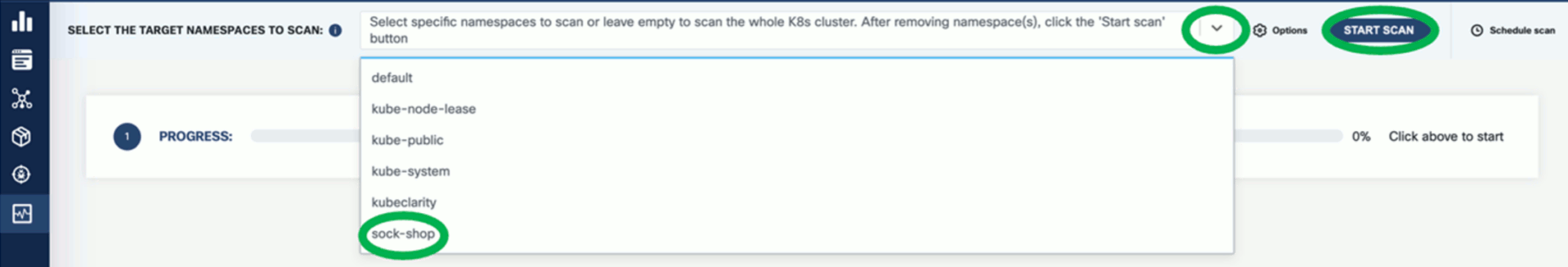

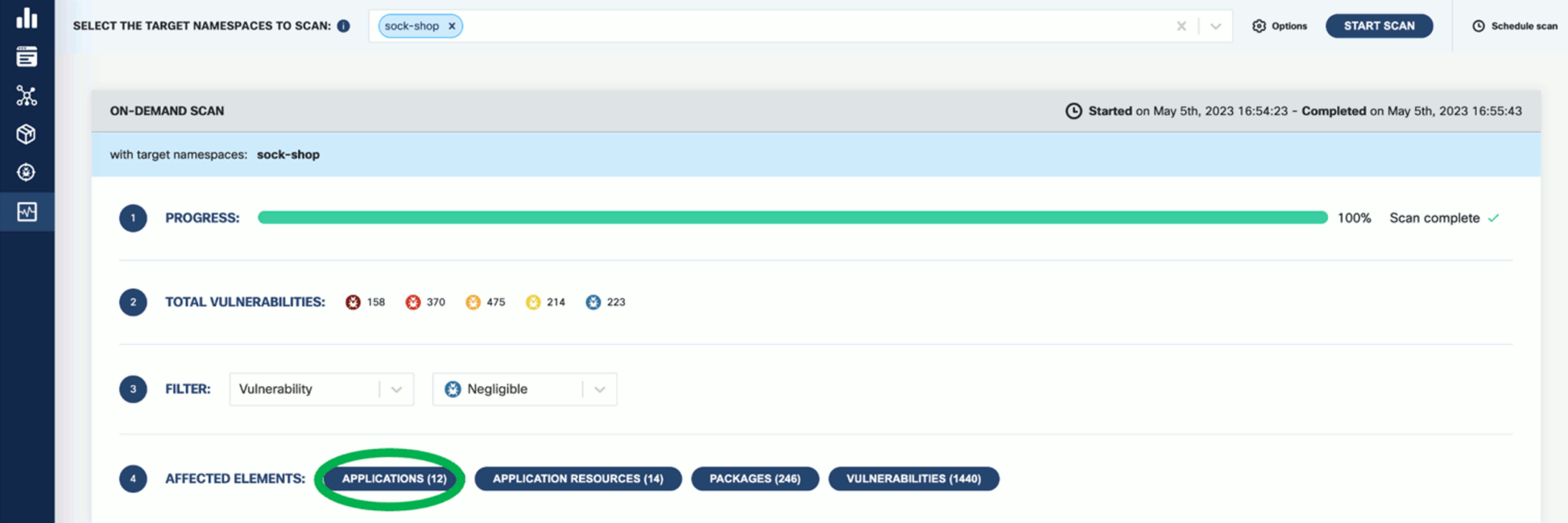

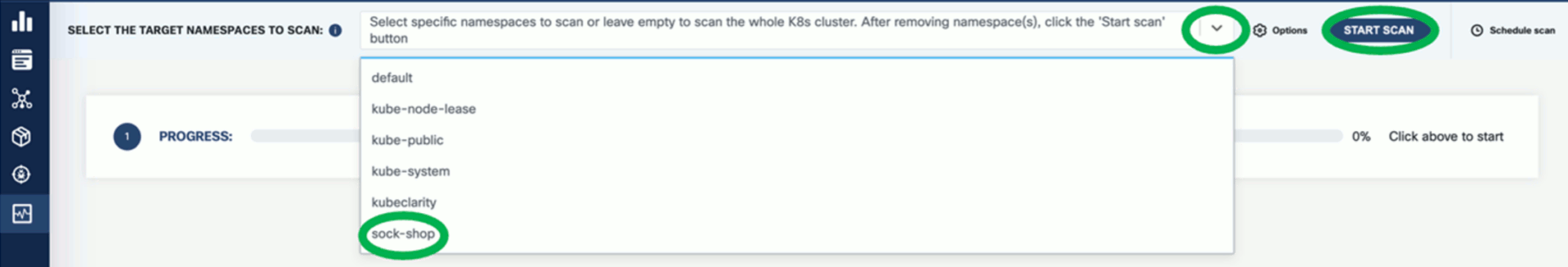

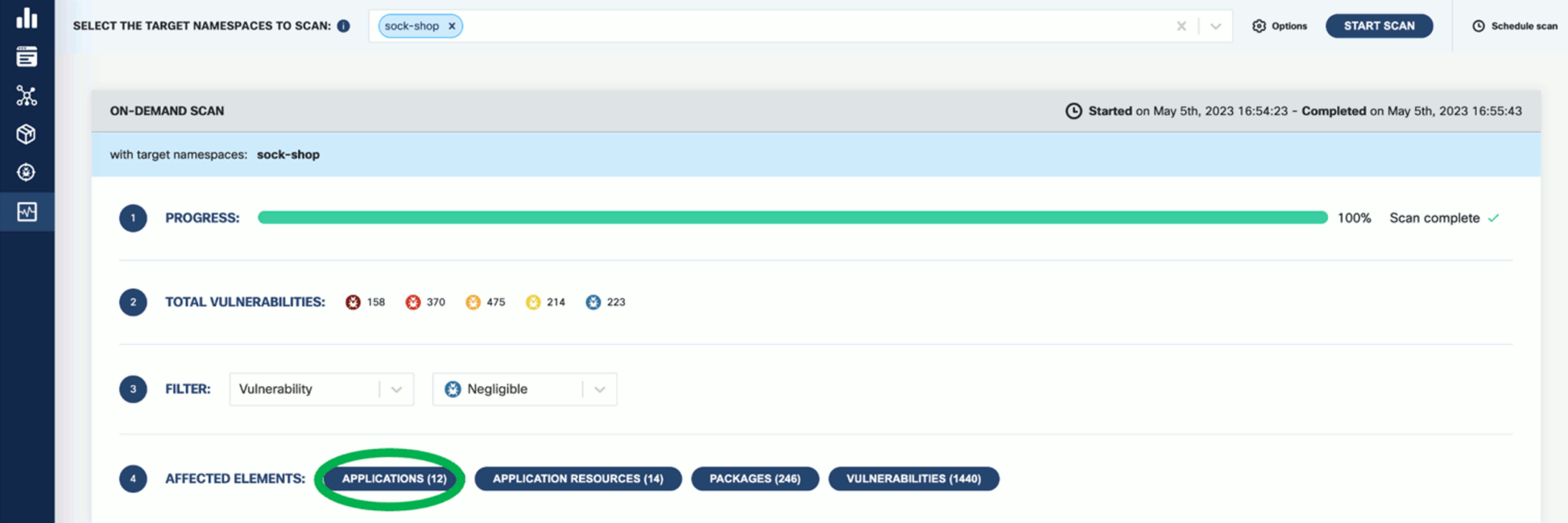

- K8s Runtime scan
  - On-demand or scheduled scanning
  - Automatic detection of target namespaces
  - Scan progress and result navigation per affected element (applications, resources, packages, vulnerabilities)
  - CIS Docker benchmark
- CLI (CI/CD)
  - SBOM generation using multiple integrated content analyzers (Syft, cyclonedx-gomod)
  - SBOM/image/directory vulnerability scanning using multiple integrated scanners (Grype, Dependency-track)
  - Merging of SBOM and vulnerabilities across different CI/CD stages
  - Export results to OpenClarity backend
- API

Runtime environment

The following table lists all supported environments and asset types that can be discovered and scanned by OpenClarity.

| Environment | Asset Type | Scope |
| --- | --- | --- |
| Docker | Containers, Container Images | Docker Daemon |
| Kubernetes | Containers, Container Images | Cluster |
| AWS | Virtual machines | All VMs accessible by credentials |
| Azure | Virtual machines | All VMs accessible by credentials |
| GCP | Virtual machines | All VMs accessible by credentials |
| Local (OS) | Containers, Container Images, Container Image Archives, Filesystem | All assets accessible by OS |

Scanning

The following table lists all supported scanners that can be used when performing a scan on an asset, such as a container image or a directory.

| Capability / scanner | VMClarity | KubeClarity | OpenClarity |
| --- | --- | --- | --- |
| SBOM generation and analysis | ✅ | ✅ | ✅ |
| Syft | ✅ | ✅ | ✅ |
| Trivy | ✅ | ✅ | ✅ |
| cyclonedx-gomod | ✅ | ✅ | ✅ |
| Windows Registry | ✅ | ❌ | ✅ |
| Vulnerability detection | ✅ | ✅ | ✅ |
| Grype | ✅ | ✅ | ✅ |
| Trivy | ✅ | ✅ | ✅ |
| Dependency Track | ❌ | ✅ | ❌ |
| Exploits | ✅ | ❌ | ✅ |
| ExploitDB | ✅ | ❌ | ✅ |
| Secrets | ✅ | ❌ | ✅ |
| Gitleaks | ✅ | ❌ | ✅ |
| Malware | ✅ | ❌ | ✅ |
| ClamAV | ✅ | ❌ | ✅ |
| Yara | ✅ | ❌ | ✅ |
| Misconfiguration | ✅ | ✅ | ✅ |
| Lynis | ✅ | ❌ | ✅ |
| CIS Docker Benchmark | ✅ | ✅ | ✅ |
| Rootkits | ✅ | ❌ | ✅ |
| Chkrootkit | ✅ | ❌ | ✅ |
| Plugins | ✅ | ❌ | ✅ |
| KICS | ✅ | ❌ | ✅ |

Integrated SBOM Generators and Vulnerability Scanners

OpenClarity content analyzer integrates with the following SBOM generators:

OpenClarity vulnerability scanner integrates with the following scanners:

4.1 - Kubernetes Scanning

For details on performing runtime scans with OpenClarity, see the First Tasks on the UI.

Asset Discovery

The OpenClarity stack supports the automatic discovery of assets in Kubernetes:

4.2 - Docker Scanning

For details on performing runtime scans with OpenClarity, see the First Tasks on the UI.

Asset Discovery

The OpenClarity stack supports the automatic discovery of assets in Docker:

| Asset types | Scope | Installation |
| --- | --- | --- |
| Docker containers and images | Local Docker daemon | Deploy on Docker |

4.3 - AWS Scanning

For details on performing runtime scans with OpenClarity, see the First Tasks on the UI.

Asset Discovery

The OpenClarity stack supports the automatic discovery of assets in AWS:

| Asset types | Scope | Installation |
| --- | --- | --- |
| Virtual machines (EC2 instances) | Account (all regions) | Deploy on AWS |

4.4 - GCP Scanning

For details on performing runtime scans with OpenClarity, see the First Tasks on the UI.

Asset Discovery

The OpenClarity stack supports the automatic discovery of assets in GCP:

| Asset types | Scope | Installation |
| --- | --- | --- |
| Virtual machines | Project | Deploy on GCP |

4.5 - Azure Scanning

For details on performing runtime scans with OpenClarity, see the First Tasks on the UI.

Asset Discovery

The OpenClarity stack supports the automatic discovery of assets in Azure:

4.6 - Scanner Plugins

Plugins provide additional scanning capabilities to the OpenClarity ecosystem.

Project structure:

- runner - Provides necessary logic to manage scanner plugins in OpenClarity.

- sdk - Language-specific libraries, templates, and examples to aid with the implementation of scanner plugins.

- store - Collection of available plugins that can be directly used in OpenClarity.

Requirements

Scanner plugins are distributed as containers and require Docker Engine on the host that runs the actual scanning via the OpenClarity CLI.

Support

List of supported environments:

- AWS

- GCP

- Azure

- Docker

List of unsupported environments:

- Kubernetes - We plan on adding plugin support to Kubernetes once we have dealt with all the security considerations.

Note: Plugin support has been tested against OpenClarity installation artifacts for the given environments.

Usage

You can start using plugins via the Plugins Store.

For example, you can pass the .families.yaml scan config file defined below to the OpenClarity CLI scan command.

This configuration uses the KICS scanner to scan the /tmp directory for infrastructure-as-code (IaC) security misconfigurations. See the KICS documentation for further information.

# --- .families.yaml
plugins:
  enabled: true
  scanners_list:
    - "kics"
  inputs:
    - input: "/tmp"
      input_type: "rootfs"
  scanners_config:
    kics:
      image_name: "ghcr.io/openclarity/openclarity-plugin-kics:latest"
      config: "{}"

SDKs

You can use one of the available SDKs in your language of choice to quickly develop scanner plugins for OpenClarity.

List of supported languages:

4.7 - Cost Estimation

Available in version 0.6.0 and later. Currently, this feature is exclusively available on AWS.

You can get a preliminary cost estimation before initiating a security scan with OpenClarity. This helps you plan and budget your security assessments more effectively, ensuring that you have a clear understanding of the financial implications before taking action.

To start a new estimation, complete the following steps.

- Create a new resource called ScanEstimation in the API server. For example, if the body of your POST request is the following JSON, it estimates an SBOM scan on your workload with ID i-123456789.

Use the same scanTemplate in the ScanEstimation as in the ScanConfiguration.

{
  "assetIDs": ["i-123456789"],
  "state": {
    "state": "Pending"
  },
  "scanTemplate": {
    "scope": "contains(assetInfo.tags, '{\"key\":\"scanestimation\",\"value\":\"test\"}')",
    "assetScanTemplate": {
      "scanFamiliesConfig": {
        "sbom": {
          "enabled": true
        }
      }
    }
  }
}

- Retrieve the object from the <apiserver IP address>:8888/scanEstimations endpoint and wait for its state to become Done. The totalScanCost field of the summary property shows the estimated cost of your scan in USD:

{
  "assetIDs": [
    "d337bd07-b67f-4cf0-ac43-f147fce7d1b2"
  ],
  "assetScanEstimations": [
    {
      "id": "23082244-0fb6-4aca-8a9b-02417dfc95f8"
    }
  ],
  "deleteAfter": "2023-10-08T17:33:52.512829081Z",
  "endTime": "2023-10-08T15:33:52.512829081Z",
  "id": "962e3a10-05fb-4c5d-a773-1198231f3103",
  "revision": 5,
  "scanTemplate": {
    "assetScanTemplate": {
      "scanFamiliesConfig": {
        "sbom": {
          "enabled": true
        }
      }
    },
    "scope": "contains(assetInfo.tags, '{\"key\":\"scanestimation\",\"value\":\"test\"}')"
  },
  "startTime": "2023-10-08T15:33:37.513073573Z",
  "state": {
    "state": "Done",
    "stateMessage": "1 succeeded, 0 failed out of 1 total asset scan estimations",
    "stateReason": "Success"
  },
  "summary": {
    "jobsCompleted": 1,
    "jobsLeftToRun": 0,
    "totalScanCost": 0.0006148403,
    "totalScanSize": 3,
    "totalScanTime": 12
  },
  "ttlSecondsAfterFinished": 7200
}
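You can script the two estimation steps end to end. The Python sketch below builds the POST body from the first step, including the tag-based scope expression. It then polls the estimation until its state is Done and returns summary.totalScanCost. The helper names are hypothetical, and the URL layout is an assumption. The text above names only the <apiserver IP address>:8888/scanEstimations endpoint, so check your API server for the exact base path and for how individual estimations are addressed.

```python
from __future__ import annotations

import json
import time
import urllib.request
from typing import Callable, Optional


def tag_scope(key: str, value: str) -> str:
    """Scope expression matching assets that carry the tag key=value."""
    tag = json.dumps({"key": key, "value": value}, separators=(",", ":"))
    return f"contains(assetInfo.tags, '{tag}')"


def estimation_body(asset_ids: list[str], scope: str, families: dict) -> dict:
    """POST body for a new ScanEstimation, same shape as the first example."""
    return {
        "assetIDs": asset_ids,
        "state": {"state": "Pending"},
        "scanTemplate": {
            "scope": scope,
            "assetScanTemplate": {"scanFamiliesConfig": families},
        },
    }


def http_json(url: str, body: Optional[dict] = None) -> dict:
    """GET url, or POST body as JSON when given, and decode the JSON response."""
    data = None if body is None else json.dumps(body).encode("utf-8")
    req = urllib.request.Request(url, data=data,
                                 headers={"Content-Type": "application/json"},
                                 method="GET" if body is None else "POST")
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)


def wait_for_cost(fetch: Callable[[], dict], interval: float = 5.0,
                  timeout: float = 600.0,
                  sleep: Callable[[float], None] = time.sleep) -> float:
    """Poll until state.state is "Done", then return summary.totalScanCost (USD)."""
    waited = 0.0
    while True:
        estimation = fetch()
        state = estimation.get("state", {}).get("state")
        if state == "Done":
            return float(estimation["summary"]["totalScanCost"])
        if waited >= timeout:
            # Every other state is treated as "still running"; inspect
            # stateReason and stateMessage on the object if this fires.
            raise TimeoutError(f"estimation still in state {state!r} after {timeout}s")
        sleep(interval)
        waited += interval
```

For example, you might POST estimation_body(...) with http_json to your scanEstimations URL, read the id from the response, and pass wait_for_cost a lambda that fetches that estimation.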

5 - Configuration

API server

| Environment Variable | Required | Default | Values | Description |
| --- | --- | --- | --- | --- |
| OPENCLARITY_APISERVER_LISTEN_ADDRESS | | 0.0.0.0:8888 | | Address of the API Server to send requests to. Example: localhost:8890 |
| OPENCLARITY_APISERVER_HEALTHCHECK_ADDRESS | | 0.0.0.0:8081 | | Bind address used by the API Server for the healthz endpoints. Example: localhost:8082, which makes the health endpoints available at localhost:8082/healthz/live and localhost:8082/healthz/ready. |
| OPENCLARITY_APISERVER_DATABASE_DRIVER | yes | LOCAL | LOCAL, POSTGRES | Database driver type. LOCAL = SQLite, POSTGRES = PostgreSQL. |
| OPENCLARITY_APISERVER_DB_NAME | If OPENCLARITY_APISERVER_DATABASE_DRIVER is POSTGRES | | | Database name. |
| OPENCLARITY_APISERVER_DB_USER | If OPENCLARITY_APISERVER_DATABASE_DRIVER is POSTGRES | | | Database user. |
| OPENCLARITY_APISERVER_DB_PASS | If OPENCLARITY_APISERVER_DATABASE_DRIVER is POSTGRES | | | Database user password. |
| OPENCLARITY_APISERVER_DB_HOST | If OPENCLARITY_APISERVER_DATABASE_DRIVER is POSTGRES | | | Database host, e.g. postgresql. |
| OPENCLARITY_APISERVER_DB_PORT | If OPENCLARITY_APISERVER_DATABASE_DRIVER is POSTGRES | | | Database port, e.g. 5432. |
| OPENCLARITY_APISERVER_ENABLE_DB_INFO_LOGS | | false | | Whether to enable database info logs. |
| OPENCLARITY_APISERVER_LOCAL_DB_PATH | If OPENCLARITY_APISERVER_DATABASE_DRIVER is LOCAL | | | Path of the database when LOCAL is used as the database driver, e.g. /data/openclarity.db. |
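The Required column encodes a simple rule. The PostgreSQL connection variables are needed only with the POSTGRES driver, and OPENCLARITY_APISERVER_LOCAL_DB_PATH only with LOCAL. The Python sketch below is a hypothetical pre-deployment check of that rule, not the API server's own validation. It falls back to LOCAL when the driver is unset, matching the Default column.

```python
from __future__ import annotations

from typing import Mapping

PREFIX = "OPENCLARITY_APISERVER_"
POSTGRES_REQUIRED = ("DB_NAME", "DB_USER", "DB_PASS", "DB_HOST", "DB_PORT")


def missing_db_settings(env: Mapping[str, str]) -> list[str]:
    """Return the required database variables that are unset for the chosen driver."""
    driver = env.get(PREFIX + "DATABASE_DRIVER", "LOCAL")
    if driver == "POSTGRES":
        required = [PREFIX + name for name in POSTGRES_REQUIRED]
    elif driver == "LOCAL":
        required = [PREFIX + "LOCAL_DB_PATH"]
    else:
        raise ValueError(f"unsupported database driver: {driver!r}")
    # Treat empty strings the same as unset variables.
    return [name for name in required if not env.get(name)]


if __name__ == "__main__":
    import os
    missing = missing_db_settings(os.environ)
    print("database configuration complete" if not missing else f"missing: {missing}")
```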

Orchestrator

| Environment Variable | Required | Default | Values | Description |
| --- | --- | --- | --- | --- |
| OPENCLARITY_ORCHESTRATOR_PROVIDER | yes | aws | aws, azure, gcp, docker | Provider used for asset discovery and scans. |
| OPENCLARITY_ORCHESTRATOR_APISERVER_ADDRESS | yes | | | The URL for the API Server used by the Orchestrator to interact with the API. Example: https://apiserver.example.com:8888/api |
| OPENCLARITY_ORCHESTRATOR_HEALTHCHECK_ADDRESS | | :8082 | | Bind address used by the Orchestrator for the healthz endpoints. Example: localhost:8082, which makes the health endpoints available at localhost:8082/healthz/live and localhost:8082/healthz/ready. |
| OPENCLARITY_ORCHESTRATOR_DISCOVERY_INTERVAL | | 2m | | How frequently asset discovery is performed. |
| OPENCLARITY_ORCHESTRATOR_CONTROLLER_STARTUP_DELAY | | 7s | | The time interval to wait between controller startups. Do NOT change this parameter unless you know what you are doing. |
| OPENCLARITY_ORCHESTRATOR_ASSETSCAN_WATCHER_POLL_PERIOD | | 15s | | How frequently to poll the API for events related to AssetScan objects. |
| OPENCLARITY_ORCHESTRATOR_ASSETSCAN_WATCHER_RECONCILE_TIMEOUT | | 5m | | Time period that reconciliation of an AssetScan event is allowed to run. |
| OPENCLARITY_ORCHESTRATOR_ASSETSCAN_WATCHER_ABORT_TIMEOUT | | 10m | | Time period to wait for the Scanner to gracefully stop an ongoing scan for an AssetScan before setting the state of the AssetScan to Failed. |
| OPENCLARITY_ORCHESTRATOR_ASSETSCAN_WATCHER_DELETE_POLICY | | Always | Always, Never, OnSuccess | Whether to delete the resources created for scanning (disk snapshots, container snapshots/images) based on the status of the AssetScan. Always: resources are deleted whether the AssetScan succeeded or failed. Never: cleanup of the resources created for scanning is skipped. OnSuccess: resources are cleaned up only if the AssetScan succeeded. |
| OPENCLARITY_ORCHESTRATOR_ASSETSCAN_WATCHER_SCANNER_CONTAINER_IMAGE | yes | | | The Scanner container image used for running scans. |
| OPENCLARITY_ORCHESTRATOR_ASSETSCAN_WATCHER_SCANNER_FRESHCLAM_MIRROR | | | | |
| OPENCLARITY_ORCHESTRATOR_ASSETSCAN_WATCHER_SCANNER_APISERVER_ADDRESS | | | | The URL for the API Server used by the Scanner to interact with the API. Example: https://apiserver.example.com:8888/api |
| OPENCLARITY_ORCHESTRATOR_ASSETSCAN_WATCHER_SCANNER_EXPLOITSDB_ADDRESS | | | | The URL for the ExploitsDB Server used by the Scanner. |
| OPENCLARITY_ORCHESTRATOR_ASSETSCAN_WATCHER_SCANNER_TRIVY_SERVER_ADDRESS | | | | The URL for the Trivy Server used by the Scanner. |
| OPENCLARITY_ORCHESTRATOR_ASSETSCAN_WATCHER_SCANNER_TRIVY_SERVER_TIMEOUT | | 5m | | |
| OPENCLARITY_ORCHESTRATOR_ASSETSCAN_WATCHER_SCANNER_GRYPE_SERVER_ADDRESS | | | | The URL for the Grype Server used by the Scanner. |
| OPENCLARITY_ORCHESTRATOR_ASSETSCAN_WATCHER_SCANNER_GRYPE_SERVER_TIMEOUT | | 2m | | |
| OPENCLARITY_ORCHESTRATOR_ASSETSCAN_WATCHER_SCANNER_YARA_RULE_SERVER_ADDRESS | | | | The URL for the Yara Rule Server used by the Scanner. |
| OPENCLARITY_ORCHESTRATOR_SCANCONFIG_WATCHER_POLL_PERIOD | | | | How frequently the ScanConfig Watcher polls the API for events related to ScanConfig objects. |
| OPENCLARITY_ORCHESTRATOR_SCANCONFIG_WATCHER_RECONCILE_TIMEOUT | | | | Time period that reconciliation of a ScanConfig event is allowed to run. |
| OPENCLARITY_ORCHESTRATOR_SCAN_WATCHER_POLL_PERIOD | | | | How frequently the Scan Watcher polls the API for events related to Scan objects. |
| OPENCLARITY_ORCHESTRATOR_SCAN_WATCHER_RECONCILE_TIMEOUT | | | | Time period that reconciliation of a Scan event is allowed to run. |
| OPENCLARITY_ORCHESTRATOR_SCAN_WATCHER_SCAN_TIMEOUT | | | | Time period to wait for the Scan to finish before marking its state as Failed with Timeout as the reason. |
| OPENCLARITY_ORCHESTRATOR_ASSETSCAN_PROCESSOR_POLL_PERIOD | | | | How frequently the AssetScan Processor polls the API for events related to AssetScan objects. |
| OPENCLARITY_ORCHESTRATOR_ASSETSCAN_PROCESSOR_RECONCILE_TIMEOUT | | | | Time period that processing of an AssetScan result is allowed to run. |
| OPENCLARITY_ORCHESTRATOR_ASSETSCAN_ESTIMATION_WATCHER_POLL_PERIOD | | 5s | | |
| OPENCLARITY_ORCHESTRATOR_ASSETSCAN_ESTIMATION_WATCHER_RECONCILE_TIMEOUT | | 15s | | |
| OPENCLARITY_ORCHESTRATOR_SCAN_ESTIMATION_WATCHER_POLL_PERIOD | | 5s | | |
| OPENCLARITY_ORCHESTRATOR_SCAN_ESTIMATION_WATCHER_RECONCILE_TIMEOUT | | 2m | | |
| OPENCLARITY_ORCHESTRATOR_SCAN_ESTIMATION_WATCHER_ESTIMATION_TIMEOUT | | 48h | | |
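The three OPENCLARITY_ORCHESTRATOR_ASSETSCAN_WATCHER_DELETE_POLICY values reduce to a small decision based on the AssetScan outcome. This Python sketch restates the table's description as code. The should_clean_up function is a hypothetical helper, not the Orchestrator's implementation.

```python
from enum import Enum


class DeletePolicy(str, Enum):
    ALWAYS = "Always"
    NEVER = "Never"
    ON_SUCCESS = "OnSuccess"


def should_clean_up(policy: str, scan_succeeded: bool) -> bool:
    """Decide whether to delete snapshots/images created for an AssetScan."""
    p = DeletePolicy(policy)  # raises ValueError for values outside the table
    if p is DeletePolicy.ALWAYS:
        return True  # clean up regardless of the outcome
    if p is DeletePolicy.NEVER:
        return False  # keep resources, e.g. for debugging
    return scan_succeeded  # OnSuccess: keep resources of failed scans
```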

Provider

AWS

| Environment Variable | Required | Default | Description |
| --- | --- | --- | --- |
| OPENCLARITY_AWS_REGION | yes | | Region where the Scanner instance needs to be created |
| OPENCLARITY_AWS_SUBNET_ID | yes | | SubnetID where the Scanner instance needs to be created |
| OPENCLARITY_AWS_SECURITY_GROUP_ID | yes | | SecurityGroupId which needs to be attached to the Scanner instance |
| OPENCLARITY_AWS_KEYPAIR_NAME | | | Name of the SSH KeyPair to use for Scanner instance launch |
| OPENCLARITY_AWS_SCANNER_INSTANCE_ARCHITECTURE | | x86_64 | Architecture to be used for the Scanner instance. The Provider uses this value to look up instance details in OPENCLARITY_AWS_SCANNER_INSTANCE_ARCHITECTURE_TO_TYPE_MAPPING and OPENCLARITY_AWS_SCANNER_INSTANCE_ARCHITECTURE_TO_AMI_MAPPING. |
| OPENCLARITY_AWS_SCANNER_INSTANCE_ARCHITECTURE_TO_TYPE_MAPPING | | x86_64:t3.large,arm64:t4g.large | Comma-separated list of architecture:instance_type pairs used for the OpenClarity Scanner instance |
| OPENCLARITY_AWS_SCANNER_INSTANCE_ARCHITECTURE_TO_AMI_MAPPING | | x86_64:ami-03f1cc6c8b9c0b899,arm64:ami-06972d841707cc4cf | Comma-separated list of architecture:ami_id pairs used for the OpenClarity Scanner instance |
| OPENCLARITY_AWS_BLOCK_DEVICE_NAME | | xvdh | Block device name used for attaching the Scanner volume to the Scanner instance |
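The Provider resolves the scanner instance type and AMI by looking up OPENCLARITY_AWS_SCANNER_INSTANCE_ARCHITECTURE in the two comma-separated mappings. The Python sketch below reproduces that lookup using the default values from the table. The parse_mapping and scanner_instance functions are hypothetical helpers, and the provider's actual parsing (for example, of whitespace) may differ.

```python
from __future__ import annotations

DEFAULT_TYPES = "x86_64:t3.large,arm64:t4g.large"
DEFAULT_AMIS = "x86_64:ami-03f1cc6c8b9c0b899,arm64:ami-06972d841707cc4cf"


def parse_mapping(value: str) -> dict[str, str]:
    """Parse 'arch:item,arch:item' into {arch: item}."""
    result: dict[str, str] = {}
    for pair in value.split(","):
        pair = pair.strip()
        if not pair:
            continue  # tolerate trailing commas
        arch, sep, item = pair.partition(":")
        if not sep or not arch.strip() or not item.strip():
            raise ValueError(f"malformed architecture pair: {pair!r}")
        result[arch.strip()] = item.strip()
    return result


def scanner_instance(arch: str = "x86_64", types: str = DEFAULT_TYPES,
                     amis: str = DEFAULT_AMIS) -> tuple[str, str]:
    """Return (instance_type, ami_id) for the requested architecture."""
    type_map, ami_map = parse_mapping(types), parse_mapping(amis)
    try:
        return type_map[arch], ami_map[arch]
    except KeyError:
        raise ValueError(f"no instance type/AMI mapping for {arch!r}") from None
```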

UI backend

| Environment Variable | Required | Default | Values | Description |
| --- | --- | --- | --- | --- |
| OPENCLARITY_UIBACKEND_APISERVER_ADDRESS | yes | | | The URL for the API Server used by the UI backend to interact with the API. Example: https://apiserver.example.com:8888/api |
| OPENCLARITY_UIBACKEND_LISTEN_ADDRESS | | 0.0.0.0:8890 | | Address of the UI backend to send requests to. Example: localhost:8890 |
| OPENCLARITY_UIBACKEND_HEALTHCHECK_ADDRESS | | 0.0.0.0:8083 | | Bind address used by the UI backend for the healthz endpoints. Example: localhost:8083, which makes the health endpoints available at localhost:8083/healthz/live and localhost:8083/healthz/ready. |
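Each component exposes /healthz/live and /healthz/ready on its *_HEALTHCHECK_ADDRESS. The Python sketch below derives those URLs from a bind address and probes them. The helpers are hypothetical. The sketch assumes plain HTTP, and it probes via localhost when the bind address is a wildcard (0.0.0.0, or an empty host as in the Orchestrator's :8082 default).

```python
from __future__ import annotations

import urllib.request


def health_urls(bind_address: str) -> dict[str, str]:
    """Derive liveness/readiness URLs from a *_HEALTHCHECK_ADDRESS value."""
    host, sep, port = bind_address.rpartition(":")
    if not sep or not port:
        raise ValueError(f"expected host:port, got {bind_address!r}")
    if host in ("", "0.0.0.0"):
        host = "localhost"  # wildcard bind: probe from the same machine
    base = f"http://{host}:{port}/healthz"
    return {"live": f"{base}/live", "ready": f"{base}/ready"}


def probe(url: str, timeout: float = 5.0) -> bool:
    """Return True if the endpoint answers with a 2xx status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except OSError:  # URLError, HTTPError, and socket timeouts are OSError subclasses
        return False


if __name__ == "__main__":
    for name, url in health_urls("0.0.0.0:8083").items():
        print(name, url, "OK" if probe(url) else "unreachable")
```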

7 - Troubleshooting and debugging OpenClarity


How to debug the Scanner VMs

Docker and Kubernetes provider

With the Docker provider, scanners are created as containers; with the Kubernetes provider, they are created as pods. In both cases, you can access them directly and check their logs.

Cloud providers

On cloud providers (AWS, Azure, GCP), OpenClarity is configured to create the Scanner VMs with the same key pair as the OpenClarity server. The Scanner VMs run in a private network; however, the OpenClarity server can be used as a bastion (jump) host to reach them via SSH.

ssh -i <key-pair private key> -J ubuntu@<openclarity server public IP> ubuntu@<scanner VM private IP address>

Once SSH access has been established, you can debug the status of the VM's startup configuration by checking the cloud-init logs:

sudo journalctl -u cloud-final

You can also check the openclarity-scanner service logs:

sudo journalctl -u openclarity-scanner

8 - Archive

This section contains the documentation for VMClarity, KubeClarity, and APIClarity.

Announcement: OpenClarity Unification

We have reached the final step in the OpenClarity unification roadmap. Previously, we successfully enriched VMClarity with Kubernetes scanning and runtime features, achieving feature parity with KubeClarity.

We are currently in the process of unifying the two projects, KubeClarity and VMClarity, into one consolidated project under OpenClarity.

The VMClarity and KubeClarity repositories will not be maintained in the future. Thank you for your support and contributions as we continue to enhance and streamline the OpenClarity ecosystem.

8.1 - VM Security

VMClarity is an open source tool for agentless detection and management of Virtual Machine Software Bill Of Materials (SBOM) and security threats such as vulnerabilities, exploits, malware, rootkits, misconfigurations and leaked secrets.

VMClarity is the tool responsible for VM Security in the OpenClarity platform.

Join VMClarity’s Slack channel to hear about the latest announcements and upcoming activities. We would love to get your feedback!

Why VMClarity?

Virtual machines (VMs) are the most used service across all hyperscalers. AWS, Azure, GCP, and others have virtual computing services that are used not only as standalone VM services but also as the most popular method for hosting containers (e.g., Docker, Kubernetes).

VMs are vulnerable to multiple threats:

- Software vulnerabilities

- Leaked Secrets/Passwords

- Malware

- System Misconfiguration

- Rootkits

There are many very good open source and commercial solutions that provide threat detection for VMs, covering the different threat categories above.

However, there are challenges with assembling and managing these tools yourself:

- Complex installation, configuration, and reporting

- Integration with deployment automation

- Siloed reporting and visualization

The VMClarity project is focused on unifying detection and management of VM security threats in an agentless manner.

Overview

VMClarity uses a pluggable scanning infrastructure to provide:

- SBOM analysis

- Package and OS vulnerability detection

- Exploit detection

- Leaked secret detection

- Malware detection

- Misconfiguration detection

- Rootkit detection

The pluggable scanning infrastructure uses several tools that can be enabled/disabled on an individual basis. VMClarity normalizes, merges and provides a robust visualization of the results from these various tools.

These tools include:

- SBOM Generation and Analysis

- Vulnerability detection

- Exploits

- Secrets

- Malware

- Misconfiguration

- Rootkits

A high-level architecture overview is available in Architecture.

Roadmap

VMClarity project roadmap is available here.

8.1.1 - Architecture

Today, VMClarity has two halves: the VMClarity control plane and the VMClarity CLI.

The VMClarity control plane includes several microservices:

-

API Server: The VMClarity API for managing all objects in the VMClarity

system. This is the only component in the system which talks to the DB.

- Orchestrator: Orchestrates and manages the life cycle of VMClarity scan configs, scans, and asset scans. Within the Orchestrator there is a pluggable “provider” that connects the orchestrator to the environment to be scanned and abstracts asset discovery, VM snapshotting, and the creation of the scanner VMs. (Note: Early releases supported only the AWS provider; the Getting started section now also covers deploying on Azure, GCP, and Docker.)

- UI Backend: A separate backend API that offloads some processing from the browser to the infrastructure, so that data is processed and filtered closer to the source.

- UI Webserver: A server serving the UI static files.

- DB: Stores the VMClarity objects from the API. Supported options are SQLite and Postgres.

- Scanner Helper services: These services support the VMClarity CLI by offloading work that would otherwise need to be done in every scanner, for example downloading the latest vulnerability or malware signatures from the various DB sources. The components included today are:
  - grype-server: A REST API wrapper around the Grype vulnerability scanner
  - trivy-server: Trivy vulnerability scanner server
  - exploitDB server: A REST API that wraps the Exploit DB CVE-to-exploit mapping logic
  - freshclam-mirror: A mirror of the ClamAV malware signatures

The VMClarity CLI contains all the logic for performing a scan: from mounting attached volumes and running the pluggable scanners for all the scan families, to exporting the results to the VMClarity API.

These components are containerized and can be deployed in a number of different ways. For example, our CloudFormation installer deploys VMClarity on a VM using Docker in a dedicated AWS Virtual Private Cloud (VPC).

Once the VMClarity server instance has been deployed and the scan configurations have been created, VMClarity discovers VM resources within the scan range defined by the scan configuration (e.g., by region, instance tag, and security group). Once the asset list has been created, snapshots of the assets are taken, and new scanner VMs are launched with the snapshots attached as volumes. The VMClarity CLI running within each scanner VM performs the configured analysis on the mounted snapshot and reports the results to the VMClarity API. The VMClarity backend then processes these results into findings.
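As a rough illustration of how the API Server exposes the objects in this lifecycle, the following shell sketch lists them with curl in roughly the order they are created. It assumes the API is reachable at http://localhost:8080/api (as in the deployment guides) and that the collection paths are scanConfigs, scans, assetScans, and findings; these paths are assumptions, so check them against the API Reference before relying on them.

```shell
#!/bin/sh
# Sketch only: list VMClarity API objects with curl.
# Assumption: the API is served at $API_BASE (default http://localhost:8080/api)
# and exposes the collection paths used below; verify them in the API Reference.
API_BASE="${API_BASE:-http://localhost:8080/api}"

# Build the URL of a collection, stripping one trailing slash from API_BASE.
api_url() {
  printf '%s/%s\n' "${API_BASE%/}" "$1"
}

# Fetch a collection as JSON; -f turns HTTP errors into a non-zero exit code.
api_get() {
  curl -fsS -H 'Accept: application/json' "$(api_url "$1")"
}

# Assumed collections, roughly in lifecycle order:
# scan config -> scan -> asset scans (one per asset) -> findings.
list_scan_objects() {
  for collection in scanConfigs scans assetScans findings; do
    printf '== %s\n' "$collection"
    api_get "$collection" || return 1
  done
}
```

For example, source the file and run list_scan_objects while the SSH tunnel to the VMClarity server is open.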

8.1.2 - Getting started

This chapter guides you through the installation of the VMClarity backend and the CLI, and shows you the most common tasks that you can perform with VMClarity.

8.1.2.1 - Deploy on AWS

An AWS CloudFormation template is provided for quick deployment of the VMClarity environment.

Note: To avoid extra costs (cross-region snapshots), you may want to deploy the VMClarity AWS CloudFormation template in the same region as most of the VMs you want to scan with VMClarity.

The following figure shows the basic AWS resources that the VMClarity CloudFormation template creates:

- a VPC with a public and a private subnet, and
- an AWS Internet Gateway (IGW) and NAT Gateway (NGW) for the VPC.

The public subnet (VmClarityServerSubnet) hosts the VMClarity Server (VmClarityServer) EC2 instance. The VMClarity server houses the scanning configuration, the UI, and other control components. The EC2 instance is assigned an external IPv4 address (Elastic IP, EIP) for SSH and web UI access.

The private subnet (VmClarityScannerSubnet) hosts the scanner EC2 instances, which mount the VM snapshots and scan them for security threats.

Prerequisites

Deployment steps

To deploy the VMClarity AWS CloudFormation Stack, you can:

- click this quick-create link to navigate directly to the AWS CloudFormation console and jump to the wizard instructions, or

- complete the following steps.

- Download the latest VMClarity release.

wget https://github.com/openclarity/vmclarity/releases/download/v1.1.2/aws-cloudformation-v1.1.2.tar.gz

Alternatively, copy the AWS CloudFormation template file from the project repository to deploy the latest development code and skip the next step.

- Create a new directory and extract the files.

mkdir aws-cloudformation-v1.1.2

tar -xvzf aws-cloudformation-v1.1.2.tar.gz -C aws-cloudformation-v1.1.2

- Log in to the AWS CloudFormation console, go to the Stacks section, and select Create Stack > With New Resources (standard).

- Select Template is ready and Upload a template file, then click Choose file and upload the CloudFormation template file that you downloaded and extracted.

- In the VMClarity CloudFormation Stack wizard, set the following:
  - Enter a name for the stack.
  - Select the InstanceType (defaults to t2.large for the VMClarity Server and the scanner VMs).
  - Specify the SSH key for the EC2 instance in the KeyName field. You will need this key to connect to VMClarity.
  - Adjust SSHLocation according to your policies.
  - Do not change AdvancedConfiguration unless you are building from a custom registry.
  - Click NEXT.
  - (Optional) Add tags as needed for your environment. You can use the defaults unless you need to adjust them for your own policies.
  - Click NEXT, then scroll to the bottom of the screen and check I acknowledge….
  - Click SUBMIT.

- Once the stack is deployed successfully, copy the VMClarity SSH address from the Outputs tab.

- Open an SSH tunnel to the VMClarity server:

ssh -N -L 8080:localhost:80 -i "<Path to the SSH key specified during install>" ubuntu@<VmClarity SSH Address copied during install>

- Open the VMClarity UI in your browser at http://localhost:8080/. The dashboard opens.

- (Optional) If needed, you can access the API at http://localhost:8080/api. For details on the API, see API Reference.
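If you prefer the AWS CLI to the console wizard, stack creation can be sketched as follows. This is not part of the official installer and rests on assumptions: the template file name and path inside the extracted archive (VmClarity.cfn here), the stack name, and the key pair name are hypothetical placeholders; the parameter keys are taken from the wizard fields above; and passing both IAM capabilities assumes that the I acknowledge… checkbox refers to IAM resources. Set DRY_RUN=1 to print the commands instead of running them.

```shell
#!/bin/sh
# Sketch only: create the VMClarity stack with the AWS CLI instead of the wizard.
# Hypothetical placeholders: STACK_NAME, TEMPLATE_FILE (the template's name and
# path inside the extracted archive are assumptions) and KEY_NAME.
STACK_NAME="${STACK_NAME:-vmclarity}"
TEMPLATE_FILE="${TEMPLATE_FILE:-aws-cloudformation-v1.1.2/VmClarity.cfn}"
KEY_NAME="${KEY_NAME:-my-key-pair}"
INSTANCE_TYPE="${INSTANCE_TYPE:-t2.large}"

# With DRY_RUN=1, print each command instead of executing it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

create_vmclarity_stack() {
  # Parameter keys mirror the wizard fields; the capabilities stand in for the
  # "I acknowledge..." checkbox (assumed to cover IAM resources only).
  run aws cloudformation create-stack \
    --stack-name "$STACK_NAME" \
    --template-body "file://$TEMPLATE_FILE" \
    --parameters "ParameterKey=KeyName,ParameterValue=$KEY_NAME" \
      "ParameterKey=InstanceType,ParameterValue=$INSTANCE_TYPE" \
    --capabilities CAPABILITY_IAM CAPABILITY_NAMED_IAM || return 1
  # Wait until creation finishes; exits non-zero if the stack fails.
  run aws cloudformation wait stack-create-complete --stack-name "$STACK_NAME" || return 1
  # Print the stack outputs, which include the VMClarity SSH address.
  run aws cloudformation describe-stacks --stack-name "$STACK_NAME" \
    --query 'Stacks[0].Outputs' --output table
}
```

SSHLocation and AdvancedConfiguration are left at their template defaults here, matching the wizard guidance above.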

Next steps

Complete the First tasks on the UI.

8.1.2.2 - Deploy on Azure

Prerequisites

Deployment steps

- Click here to deploy VMClarity’s custom template.

- Fill out the required Project details and Instance details in the Basics tab.

You can set the following parameters:

| Parameter | Required | Description |
| --- | --- | --- |
| Subscription | True | Azure subscription where resources will be billed. |
| Region | False | Azure region where resources will be deployed. |
| VMClarity Deploy Postfix | True | Postfix for Azure resource group name (e.g. vmclarity-<postfix>). |
| VMClarity Server SSH Username | True | SSH Username for the VMClarity Server Virtual Machine. |
| VMClarity Server SSH Public Key | True | SSH Public Key for the VMClarity Server Virtual Machine. Paste the contents of ~/.ssh/id_rsa2.pub here. |
| VMClarity Server VM Size | True | The size of the VMClarity Server Virtual Machine. |
| VMClarity Scanner VMs Size | True | The size of the VMClarity Scanner Virtual Machines. |
| Security Type | False | Security Type of the VMClarity Server Virtual Machine, e.g. TrustedLaunch (default) or Standard. |

- (Optional) In the Advanced tab, modify the Container Image for each service if a specific VMClarity version is required. Then, select the delete policy and the database.

| Parameter | Required | Description |
| --- | --- | --- |
| Service Container Image | True | Docker Container Image to use for each service. |
| Asset Scan Delete Policy | True | Delete Policy for resources created when performing an asset scan, e.g. Always, OnSuccess or Never. |
| Database To Use | True | Database type to use, e.g. SQLite, PostgreSQL or External PostgreSQL. |

- Click Review + create to create the deployment.

- Once the deployment completes successfully, copy the VMClarity SSH address from the Outputs tab.

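- Open an SSH tunnel to the VMClarity server. Use the private key that matches the SSH public key, and the SSH username that you entered in the Basics tab:

ssh -N -L 8080:localhost:80 -i "<Path to the SSH private key>" <VMClarity Server SSH Username>@<VmClarity SSH Address copied during install>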

- Open the VMClarity UI in your browser at http://localhost:8080/. The dashboard opens.

- (Optional) If needed, you can access the API at http://localhost:8080/api. For details on the API, see API Reference.
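The tunnel and UI steps above can be scripted. The following shell sketch opens the SSH tunnel in the background and then polls the UI until it answers. SSH_KEY, SSH_USER, and SSH_HOST are hypothetical placeholders for the private key, the SSH username from the Basics tab, and the SSH address from the Outputs tab; the retry count and interval are arbitrary choices. Set DRY_RUN=1 to print the commands instead of running them.

```shell
#!/bin/sh
# Sketch only: open the SSH tunnel in the background, then wait for the UI.
# SSH_KEY, SSH_USER and SSH_HOST are hypothetical placeholders: use the private
# key matching the public key from the Basics tab, the SSH username you set
# there, and the SSH address from the Outputs tab.
SSH_KEY="${SSH_KEY:-$HOME/.ssh/id_rsa}"
SSH_USER="${SSH_USER:-vmclarity}"
SSH_HOST="${SSH_HOST:-203.0.113.10}"
UI_URL="http://localhost:8080/"

# With DRY_RUN=1, print each command instead of executing it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

open_tunnel() {
  # -f: go to the background after authenticating; -N: run no remote command.
  run ssh -f -N -L 8080:localhost:80 -i "$SSH_KEY" "$SSH_USER@$SSH_HOST"
}

# Poll the UI up to $1 times (default 30), waiting 2 seconds between attempts.
wait_for_ui() {
  tries="${1:-30}"
  while [ "$tries" -gt 0 ]; do
    if run curl -fsS -o /dev/null "$UI_URL"; then
      echo "VMClarity UI is reachable at $UI_URL"
      return 0
    fi
    tries=$((tries - 1))
    [ "$tries" -gt 0 ] && sleep 2
  done
  echo "VMClarity UI did not respond at $UI_URL" >&2
  return 1
}
```

Because ssh -f detaches, the tunnel keeps running after the script exits; stop it by ending that ssh process when you are done.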

Next steps

Complete the First tasks on the UI.

8.1.2.3 - Deploy on Docker

Prerequisites

Deployment steps

To run VMClarity in Docker on a local machine, complete the following steps.

- Download the latest VMClarity release.

wget https://github.com/openclarity/vmclarity/releases/download/v1.1.2/docker-compose-v1.1.2.tar.gz

- Create a new directory, extract the files, and navigate to the directory.

mkdir docker-compose-v1.1.2

tar -xvzf docker-compose-v1.1.2.tar.gz -C docker-compose-v1.1.2

cd docker-compose-v1.1.2

- Start all control plane components using the Docker Compose file:

docker compose --project-name vmclarity --file docker-compose.yml up -d --wait --remove-orphans

The output should be similar to:

[+] Running 14/14
⠿ Network vmclarity Created 0.2s
⠿ Volume "vmclarity_grype-server-db" Created 0.0s
⠿ Volume "vmclarity_apiserver-db-data" Created 0.0s
⠿ Container vmclarity-orchestrator-1 Healthy 69.7s
⠿ Container vmclarity-yara-rule-server-1 Healthy 17.6s
⠿ Container vmclarity-exploit-db-server-1 Healthy 17.7s
⠿ Container vmclarity-swagger-ui-1 Healthy 7.8s
⠿ Container vmclarity-trivy-server-1 Healthy 26.7s
⠿ Container vmclarity-uibackend-1 Healthy 17.6s
⠿ Container vmclarity-ui-1 Healthy 7.7s
⠿ Container vmclarity-freshclam-mirror-1 Healthy 7.8s
⠿ Container vmclarity-grype-server-1 Healthy 37.3s
⠿ Container vmclarity-gateway-1 Healthy 7.7s
⠿ Container vmclarity-apiserver-1 Healthy 17.7s

The image_override.env file lets you use images that you build yourself. You can override parameters in docker-compose.yml by passing a custom env file to the docker compose command with the --env-file flag. The /installation/docker/image_override.env file contains an example that overrides all the container images.

- Check the running containers in Docker Desktop.

- Access the VMClarity UI by navigating to http://localhost:8080/ in your browser.
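To combine the start command with the --env-file override described above and a quick status check, the following shell sketch wraps both. The optional argument is the path to your env file (for example, a copy of image_override.env); placing --env-file before up reflects that it is a top-level docker compose option. Set DRY_RUN=1 to print the commands instead of running them.

```shell
#!/bin/sh
# Sketch only: start the VMClarity control plane, optionally with a custom
# env file (for example a copy of image_override.env), then show service status.
PROJECT="vmclarity"
COMPOSE_YML="docker-compose.yml"

# With DRY_RUN=1, print each command instead of executing it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Usage: vmclarity_up [ENV_FILE]
vmclarity_up() {
  if [ -n "${1:-}" ]; then
    # --env-file is a top-level docker compose option, so it comes before "up".
    run docker compose --project-name "$PROJECT" --file "$COMPOSE_YML" \
      --env-file "$1" up -d --wait --remove-orphans || return 1
  else
    run docker compose --project-name "$PROJECT" --file "$COMPOSE_YML" \
      up -d --wait --remove-orphans || return 1
  fi
  # List the services with their state and health.
  run docker compose --project-name "$PROJECT" --file "$COMPOSE_YML" ps
}
```

For example, run vmclarity_up image_override.env from the extracted directory to start the stack with your own images.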

Next steps

Complete the First tasks on the UI.

Clean up steps

- After you have finished your tasks, stop the running containers.

docker compose --project-name vmclarity --file docker-compose.yml down --remove-orphans
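The command above keeps the named volumes (such as vmclarity_apiserver-db-data), so stored data survives the next start. If you want a completely clean slate, the following shell sketch adds an optional purge that also removes those volumes with docker compose down --volumes and then lists any remaining vmclarity_ volumes. The PURGE switch is a hypothetical convenience for this sketch; DRY_RUN=1 prints the commands instead of running them.

```shell
#!/bin/sh
# Sketch only: stop the control plane. With PURGE=1, also remove the named
# volumes (such as vmclarity_apiserver-db-data), which deletes stored data.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

vmclarity_down() {
  if [ "${PURGE:-0}" = 1 ]; then
    # --volumes also removes the named volumes declared in the compose file.
    run docker compose --project-name vmclarity --file docker-compose.yml \
      down --remove-orphans --volumes || return 1
    # Confirm that no vmclarity_ volumes remain.
    run docker volume ls --filter name=vmclarity_
  else
    run docker compose --project-name vmclarity --file docker-compose.yml \
      down --remove-orphans
  fi
}
```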

8.1.2.4 - Deploy on GCP

Prerequisites

- VMClarity is installed using the gcloud CLI, so gcloud must be installed and configured on your computer beforehand. For details on installing and configuring gcloud, see the official installation guide.

- If you have already installed VMClarity before and want to reinstall it, you must manually restore the deleted roles that were created during the previous installation.
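Before starting the deployment, you can check the gcloud prerequisite with a short shell sketch like the one below. It verifies that gcloud is on the PATH, that an account is active, and that a default project is set. Treating "(unset)" as a missing project is a defensive assumption about how gcloud reports an empty value. The function name is hypothetical.

```shell
#!/bin/sh
# Sketch only: check the gcloud prerequisites before deploying VMClarity.
check_gcloud() {
  if ! command -v gcloud >/dev/null 2>&1; then
    echo "gcloud not found: install the Google Cloud CLI first" >&2
    return 1
  fi
  # Active credentialed account, if any.
  account=$(gcloud auth list --filter=status:ACTIVE --format='value(account)' 2>/dev/null)
  if [ -z "$account" ]; then
    echo "no active account: run 'gcloud auth login'" >&2
    return 1
  fi
  # Default project; treat "(unset)" as missing, defensively.
  project=$(gcloud config get-value project 2>/dev/null)
  if [ -z "$project" ] || [ "$project" = "(unset)" ]; then
    echo "no default project: run 'gcloud config set project PROJECT_ID'" >&2
    return 1
  fi
  echo "gcloud OK: account=$account project=$project"
}
```

For the reinstall case, a deleted custom role can be restored with gcloud iam roles undelete ROLE_ID --project=PROJECT_ID. The role IDs depend on your previous installation.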

Deployment steps

To install VMClarity on Google Cloud Platform (GCP), complete the following steps.

- Download the latest GCP deployment release from GitHub.

wget https://github.com/openclarity/vmclarity/releases/download/v1.1.2/gcp-deployment-v1.1.2.tar.gz

- Create a new directory, extract the files, and navigate to the directory.

mkdir gcp-deployment-v1.1.2

tar -xvzf gcp-deployment-v1.1.2.tar.gz -C gcp-deployment-v1.1.2

cd gcp-deployment-v1.1.2

- Copy the example configuration file and rename it.

cp vmclarity-config.example.yaml vmclarity-config.yaml

- The following table lists all the fields that can be set in the vmclarity-config.yaml file. You must set at least the required ones.

| Field | Required | Default | Description |
| --- | --- | --- | --- |
| zone | yes | | The zone in which to deploy the VMClarity server. |